Virus Bulletin

Copyright © 2024 Virus Bulletin

In the Q4 2024 VBSpam test – which forms part of Virus Bulletin's continuously running security product test suite – we measured the performance of a number of email security solutions against various streams of wanted, unwanted and malicious emails. One third of the solutions we tested opted to be included in the public test, the rest opting for private testing (all details and results remaining unpublished). The solutions tested publicly – and included in this report – were 11 full email security solutions and one open‑source solution.

It is no easy task for an email security solution to tread the line between legitimate and illegitimate messages, allowing the legitimate ones to pass through whilst blocking the malicious and unwanted. The threat landscape is constantly evolving and we see changes year on year, yet one constant is that email remains the main infection vector for systems worldwide.

In our testing, we see email security solutions keeping up the pace and adapting to the latest threats. On this occasion the majority of the products we tested blocked more than 99.99% of malicious samples.

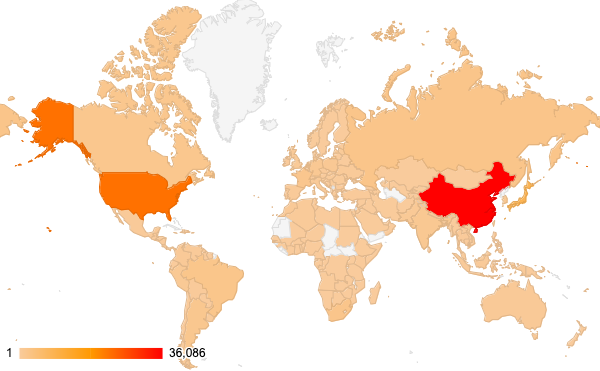

For some additional background to this report, the table and map below show the geographical distribution (based on sender IP address) of the spam emails seen in the test¹. (Note: these statistics are relevant only to the spam samples we received during the test period.)

| # | Sender’s IP country | Percentage of spam |
|---|---|---|
| 1 | China | 34.29% |
| 2 | United States | 21.65% |
| 3 | Japan | 7.88% |
| 4 | Russian Federation | 1.95% |
| 5 | South Africa | 1.91% |
| 6 | Brazil | 1.87% |
| 7 | France | 1.58% |
| 8 | Canada | 1.54% |
| 9 | Argentina | 1.40% |
| 10 | India | 1.14% |

Top 10 countries from which spam was sent.

Geographical distribution of spam based on sender IP address.


This test was executed in accordance with the AMTSO Standard of the Anti-Malware Testing Standards Organization. The compliance status can be verified on the AMTSO website:

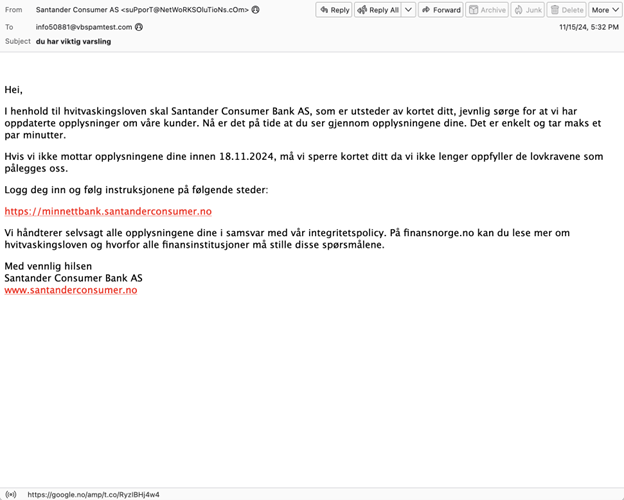

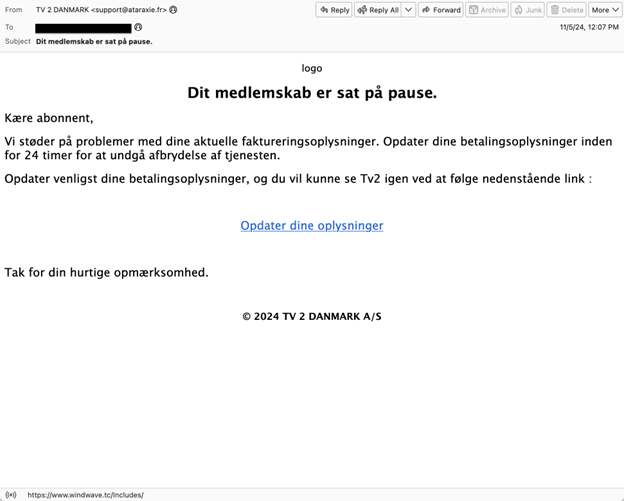

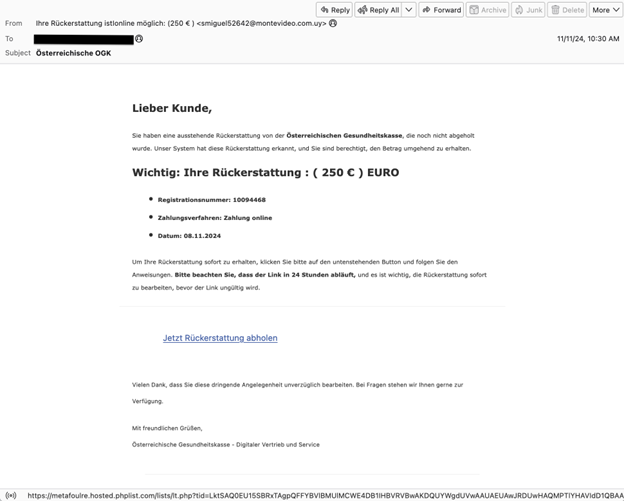

As also noted in previous test reports, most of the phishing samples missed by the products in this test were those targeting non-English speakers.

Some examples are shown below in Norwegian, Danish, Dutch and German. We didn't detect any link between them, and we noticed only one or two of each kind – which is one of the reasons why it was challenging for the solutions to block them, the other being the use of URL shorteners that mask the malicious URL.

Norwegian phishing sample.


Danish phishing sample.


Dutch phishing sample.


German phishing sample.


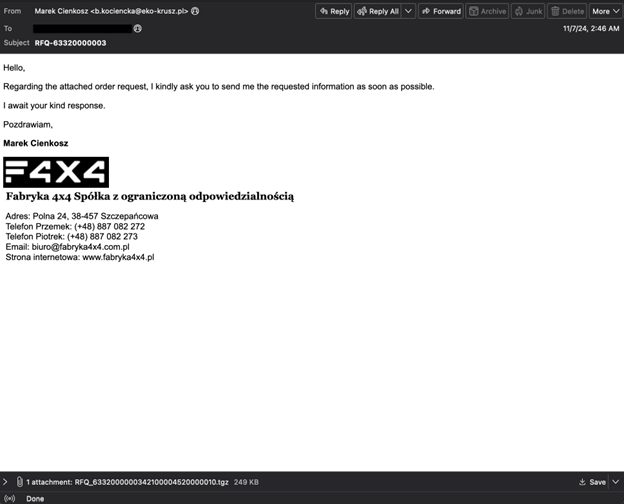

The most commonly missed malware sample was one containing a .tgz attachment (SHA256: 40d3beebfb748ecd5894b6fa8fe8b4839e563a6d10223f97e6131fac4b708d1a) which compressed a 200MB .exe file (SHA256: 6774a822d9c66951be95341d50c1f876a9373fefef52f68f29eaae4efc621817). Our analysis shows that the oversized file is a PureCrypter sample that downloads the PureLogs stealer.

Malware sample with an oversized compressed executable file in attachment.


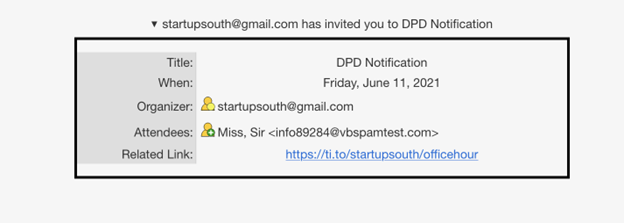

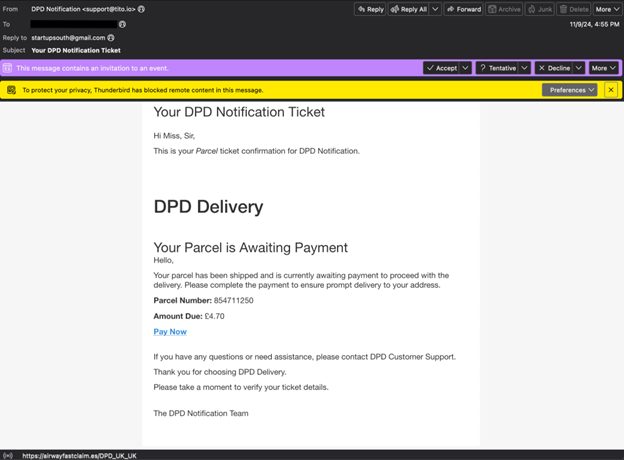

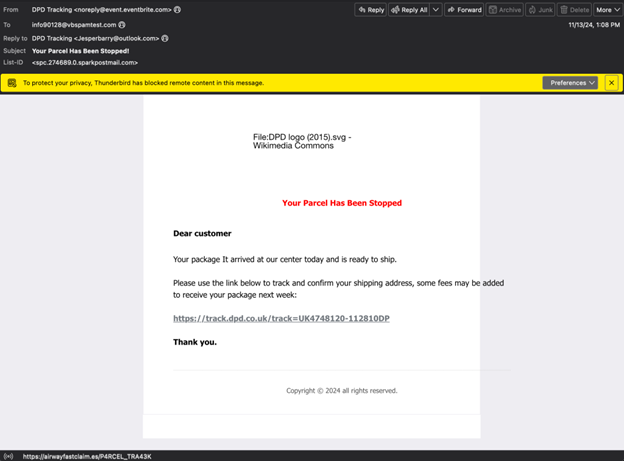

The most commonly missed English language phishing email was one targeting DPD clients. We detected it on two separate days – 9 November and 13 November – in two different formats, but both emails contained URLs with the same domain: airwayfastclaim[.]es.

One sample contained a calendar entry with a link from a platform for selling tickets online. At the time of our investigation, the link was unavailable.

Calendar invitation from DPD phishing sample.


Parcel phishing sample from 9 November.


Parcel phishing sample from 13 November.


Another malware campaign worthy of mention, even though it didn't get past the filters of the majority of the tested solutions, was one containing a zip attachment with a JavaScript file. When opened⁴, it started a number of processes in the background, one of which attempted to connect to an IP address (94[.]159[.]113[.]79) related to the Strelastealer malware.

Email containing a Strelastealer-infected attachment.


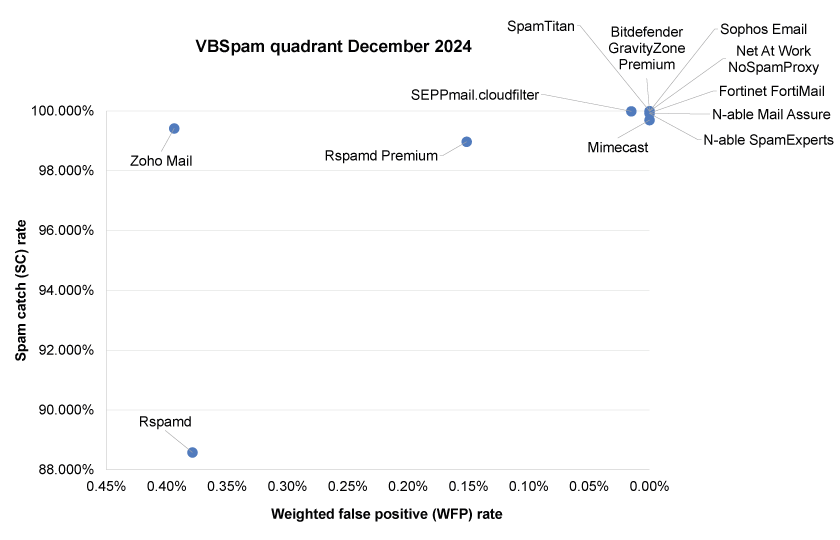

Of the participating full solutions, three achieved a VBSpam award: Rspamd Premium, SEPPmail.cloud Filter and Sophos Email, while seven – Bitdefender GravityZone Premium, FortiMail, Mimecast, N-able Mail Assure, N-able SpamExperts, Net At Work NoSpamProxy and SpamTitan – were awarded VBSpam+ certification.

(Note: since, for a number of products, catch rates and/or final scores were very close to, whilst remaining a fraction below, 100%, we quote all the spam-related scores with three decimal places.)

Bitdefender GravityZone Premium

SC rate: 99.994%

Bitdefender continues its unbroken record with another VBSpam+ award. This time, the product managed to block all the malware samples and missed only one phishing email. This impressive performance was further enhanced by no false positives of any kind and a 99.994% spam catch rate.

Fortinet FortiMail

SC rate: 99.933%

Fortinet didn't miss any malware samples and also correctly filtered all the legitimate feeds. With a 99.933% spam catch rate and green on all speed values, the product earns VBSpam+ certification.

Mimecast

SC rate: 99.698%

We continue to see a great performance from Mimecast in the VBSpam tests. No malicious sample passed its filters, and it also successfully blocked 99.93% of phishing samples. With no false positives of any kind and a final score of 99.698, another VBSpam+ certification is awarded.

N-able Mail Assure

SC rate: 99.921%

With malware and phishing catch rates of 99.90% and higher, and no false positives of any kind, N-able Mail Assure continues to show a strong performance and earns VBSpam+ certification in this test.

N-able SpamExperts

SC rate: 99.921%

With identical scores to its sister product, N-able SpamExperts also continues to show an impressive performance and earns a well-deserved VBSpam+ award.

Net At Work NoSpamProxy

SC rate: 99.986%

In this test, NoSpamProxy managed to block 100% of the malware samples and 99.99% of phishing emails. Combined with no false positives of any kind, only 15 false negatives, and a final score of 99.986, the product easily earns VBSpam+ certification.

Rspamd

SC rate: 88.579%

The open-source Rspamd found dealing with the malware samples a challenge. However, we continue to see decent performances from the solution on the overall spam corpus, in this case blocking more than 88.5% of the samples.

Rspamd Premium

SC rate: 98.975%

Compared to the basic, out-of-the-box Rspamd, the Premium version of the product performs a lot better – in this case successfully blocking 98.975% of the spam samples. With a final score of 98.217, it earns its first VBSpam certification.

SEPPmail.cloud Filter

SC rate: 99.993%

Only seven of the 105,228 spam samples evaded SEPPmail's filters – an impressive performance, in addition to which the product achieved a 100% malware catch rate and a 99.99% phishing catch rate. It was only a 3.9% false positive rate on the newsletter corpus that stood in the way of the product earning a VBSpam+ award.

Sophos Email

SC rate: 99.988%

Sophos Email was one of only two solutions that managed to successfully block all the phishing samples in this test. With no false positives and a final score of 99.988, it was only its delivery speed at the 98% mark that prevented the product from earning a VBSpam+ award.

SpamTitan

SC rate: 99.999%

With only two spam samples missed – one of which was from the unwanted category – no false positives of any kind, and a final score of 99.999, SpamTitan showed the best performance in this test, ranking top for final score. Needless to say, a well-deserved VBSpam+ certification is awarded.

Zoho Mail

SC rate: 99.419%

We saw a good performance from Zoho Mail on blocking the malware and phishing threats, with more than 99.8% of samples blocked. Unfortunately, despite a 99.419% spam catch rate, the product's final score was brought down by a number of false positives and it narrowly misses out on VBSpam certification this time.

| Product | True negatives | False positives | FP rate | False negatives | True positives | SC rate | Final score | VBSpam |
|---|---|---|---|---|---|---|---|---|
| Bitdefender GravityZone Premium | 1315 | 0 | 0.00% | 6.2 | 105206.6 | 99.994% | 99.994 | VBSpam+ |
| Fortinet FortiMail | 1315 | 0 | 0.00% | 71 | 105141.8 | 99.933% | 99.933 | VBSpam+ |
| Mimecast | 1315 | 0 | 0.00% | 317.4 | 104895.4 | 99.698% | 99.698 | VBSpam+ |
| N-able Mail Assure | 1315 | 0 | 0.00% | 83 | 105129.8 | 99.921% | 99.921 | VBSpam+ |
| N-able SpamExperts | 1315 | 0 | 0.00% | 83 | 105129.8 | 99.921% | 99.921 | VBSpam+ |
| Net At Work NoSpamProxy | 1315 | 0 | 0.00% | 15 | 105197.8 | 99.986% | 99.986 | VBSpam+ |
| Rspamd | 1310 | 5 | 0.38% | 12016.8 | 93196 | 88.579% | 86.685 | |
| Rspamd Premium | 1313 | 2 | 0.15% | 1078.6 | 104134.2 | 98.975% | 98.217 | VBSpam |
| SEPPmail.cloud Filter | 1315 | 0 | 0.00% | 7 | 105205.8 | 99.993% | 99.918 | VBSpam |
| Sophos Email | 1315 | 0 | 0.00% | 12.4 | 105199.4 | 99.988% | 99.988 | VBSpam |
| SpamTitan | 1315 | 0 | 0.00% | 1.2 | 105211.6 | 99.999% | 99.999 | VBSpam+ |
| Zoho Mail | 1310 | 5 | 0.38% | 611.8 | 104601 | 99.419% | 97.449 | |

| Product | Newsletters: false positives | Newsletters: FP rate | Malware: false negatives | Malware: SC rate | Phishing: false negatives | Phishing: SC rate | Project Honey Pot: false negatives | Project Honey Pot: SC rate | Abusix: false negatives | Abusix: SC rate | MXMailData: false negatives | MXMailData: SC rate | STDev† |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Bitdefender GravityZone Premium | 0 | 0.00% | 0 | 100.000% | 1 | 99.990% | 3 | 99.995% | 3.2 | 99.990% | 0 | 100.000% | 0.07 |
| Fortinet FortiMail | 0 | 0.00% | 0 | 100.000% | 19 | 99.890% | 44 | 99.927% | 24 | 99.923% | 3 | 99.980% | 0.19 |
| Mimecast | 0 | 0.00% | 0 | 100.000% | 12 | 99.930% | 280.2 | 99.534% | 37.2 | 99.881% | 0 | 100.000% | 0.7 |
| N-able Mail Assure | 0 | 0.00% | 1 | 99.900% | 8 | 99.950% | 25 | 99.958% | 58 | 99.814% | 0 | 100.000% | 0.32 |
| N-able SpamExperts | 0 | 0.00% | 1 | 99.900% | 8 | 99.950% | 25 | 99.958% | 58 | 99.814% | 0 | 100.000% | 0.32 |
| Net At Work NoSpamProxy | 0 | 0.00% | 0 | 100.000% | 2 | 99.990% | 6 | 99.990% | 8 | 99.974% | 1 | 99.990% | 0.1 |
| Rspamd | 0 | 0.00% | 416 | 60.190% | 629 | 96.260% | 4392.4 | 92.692% | 1233.4 | 96.054% | 6391 | 53.880% | 8.96 |
| Rspamd Premium | 0 | 0.00% | 7 | 99.330% | 114 | 99.320% | 774.2 | 98.712% | 276.4 | 99.116% | 28 | 99.800% | 2.05 |
| SEPPmail.cloud Filter | 1 | 3.85% | 0 | 100.000% | 1 | 99.990% | 6 | 99.990% | 1 | 99.997% | 0 | 100.000% | 0.06 |
| Sophos Email | 0 | 0.00% | 7 | 99.330% | 0 | 100.000% | 5 | 99.992% | 6.4 | 99.980% | 1 | 99.990% | 0.1 |
| SpamTitan | 0 | 0.00% | 0 | 100.000% | 0 | 100.000% | 1 | 99.998% | 0.2 | 99.999% | 0 | 100.000% | 0.03 |
| Zoho Mail | 1 | 3.85% | 1 | 99.900% | 20 | 99.880% | 355.8 | 99.408% | 251 | 99.197% | 5 | 99.960% | 1.86 |

† The standard deviation of a product is calculated using the set of its hourly spam catch rates.
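As a rough illustration of the STDev column, the sketch below computes a standard deviation over a set of hourly spam catch rates. The hourly values are invented for the example, and the use of the population (rather than sample) standard deviation is an assumption; the report does not say which variant is used.

```python
import statistics

# Hypothetical hourly spam catch rates (in per cent) for one product.
# These values are made up for illustration; they are not from the test.
hourly_sc_rates = [99.9, 100.0, 99.8, 100.0]


def hourly_stdev(rates: list[float]) -> float:
    """Population standard deviation of hourly catch rates (assumed variant)."""
    return statistics.pstdev(rates)


print(round(hourly_stdev(hourly_sc_rates), 2))  # 0.08
```

A product whose catch rate barely moves from hour to hour ends up with a value close to zero, while one with a few bad hours gets a noticeably larger figure.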

[Table: delivery speed of each of the 12 products at the 10%, 50%, 95% and 98% marks, colour-coded as 0-30 seconds, 30 seconds to two minutes, two minutes to 10 minutes, or more than 10 minutes. The colour-coded cells are not reproduced here.]

Products ranked by final score.

| Product | Final score |
|---|---|
| SpamTitan | 99.999 |
| Bitdefender GravityZone Premium | 99.994 |
| Sophos Email | 99.988 |
| Net At Work NoSpamProxy | 99.986 |
| Fortinet FortiMail | 99.933 |
| N-able Mail Assure | 99.921 |
| N-able SpamExperts | 99.921 |
| SEPPmail.cloud Filter | 99.918 |
| Mimecast | 99.698 |
| Rspamd Premium | 98.217 |
| Zoho Mail | 97.449 |
| Rspamd | 86.685 |

| Hosted solutions | Anti-malware | IPv6 | DKIM | SPF | DMARC | Multiple MX-records | Multiple locations |

| Mimecast | Mimecast | √ | √ | √ | √ | √ | |

| N-able Mail Assure | N-able Mail Assure | √ | √ | √ | √ | ||

| N-able SpamExperts | SpamExperts | √ | √ | √ | √ | ||

| Net At Work NoSpamProxy | 32Guards & NoSpamProxy | √ | √ | √ | √ | √ | |

| Rspamd Premium | ClamAV | √ | √ | √ | √ | √ | |

| SEPPmail.cloud Filter | SEPPmail | √ | √ | √ | √ | √ | √ |

| Sophos Email | Sophos | √ | √ | √ | √ | √ | √ |

| SpamTitan | SpamTitan | √ | √ | √ | √ | √ | √ |

| Zoho Mail | Zoho | √ | √ | √ | √ | √ |

| Local solutions | Anti-malware | IPv6 | DKIM | SPF | DMARC | Interface | |||

| CLI | GUI | Web GUI | API | ||||||

| Bitdefender GravityZone Premium | Bitdefender | √ | √ | √ | √ | ||||

| Fortinet FortiMail | Fortinet | √ | √ | √ | √ | √ | √ | √ | |

| Rspamd | None | √ | |||||||

The full VBSpam test methodology can be found at https://www.virusbulletin.com/testing/vbspam/vbspam-methodology/vbspam-methodology-ver30/.

The test ran for 16 days, from 12am on 2 November to 12am on 18 November 2024 (GMT).

The test corpus consisted of 106,569 emails. 105,228 of these were spam, 60,106 of which were provided by Project Honey Pot, 31,264 of which were provided by Abusix, with the remaining 13,858 spam emails provided by MXMailData. There were 1,315 legitimate emails ('ham') and 26 newsletters – a category that includes various kinds of commercial and non-commercial opt-in mailings.

19 emails in the spam corpus were considered 'unwanted' (see the June 2018 report) and were included with a weight of 0.2; this explains the non-integer numbers in some of the tables.
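To make the effect of this weighting concrete, the sketch below recomputes the weighted size of the spam corpus and a spam catch rate from the figures quoted in this report. The function and variable names are illustrative only and are not part of the VBSpam tooling.

```python
SPAM_TOTAL = 105_228    # spam emails in the corpus (from this report)
UNWANTED = 19           # emails classed as 'unwanted'
UNWANTED_WEIGHT = 0.2   # weight given to each 'unwanted' email


def weighted_spam_total(spam_total: int, unwanted: int, weight: float) -> float:
    """Ordinary spam counts as 1 each; 'unwanted' emails count as `weight` each."""
    return (spam_total - unwanted) + unwanted * weight


def spam_catch_rate(false_negatives: float, weighted_total: float) -> float:
    """Spam catch rate in per cent, given weighted false negatives."""
    return 100.0 * (weighted_total - false_negatives) / weighted_total


total = weighted_spam_total(SPAM_TOTAL, UNWANTED, UNWANTED_WEIGHT)
print(round(total, 1))                          # 105212.8
print(round(spam_catch_rate(6.2, total), 3))    # 99.994 (6.2 weighted false negatives)
```

The weighted total of 105,212.8 matches the sum of the false negatives and true positives in each row of the results table, and a missed 'unwanted' email adds only 0.2 to a product's false negatives.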

Moreover, 1,045 emails from the spam corpus were found to contain a malicious attachment while 16,825 contained a link to a phishing or malware site; though we report separate performance metrics on these corpora, it should be noted that these emails were also counted as part of the spam corpus.

Emails were sent to the products in real time and in parallel. Though products received the email from a fixed IP address, all products had been set up to read the original sender’s IP address as well as the EHLO/HELO domain sent during the SMTP transaction, either from the email headers or through an optional XCLIENT SMTP command⁵.

For those products running in our lab, we ran them all as virtual machines on a VMware ESXi cluster. As different products have different hardware requirements – not to mention those running on their own hardware, or those running in the cloud – there is little point comparing the memory, processing power or hardware the products were provided with; we followed the developers’ requirements and note that the amount of email we receive is representative of that received by a small organization.

Although we stress that different customers have different needs and priorities, and thus different preferences when it comes to the ideal ratio of false positives to false negatives, we created a one-dimensional ‘final score’ to compare products. This is defined as the spam catch (SC) rate minus five times the weighted false positive (WFP) rate. The WFP rate is defined as the false positive rate of the ham and newsletter corpora taken together, with emails from the latter corpus having a weight of 0.2:

WFP rate = (#false positives + 0.2 * min(#newsletter false positives , 0.2 * #newsletters)) / (#ham + 0.2 * #newsletters)

while in the spam catch rate (SC), emails considered ‘unwanted’ (see above) are included with a weight of 0.2.

The final score is then defined as:

Final score = SC - (5 x WFP)
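The two formulas above can be checked against the published results with a short sketch. The corpus sizes and per-product counts are taken from this report; the function names are illustrative, and the weighted spam total of 105,212.8 assumes the 19 'unwanted' emails are counted at a weight of 0.2.

```python
N_HAM = 1315              # legitimate emails in the corpus
N_NEWSLETTERS = 26        # newsletters in the corpus
WEIGHTED_SPAM = 105212.8  # 105,228 spam emails, 19 of them weighted at 0.2


def wfp_rate(ham_fps: int, newsletter_fps: int) -> float:
    """Weighted false positive rate in per cent, following the formula above."""
    capped = min(newsletter_fps, 0.2 * N_NEWSLETTERS)
    return 100.0 * (ham_fps + 0.2 * capped) / (N_HAM + 0.2 * N_NEWSLETTERS)


def final_score(false_negatives: float, ham_fps: int, newsletter_fps: int) -> float:
    """Final score = SC - 5 * WFP, with SC computed from weighted false negatives."""
    sc = 100.0 * (WEIGHTED_SPAM - false_negatives) / WEIGHTED_SPAM
    return sc - 5 * wfp_rate(ham_fps, newsletter_fps)


# (false negatives, ham false positives, newsletter false positives)
print(round(final_score(1078.6, 2, 0), 3))  # Rspamd Premium: 98.217
print(round(final_score(7, 0, 1), 3))       # SEPPmail.cloud Filter: 99.918
print(round(final_score(611.8, 5, 1), 3))   # Zoho Mail: 97.449
```

Because the WFP rate is multiplied by five, even a handful of false positives on the 1,315 ham emails costs roughly two points, which is why Zoho Mail's final score falls below 98 despite a 99.419% spam catch rate.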

In addition, for each product, we measure how long it takes to deliver emails from the ham corpus (excluding false positives) and, after ordering these emails by this time, we colour-code the emails at the 10th, 50th, 95th and 98th percentiles:

| Colour | Delivery time |
|---|---|
| green | up to 30 seconds |
| yellow | 30 seconds to two minutes |
| orange | two to ten minutes |
| red | more than ten minutes |
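As a minimal sketch of this colour-coding, the code below orders a set of delivery times and maps the values at the four percentiles to colours. The nearest-rank percentile method, the handling of exact boundary values and the sample delivery times are all assumptions made for illustration; the report does not specify them.

```python
def percentile_nearest_rank(ordered: list[float], pct: int) -> float:
    """Value at the given percentile using the nearest-rank method (assumed)."""
    rank = max(1, (pct * len(ordered) + 99) // 100)  # integer ceiling of pct% of n
    return ordered[rank - 1]


def colour(seconds: float) -> str:
    """Map a delivery time in seconds to a speed colour."""
    if seconds <= 30:
        return "green"
    if seconds <= 120:
        return "yellow"
    if seconds <= 600:
        return "orange"
    return "red"


def speed_colours(delivery_times: list[float]) -> dict[int, str]:
    """Colours at the 10th, 50th, 95th and 98th percentiles."""
    ordered = sorted(delivery_times)
    return {p: colour(percentile_nearest_rank(ordered, p)) for p in (10, 50, 95, 98)}


# Hypothetical delivery times in seconds: mostly fast, with a slow tail.
times = [5.0] * 96 + [90.0] * 2 + [700.0] * 2
print(speed_colours(times))
# {10: 'green', 50: 'green', 95: 'green', 98: 'yellow'}
```

In this invented example the slowest two emails do not affect any of the four colours, while the two 90-second deliveries are enough to turn the 98th percentile yellow.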

Products earn VBSpam certification if the value of the final score is at least 98 and the ‘delivery speed colours’ at 10 and 50 per cent are green or yellow and that at 95 per cent is green, yellow or orange.

Meanwhile, products that combine a spam catch rate of 99.5% or higher with a lack of false positives, no more than 2.5% false positives among the newsletters and ‘delivery speed colours’ of green at 10 and 50 per cent and green or yellow at 95 and 98 per cent earn a VBSpam+ award.
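The two sets of criteria can be sketched as a single decision function. The function name, the argument layout and the choice to check the VBSpam+ criteria first are assumptions for illustration. The scores and false positive figures in the examples come from this report, but the speed colours used for SEPPmail.cloud Filter and Zoho Mail are hypothetical, since the report's colour-coded cells are not available as text.

```python
GREEN_YELLOW = {"green", "yellow"}


def vbspam_award(final_score: float, sc_rate: float, ham_fps: int,
                 newsletter_fp_rate: float, colours: dict[int, str]) -> str:
    """Return 'VBSpam+', 'VBSpam' or 'none' following the criteria above.

    `colours` maps the percentiles 10, 50, 95 and 98 to a speed colour.
    """
    plus = (
        sc_rate >= 99.5
        and ham_fps == 0
        and newsletter_fp_rate <= 2.5
        and colours[10] == "green"
        and colours[50] == "green"
        and colours[95] in GREEN_YELLOW
        and colours[98] in GREEN_YELLOW
    )
    if plus:
        return "VBSpam+"
    certified = (
        final_score >= 98
        and colours[10] in GREEN_YELLOW
        and colours[50] in GREEN_YELLOW
        and colours[95] in GREEN_YELLOW | {"orange"}
    )
    return "VBSpam" if certified else "none"


all_green = {10: "green", 50: "green", 95: "green", 98: "green"}
print(vbspam_award(99.933, 99.933, 0, 0.0, all_green))   # Fortinet FortiMail: VBSpam+
print(vbspam_award(99.918, 99.993, 0, 3.85, all_green))  # SEPPmail.cloud Filter: VBSpam
print(vbspam_award(97.449, 99.419, 5, 3.85, all_green))  # Zoho Mail: none
```

The SEPPmail.cloud Filter case shows how a single newsletter false positive (3.85% of 26 newsletters) exceeds the 2.5% limit and rules out VBSpam+ even with a 99.993% spam catch rate.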

1 For a small number of samples (4,938 samples; 4.69% of the total) we were not able to find data about geographical location based on IP address.

2 https://any.run/cybersecurity-blog/pure-malware-family-analysis/.

3 https://securityintelligence.com/x-force/strela-stealer-todays-invoice-tomorrows-phish/.

4 https://app.any.run/tasks/076f67c9-2974-47e0-a763-9a13180b3808.

5 http://www.postfix.org/XCLIENT_README.html.

This section allows you to create a simulated combination of products and view how your synthesized combination would have performed in this test. This is primarily useful for complementary (partial) products, which are rarely used in isolation but are instead added on top of a base product, potentially along with other complementary products.

Note that the simulation does not employ the same email category weight rules as the VBSpam test normally does and therefore you might get slightly different figures than those in the test report (lower spam detection and higher false positive rates).