Virus Bulletin

Copyright © Virus Bulletin 2016

Spam isn't that much of a problem these days – which may sound like an unusual statement coming from an organization that started testing spam filters precisely because it was such a problem. Yet it is no secret that there are many products that help to mitigate the spam problem; the VBSpam tests have been testament to that.

However, 'not much of a problem' isn't the same as no problem. The problems that remain with email exist mostly in the margins: phishing emails and, especially, emails with malicious attachments. These get blocked in the vast majority of cases, but are those block rates good enough? After all, a user needs to open just one malicious attachment to get infected with ransomware. (Which is why we, like most security experts, recommend running an endpoint security product alongside an email security product.)

In these tests we have always focused on spam as a problem of volume, and this should remain an important focus for any product in the email security market: it is still not reasonable to expect anyone to use email without a spam filter protecting their inbox, and it probably never will be.

However, we now plan also to focus on the explicitly malicious aspect of spam. In this test report, we will give a sneak preview of how we will be doing this; in future test reports, we will report on the ability of email security products to block malicious attachments.

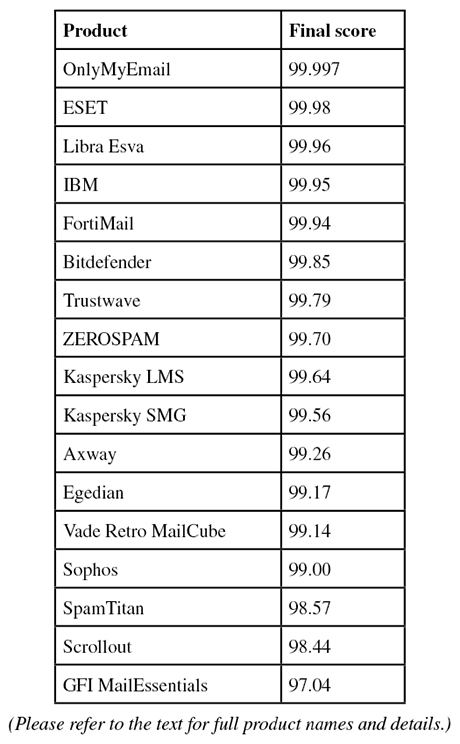

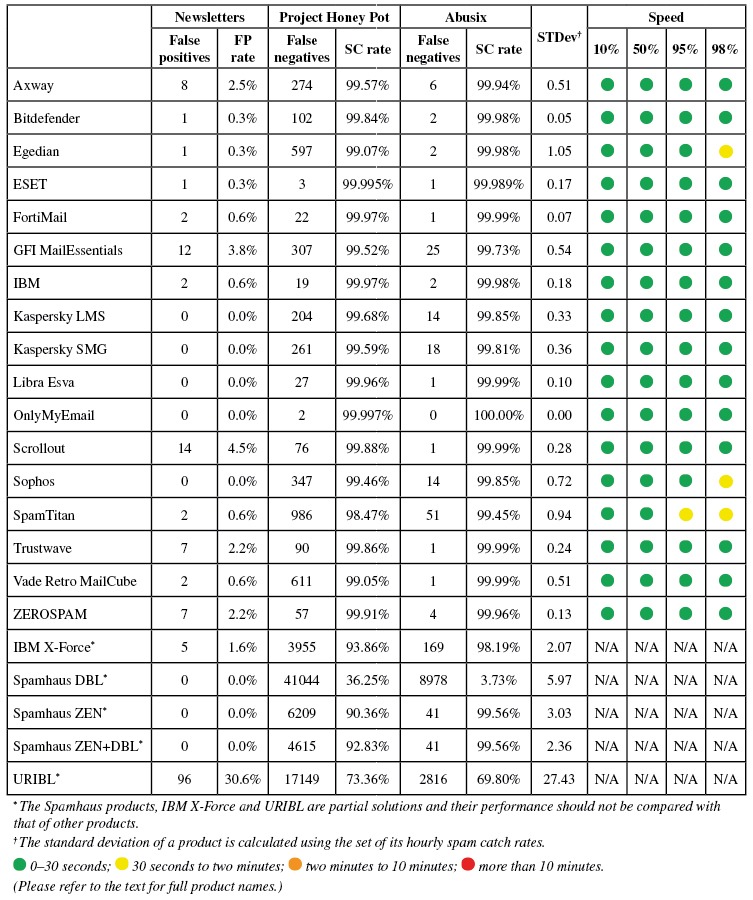

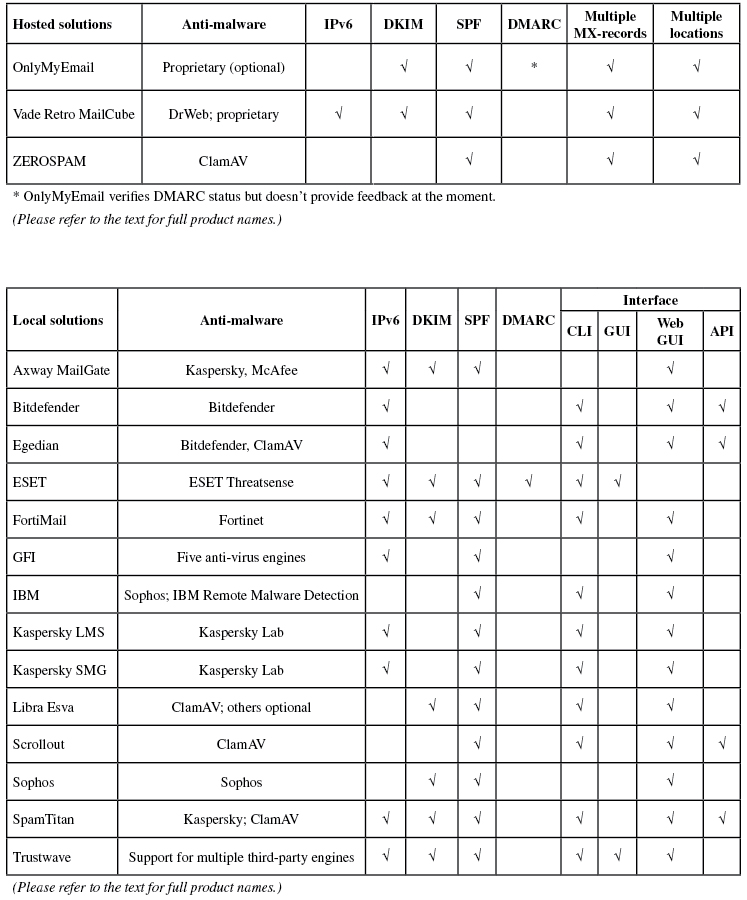

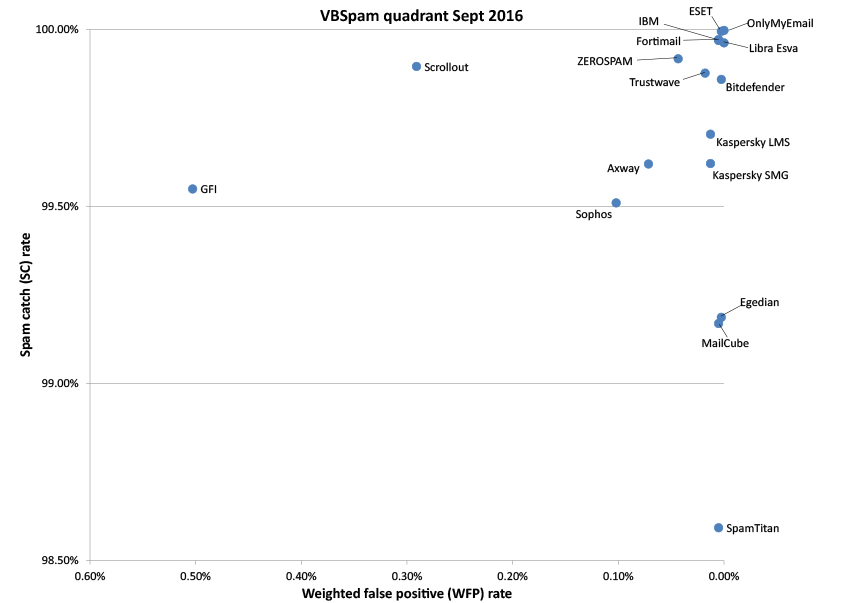

A total of 17 full email security (or anti-spam) solutions took part in this test, all of which achieved VBSpam certification. Seven of them performed well enough to earn the VBSpam+ accolade. We also tested five DNS-based blocklists.

The VBSpam test methodology can be found at https://www.virusbulletin.com/testing/vbspam/vbspam-methodology/. As usual, emails were sent to the products in parallel and in real time, and products were given the option to block email pre-DATA (that is, based on the SMTP envelope and before the actual email was sent). However, on this occasion no products chose to make use of this option.

For those products running on our equipment, we use Dell PowerEdge machines. As different products have different hardware requirements (not to mention those running on their own hardware or in the cloud), there is little point in comparing the memory, processing power or hardware the products were provided with; we followed the developers' requirements and note that the amount of email we receive is representative of that received by a small organization.

To compare the products, we calculate a 'final score', which is defined as the spam catch (SC) rate minus five times the weighted false positive (WFP) rate. The WFP rate is defined as the false positive rate of the ham and newsletter corpora taken together, with emails from the latter corpus having a weight of 0.2:

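$$\text{WFP rate} = \frac{\#\text{ham false positives} + 0.2 \times \min(\#\text{newsletter false positives},\ 0.2 \times \#\text{newsletters})}{\#\text{ham} + 0.2 \times \#\text{newsletters}}$$

$$\text{Final score} = \text{SC} - 5 \times \text{WFP}$$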
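As a minimal sketch of the scoring arithmetic, the two formulas can be written as Python functions. The function names and the example false positive counts are hypothetical; all rates are expressed as percentages, as in the results in this report.

```python
def weighted_fp_rate(ham_fps: int, ham_total: int,
                     newsletter_fps: int, newsletter_total: int) -> float:
    """Weighted false positive (WFP) rate, as a percentage."""
    # Newsletter false positives are capped at 20% of the newsletter
    # corpus, then weighted at 0.2 relative to ham.
    capped_newsletter_fps = min(newsletter_fps, 0.2 * newsletter_total)
    numerator = ham_fps + 0.2 * capped_newsletter_fps
    denominator = ham_total + 0.2 * newsletter_total
    return 100.0 * numerator / denominator


def final_score(sc_rate: float, wfp_rate: float) -> float:
    """Final score = SC - 5 x WFP, with both rates in per cent."""
    return sc_rate - 5.0 * wfp_rate
```

With this test's corpus sizes (7,772 ham emails and 314 newsletters) and hypothetical counts of 4 ham and 8 newsletter false positives, a 99.62% spam catch rate gives a WFP rate of roughly 0.071% and a final score of roughly 99.26. Note the effect of the cap: once more than 20% of the newsletters are blocked, further newsletter false positives no longer lower the score.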

In addition, for each product, we measure how long it takes to deliver emails from the ham corpus (excluding false positives) and, after ordering these emails by this time, we colour-code the emails at the 10th, 50th, 95th and 98th percentiles:

| Colour | Delivery time |
|---|---|
| Green | up to 30 seconds |
| Yellow | 30 seconds to two minutes |
| Orange | two to ten minutes |
| Red | more than ten minutes |
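The percentile colour coding can be sketched as follows, given the delivery times (in seconds) of the ham emails that were delivered, with false positives excluded. Two details are assumptions not stated in the methodology: each band is taken to include its upper bound (exactly 30 seconds counts as green), and percentiles use the nearest-rank definition.

```python
from typing import Dict, Iterable, Sequence


def speed_colour(seconds: float) -> str:
    """Map one delivery time to a colour band.

    Assumption: each band includes its upper bound.
    """
    if seconds <= 30:
        return "green"
    if seconds <= 120:
        return "yellow"
    if seconds <= 600:
        return "orange"
    return "red"


def percentile_colours(delivery_times: Sequence[float],
                       percentiles: Iterable[int] = (10, 50, 95, 98)) -> Dict[int, str]:
    """Colour-code the emails found at each percentile of delivery time.

    Uses the nearest-rank percentile (an assumption); percentiles must be
    integers between 1 and 100.
    """
    if not delivery_times:
        raise ValueError("need at least one delivery time")
    ordered = sorted(delivery_times)
    n = len(ordered)
    colours = {}
    for p in percentiles:
        # Integer ceiling of p * n / 100 gives the 1-based nearest rank.
        rank = max(1, -(-p * n // 100))
        colours[p] = speed_colour(ordered[rank - 1])
    return colours
```

For example, 100 hypothetical delivery times made up of 90 at 5 seconds, 6 at 60 seconds, 2 at 300 seconds and 2 at 900 seconds are coloured green at 10 and 50 per cent, yellow at 95 per cent and orange at 98 per cent. Integer arithmetic is used for the rank so that floating-point rounding cannot shift it by one.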

Products earn VBSpam certification if the value of the final score is at least 98 and the 'delivery speed colours' at 10 and 50 per cent are green or yellow and that at 95 per cent is green, yellow or orange.

Meanwhile, products that combine a spam catch rate of 99.5% or higher with a lack of false positives, no more than 2.5% false positives among the newsletters and 'delivery speed colours' of green at 10 and 50 per cent and green or yellow at 95 and 98 per cent earn a VBSpam+ award.
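The two sets of award criteria can be expressed as a single check. This is a sketch under two assumptions: 'a lack of false positives' is read as zero false positives in the ham corpus, and VBSpam+ is only considered for products that already qualify for VBSpam certification. The function name is hypothetical.

```python
from typing import Mapping


def vbspam_award(final_score: float, sc_rate: float, ham_fps: int,
                 newsletter_fp_rate: float, colours: Mapping[int, str]) -> str:
    """Return "VBSpam+", "VBSpam" or "none".

    colours maps the percentiles 10, 50, 95 and 98 to a colour name;
    rates are percentages.
    """
    certified = (
        final_score >= 98
        and colours[10] in {"green", "yellow"}
        and colours[50] in {"green", "yellow"}
        and colours[95] in {"green", "yellow", "orange"}
    )
    if not certified:
        return "none"
    # Assumption: VBSpam+ is only considered for certified products.
    plus = (
        sc_rate >= 99.5
        and ham_fps == 0
        and newsletter_fp_rate <= 2.5
        and colours[10] == "green"
        and colours[50] == "green"
        and colours[95] in {"green", "yellow"}
        and colours[98] in {"green", "yellow"}
    )
    return "VBSpam+" if plus else "VBSpam"
```

With hypothetical all-green delivery colours, a product with a 99.62% catch rate and four ham false positives is certified but does not earn VBSpam+, while one with a 99.997% catch rate and no false positives earns both.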

The test ran for 16 days, from 12am on 3 September to 12am on 19 September 2016.

The test corpus consisted of 81,796 emails. Of these, 73,710 were spam: 64,384 were provided by Project Honey Pot and the remaining 9,326 by spamfeed.me, a product from Abusix. They were all relayed in real time, as were the 7,772 legitimate emails ('ham') and 314 newsletters.
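Because the two spam feeds differ greatly in size, a product's overall spam catch rate is, in effect, the average of its per-feed rates weighted by the number of spam emails each feed contributed. A small sketch, using the feed sizes given above (the function and constant names are our own):

```python
from typing import Mapping

# Corpus sizes in this test, as given in the text.
FEED_SIZES = {"Project Honey Pot": 64_384, "Abusix": 9_326}
HAM, NEWSLETTERS = 7_772, 314


def overall_sc_rate(feed_sc_rates: Mapping[str, float],
                    feed_sizes: Mapping[str, int] = FEED_SIZES) -> float:
    """Combine per-feed spam catch rates (per cent) into an overall rate,
    weighting each feed by the number of spam emails it contributed."""
    total = sum(feed_sizes.values())
    caught = sum(feed_sizes[feed] * feed_sc_rates[feed] / 100 for feed in feed_sizes)
    return 100 * caught / total
```

For example, the per-feed rates of 36.25% (Project Honey Pot) and 3.73% (Abusix) reported for one of the partial solutions combine to about 32.14%, its reported overall rate; small discrepancies can arise because the published per-feed rates are rounded.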

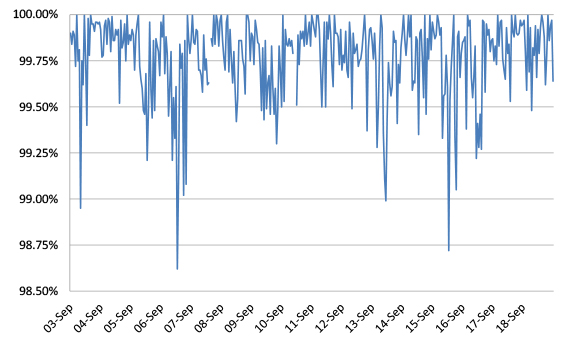

Figure 1 shows the catch rate of all full solutions throughout the test. To avoid the average being skewed by poorly performing products, the highest and lowest catch rates have been excluded for each hour.

Figure 1: Spam catch rate of all full solutions throughout the test period.

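The hourly averaging behind Figure 1, which drops the highest and lowest catch rate in each hour before averaging, is a simple trimmed mean. A minimal sketch, assuming a hypothetical data layout in which each hour index maps to the list of product catch rates for that hour:

```python
from statistics import mean
from typing import Dict, Mapping, Sequence


def hourly_trimmed_average(rates_by_hour: Mapping[int, Sequence[float]]) -> Dict[int, float]:
    """Average catch rate per hour after dropping that hour's single
    highest and single lowest product catch rate."""
    averages = {}
    for hour, rates in sorted(rates_by_hour.items()):
        if len(rates) < 3:
            raise ValueError(f"hour {hour}: need at least three products to trim")
        trimmed = sorted(rates)[1:-1]  # drop one minimum and one maximum
        averages[hour] = mean(trimmed)
    return averages
```

Dropping only the extremes keeps a single poorly performing product (such as one at 90% in an hour where the others are near 99%) from pulling the hourly average down.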

Compared to the last test, which ran in July, the average spam catch rate dropped by 0.02%, a change that is probably insignificant. The more important point is that catch rates remain lower than they were in the first months of the year.

The spam emails that proved most difficult to filter were, unsurprisingly, those that came from what are likely to be legitimate companies making promises that seem only a little too good to be true; they are probably a nuisance at worst. Still, none of these messages was missed by every participating product, which demonstrates that each of them contained something that could be used to block it.

There were more false positives than in the last test, though this was caused mainly by two outlying products.

Among the emails in our spam feed, 2,076, or a little under three per cent, contained a malicious attachment. (Spam is notoriously volatile. This ratio of malicious spam could be many times higher during different periods, or for other recipients.)

Spam has long been a delivery mechanism for malware. Initially, malicious executables were attached directly to the emails, which made them relatively easy to block. These days, however, the attachments are more often than not malware downloaders that come in a variety of formats, from JScript files to Office documents containing macros, the latter being a file format few organizations can afford to block.
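The difference between the two cases can be illustrated with a type-based attachment policy. In the sketch below, the extension lists, verdict names and example message are hypothetical illustrations, not any tested product's rules: executable and script types can simply be refused, while macro-capable Office formats can only be passed on for content inspection.

```python
import os
from email import policy
from email.message import EmailMessage
from email.parser import BytesParser
from typing import List, Tuple

# Hypothetical policy lists, for illustration only.
BLOCK_BY_TYPE = {".exe", ".scr", ".js", ".jse", ".vbs", ".wsf"}
NEEDS_CONTENT_SCAN = {".doc", ".docm", ".docx", ".xls", ".xlsm", ".xlsx"}


def triage_attachments(raw_message: bytes) -> List[Tuple[str, str]]:
    """Return (filename, verdict) for every named MIME part.

    "block": type can be refused outright; "scan": Office format that may
    carry macros; "other": anything else.
    """
    msg = BytesParser(policy=policy.default).parsebytes(raw_message)
    results = []
    for part in msg.walk():
        filename = part.get_filename()
        if not filename:
            continue  # body text and multipart containers have no filename
        ext = os.path.splitext(filename.lower())[1]
        if ext in BLOCK_BY_TYPE:
            verdict = "block"
        elif ext in NEEDS_CONTENT_SCAN:
            verdict = "scan"
        else:
            verdict = "other"
        results.append((filename, verdict))
    return results


def build_example_message() -> bytes:
    """Build a hypothetical message with three attachments."""
    msg = EmailMessage()
    msg["Subject"] = "Invoice"
    msg.set_content("Please see the attached invoice.")
    msg.add_attachment(b"var x = 1;", maintype="application",
                       subtype="octet-stream", filename="invoice.js")
    msg.add_attachment(b"PK", maintype="application",
                       subtype="vnd.ms-word.document.macroEnabled.12",
                       filename="Invoice.DOCM")
    msg.add_attachment(b"%PDF-1.4", maintype="application",
                       subtype="pdf", filename="terms.pdf")
    return msg.as_bytes()
```

Here `triage_attachments(build_example_message())` returns `[("invoice.js", "block"), ("Invoice.DOCM", "scan"), ("terms.pdf", "other")]`. A filename check like this is easily evaded (for instance by placing a script inside an archive, which this sketch does not unpack), which is one reason content inspection matters.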

The particular malware that ends up being downloaded often isn't determined by the downloader alone, and the same downloader could lead to different kinds of malware (and sometimes no malware at all) being downloaded. Still, most of the downloaders we saw were those commonly associated with ransomware, in particular Locky, once again confirming that this is the most important threat at the moment.

Thankfully, most of the emails were blocked by the spam filters in our tests. However, the block rates for messages containing malware were lower than for the overall spam corpus: while five full solutions blocked all 2,076 emails, four others missed significantly more than one per cent of the emails. For a small organization, this would have meant a few dozen malicious emails making it to users' inboxes. There is certainly room for improvement here.

Interestingly, while ransomware downloaders were among the malicious emails that some products missed, the email that proved most difficult to filter contained the Adwind RAT [1], a relatively rarely seen remote access trojan written in Java. This emphasizes the obvious point that malware is harder to block when it is seen less often.

Note: Vendors have not received feedback on malicious spam in particular, and thus have not been able to contest our claims. We therefore do not feel it would be fair to report on the performance of individual products against malicious spam on this occasion. Moreover, some developers may want to adjust their products' settings as malicious spam becomes more of a focus in these tests.

Two products, OnlyMyEmail and ESET, stood out for missing just two and four emails from the spam corpus respectively. Neither product blocked any legitimate emails, earning them each VBSpam+ awards. VBSpam+ awards were also earned by Bitdefender, Fortinet, IBM, Libra Esva and Trustwave. 'Clean sheets' – in which no legitimate emails were blocked either in the ham corpus or in the newsletter feed – were achieved by OnlyMyEmail and Libra Esva.

SC rate: 99.62%

FP rate: 0.05%

Final score: 99.26

Project Honey Pot SC rate: 99.57%

Abusix SC rate: 99.94%

Newsletters FP rate: 2.5%

| 10% | 50% | 95% | 98% |

SC rate: 99.86%

FP rate: 0.00%

Final score: 99.85

Project Honey Pot SC rate: 99.84%

Abusix SC rate: 99.98%

Newsletters FP rate: 0.3%

| 10% | 50% | 95% | 98% |

SC rate: 99.19%

FP rate: 0.00%

Final score: 99.17

Project Honey Pot SC rate: 99.07%

Abusix SC rate: 99.98%

Newsletters FP rate: 0.3%

| 10% | 50% | 95% | 98% |

SC rate: 99.99%

FP rate: 0.00%

Final score: 99.98

Project Honey Pot SC rate: 99.995%

Abusix SC rate: 99.989%

Newsletters FP rate: 0.3%

| 10% | 50% | 95% | 98% |

SC rate: 99.97%

FP rate: 0.00%

Final score: 99.94

Project Honey Pot SC rate: 99.97%

Abusix SC rate: 99.99%

Newsletters FP rate: 0.6%

| 10% | 50% | 95% | 98% |

SC rate: 99.55%

FP rate: 0.48%

Final score: 97.04

Project Honey Pot SC rate: 99.52%

Abusix SC rate: 99.73%

Newsletters FP rate: 3.8%

| 10% | 50% | 95% | 98% |

SC rate: 99.97%

FP rate: 0.00%

Final score: 99.95

Project Honey Pot SC rate: 99.97%

Abusix SC rate: 99.98%

Newsletters FP rate: 0.6%

| 10% | 50% | 95% | 98% |

SC rate: 99.70%

FP rate: 0.01%

Final score: 99.64

Project Honey Pot SC rate: 99.68%

Abusix SC rate: 99.85%

Newsletters FP rate: 0.0%

| 10% | 50% | 95% | 98% |

SC rate: 99.62%

FP rate: 0.01%

Final score: 99.56

Project Honey Pot SC rate: 99.59%

Abusix SC rate: 99.81%

Newsletters FP rate: 0.0%

| 10% | 50% | 95% | 98% |

SC rate: 99.96%

FP rate: 0.00%

Final score: 99.96

Project Honey Pot SC rate: 99.96%

Abusix SC rate: 99.99%

Newsletters FP rate: 0.0%

| 10% | 50% | 95% | 98% |

SC rate: 99.997%

FP rate: 0.00%

Final score: 99.997

Project Honey Pot SC rate: 99.997%

Abusix SC rate: 100.00%

Newsletters FP rate: 0.0%

| 10% | 50% | 95% | 98% |

SC rate: 99.90%

FP rate: 0.26%

Final score: 98.44

Project Honey Pot SC rate: 99.88%

Abusix SC rate: 99.99%

Newsletters FP rate: 4.5%

| 10% | 50% | 95% | 98% |

SC rate: 99.51%

FP rate: 0.10%

Final score: 99.00

Project Honey Pot SC rate: 99.46%

Abusix SC rate: 99.85%

Newsletters FP rate: 0.0%

| 10% | 50% | 95% | 98% |

SC rate: 98.59%

FP rate: 0.00%

Final score: 98.57

Project Honey Pot SC rate: 98.47%

Abusix SC rate: 99.45%

Newsletters FP rate: 0.6%

| 10% | 50% | 95% | 98% |

SC rate: 99.88%

FP rate: 0.00%

Final score: 99.79

Project Honey Pot SC rate: 99.86%

Abusix SC rate: 99.99%

Newsletters FP rate: 2.2%

| 10% | 50% | 95% | 98% |

SC rate: 99.17%

FP rate: 0.00%

Final score: 99.14

Project Honey Pot SC rate: 99.05%

Abusix SC rate: 99.99%

Newsletters FP rate: 0.6%

| 10% | 50% | 95% | 98% |

SC rate: 99.92%

FP rate: 0.03%

Final score: 99.70

Project Honey Pot SC rate: 99.91%

Abusix SC rate: 99.96%

Newsletters FP rate: 2.2%

| 10% | 50% | 95% | 98% |

The products listed below are 'partial solutions', which means they only have access to part of the emails and/or SMTP transaction, and are intended to be used as part of a full spam solution. As such, their performance should neither be compared with those of the full solutions listed previously, nor necessarily with each other's.

New to the test this month is URIBL, a DNS-based blocklist which, as its name suggests, is used to check URLs (or, more precisely, domain names) found in the body of emails. It is thus comparable with Spamhaus DBL, though it should be noted that no two blocklists have the same use case.

Its spam catch rate was close to 73%, which is certainly impressive, though this may in part have been a consequence of its use of a 'grey' list containing domains found in unsolicited, but not necessarily illegal, bulk emails, which the product itself warns could cause false positives [2]. Indeed, it 'blocked' 30 per cent of the emails in our newsletter feed.
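A URI blocklist is queried over ordinary DNS: the filter extracts domain names from the links in a message, prepends each one to the blocklist's zone, and checks whether an address comes back. The sketch below illustrates this under stated assumptions: the zone name `multi.uribl.com` and the bit values for the black, grey and red lists reflect our understanding of URIBL's conventions and should be checked against its documentation, and the two-label domain extraction is a deliberate simplification.

```python
import ipaddress
import re
import socket
from typing import Set

# Assumed zone and return-code bits; verify against the list's documentation.
URIBL_ZONE = "multi.uribl.com"
LIST_BITS = {2: "black", 4: "grey", 8: "red"}

URL_HOST = re.compile(r"https?://([a-z0-9.-]+)", re.IGNORECASE)
LOOPBACK = ipaddress.IPv4Network("127.0.0.0/8")


def candidate_domains(body: str) -> Set[str]:
    """Extract domain names from URLs in an email body.

    Simplification: keeps the last two labels of each host name; a real
    filter would use the Public Suffix List (e.g. for .co.uk domains).
    """
    domains = set()
    for host in URL_HOST.findall(body):
        labels = host.lower().strip(".").split(".")
        if len(labels) >= 2:
            domains.add(".".join(labels[-2:]))
    return domains


def query_name(domain: str, zone: str = URIBL_ZONE) -> str:
    """The blocklist is queried by prepending the domain to its zone."""
    return f"{domain}.{zone}"


def decode_listing(answer: str) -> Set[str]:
    """Translate an A-record answer into the sub-lists that flagged the domain."""
    ip = ipaddress.IPv4Address(answer)
    if ip not in LOOPBACK:
        return set()
    last_octet = ip.packed[-1]
    return {name for bit, name in LIST_BITS.items() if last_octet & bit}


def lookup(domain: str) -> Set[str]:
    """Live DNS lookup; a resolution failure (e.g. NXDOMAIN) means 'not listed'."""
    try:
        return decode_listing(socket.gethostbyname(query_name(domain)))
    except socket.gaierror:
        return set()
```

A filter that wanted to avoid the newsletter false positives described above could act only on the "black" and "red" results and ignore "grey", presumably at some cost in spam catch rate.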

SC rate: 94.41%

FP rate: 0.00%

Final score: 94.34

Project Honey Pot SC rate: 93.86%

Abusix SC rate: 98.19%

Newsletters FP rate: 1.6%

SC rate: 32.14%

FP rate: 0.00%

Final score: 32.14

Project Honey Pot SC rate: 36.25%

Abusix SC rate: 3.73%

Newsletters FP rate: 0.0%

SC rate: 91.52%

FP rate: 0.00%

Final score: 91.52

Project Honey Pot SC rate: 90.36%

Abusix SC rate: 99.56%

Newsletters FP rate: 0.0%

SC rate: 93.68%

FP rate: 0.00%

Final score: 93.68

Project Honey Pot SC rate: 92.83%

Abusix SC rate: 99.56%

Newsletters FP rate: 0.0%

SC rate: 72.91%

FP rate: 0.00%

Final score: 72.18

Project Honey Pot SC rate: 73.36%

Abusix SC rate: 69.80%

Newsletters FP rate: 30.6%

Spam remains a well-mitigated security problem. However, as this test clearly shows, there is still room for improvement when it comes to malicious attachments, especially since this is an area where spam is far more than a nuisance.

We are already looking forward to the next test – to be published mid-December – when we will report in more detail on this aspect. Those interested in submitting a product should contact [email protected].

[1] https://virustotal.com/en/file/504da9f2866c1b78c71237a4e190354340c0801fb34a790baa4b00dedcd46475/analysis/.