2015-12-01

Abstract

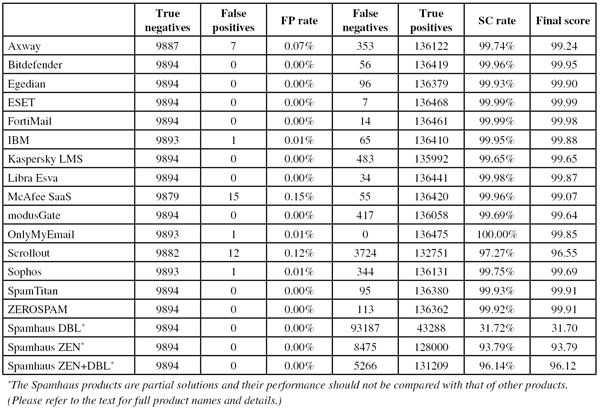

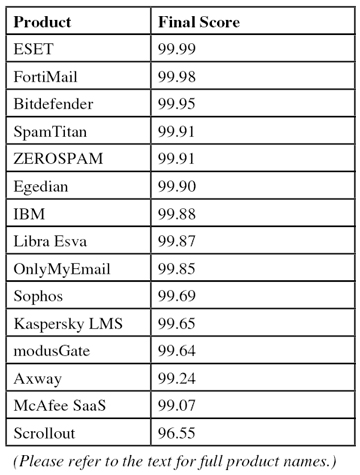

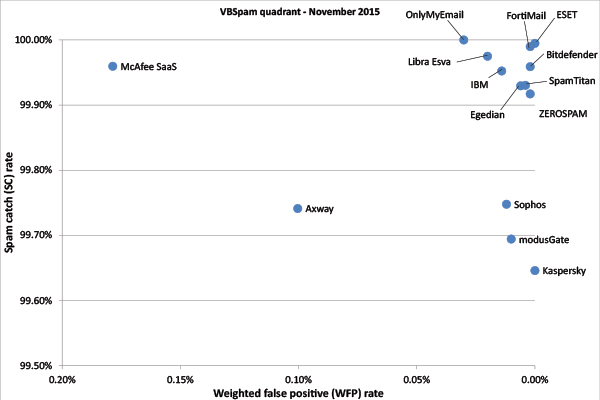

Fifteen full solutions and three DNS-based blacklists lined up on the test bench for this VBSpam test and all but one of the full solutions reached the performance level required to earn a VBSpam award. Perhaps more impressively, eight of them achieved a VBSpam+ award. Martijn Grooten reports.

Copyright © 2015 Virus Bulletin

In a typical organization, end-users can’t run FTP or SSH servers on their desktop machines, they are prevented from playing most computer-based games, and peer-to-peer software is blocked. While security isn’t always the motivation for these policies, they certainly reduce the number of attack vectors.

However, few organizations are able to block the two most prominent vectors: web and email traffic.

There are many solutions that aim to block malicious web traffic, and we are excited to announce that the very first VBWeb test, in which the accuracy of such products will be measured, will start shortly after the publication of this report. Meanwhile, in this report we focus on solutions whose aim is to keep the email flow free from malicious and irrelevant emails – which, for most organizations, still form the majority of the email traffic they receive.

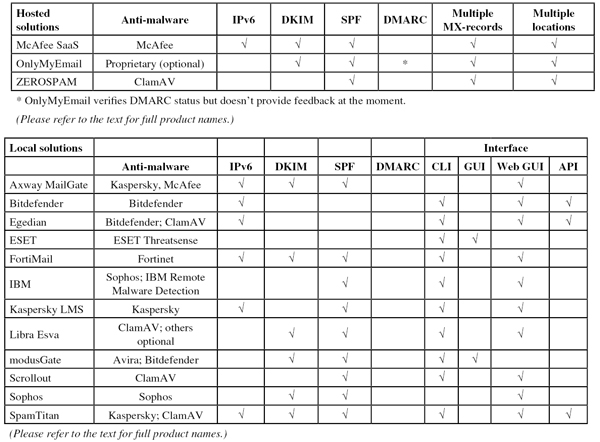

The VBSpam test methodology can be found at http://www.virusbtn.com/vbspam/methodology/. As usual, emails were sent to the products in parallel and in real time, and products were given the option to block email pre-DATA (that is, based on the SMTP envelope and before the actual email was sent). However, on this occasion no products chose to make use of this option.

For those products running on our equipment, we use Dell PowerEdge machines. As different products have different hardware requirements (not to mention those running on their own hardware, or those running in the cloud), there is little point comparing the memory, processing power or hardware the products were provided with; we followed the developers’ requirements and note that the amount of email we receive is representative of that received by a small organization.

To compare the products, we calculate a ‘final score’, which is defined as the spam catch (SC) rate minus five times the weighted false positive (WFP) rate. The WFP rate is defined as the false positive rate of the ham and newsletter corpora taken together, with emails from the latter corpus having a weight of 0.2:

WFP rate = (#false positives + 0.2 × min(#newsletter false positives, 0.2 × #newsletters)) / (#ham + 0.2 × #newsletters)

Final score = SC - (5 × WFP)
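
As a rough illustration, the two formulas above can be written as a short Python sketch. The function and constant names are hypothetical, and the false positive counts in the usage lines are made-up inputs rather than results from this test; only the corpus sizes (9,894 ham emails and 358 newsletters) come from this report. All rates are expressed as percentages.

```python
NEWSLETTER_WEIGHT = 0.2  # weight given to newsletters in the WFP rate
NEWSLETTER_FP_CAP = 0.2  # newsletter false positives count up to this share of the corpus


def wfp_rate(ham_fps, newsletter_fps, n_ham, n_newsletters):
    """Weighted false positive rate, as a percentage."""
    capped = min(newsletter_fps, NEWSLETTER_FP_CAP * n_newsletters)
    weighted_fps = ham_fps + NEWSLETTER_WEIGHT * capped
    weighted_total = n_ham + NEWSLETTER_WEIGHT * n_newsletters
    return 100.0 * weighted_fps / weighted_total


def final_score(sc_rate, wfp):
    """Spam catch rate minus five times the WFP rate (both in per cent)."""
    return sc_rate - 5 * wfp


# Hypothetical product: 7 ham and 15 newsletter false positives, 99.74% catch rate.
example_wfp = wfp_rate(ham_fps=7, newsletter_fps=15, n_ham=9894, n_newsletters=358)
example_score = final_score(99.74, example_wfp)
print(round(example_wfp, 3), round(example_score, 2))  # 0.1 99.24
```

With these inputs a single ham false positive costs the product roughly 0.05 points, while a single newsletter false positive costs about a fifth of that.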

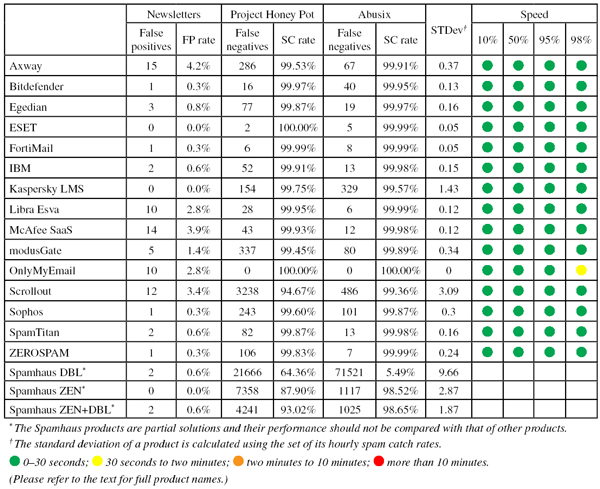

In the last test, extra criteria for the VBSpam award were added, based on the speed of delivery of emails in the ham corpus. For each product, we measured how long it took to deliver emails from the ham corpus (excluding false positives) and, after ordering these emails by the time it took to deliver them, we colour-coded the emails at the 10th, 50th, 95th and 98th percentiles: green for up to 30 seconds; yellow for 30 seconds to two minutes; orange for two to ten minutes; and red for more than ten minutes.
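
The colour-coding can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the report does not say how percentiles are computed or which colour a delivery time exactly on a boundary receives, so the nearest-rank percentile method and the inclusive upper bounds below are assumptions, as are all function names and the sample delivery times.

```python
import math


def speed_colour(seconds):
    """Map a delivery time in seconds to a colour (upper bounds assumed inclusive)."""
    if seconds <= 30:
        return "green"
    if seconds <= 120:
        return "yellow"
    if seconds <= 600:
        return "orange"
    return "red"


def percentile(ordered, p):
    """Nearest-rank percentile of an already sorted list (an assumed method)."""
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]


def speed_colours(delivery_times):
    """Colour at the 10th, 50th, 95th and 98th percentiles of delivery time."""
    ordered = sorted(delivery_times)
    return {p: speed_colour(percentile(ordered, p)) for p in (10, 50, 95, 98)}


# Made-up delivery times (seconds) for 100 ham emails.
sample_times = [5] * 90 + [45] * 6 + [200] * 3 + [900]
sample_colours = speed_colours(sample_times)
print(sample_colours)
```

For the sample above, the 10th and 50th percentiles are green, the 95th is yellow and the 98th is orange.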

To qualify for a VBSpam award, the value of the final score must be at least 98. In addition, the speed of delivery at 10 and 50 percent must fall into the green or yellow categories, and at 95 percent it must fall within the green, yellow or orange categories. To earn a VBSpam+ award, products must combine a spam catch rate of 99.5% or higher with a lack of false positives and no more than 2.5% false positives among the newsletters, and in addition, the speed of delivery at 10 and 50 percent must fall into the green category, while at 95 and 98 percent it must fall into the green or yellow category.
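
The award criteria above can be summarised as a small decision function. The Python below is a sketch rather than the test's actual tooling: the names are hypothetical, the inputs in the usage lines are invented, and it assumes that a product is only considered for a VBSpam+ award once it meets the standard VBSpam criteria, which the text does not state explicitly.

```python
GREEN, YELLOW, ORANGE = "green", "yellow", "orange"


def meets_vbspam(final_score, colours):
    """Standard award: final score of at least 98 plus the speed criteria."""
    return (final_score >= 98
            and colours[10] in (GREEN, YELLOW)
            and colours[50] in (GREEN, YELLOW)
            and colours[95] in (GREEN, YELLOW, ORANGE))


def meets_vbspam_plus(sc_rate, ham_fps, newsletter_fp_rate, colours):
    """VBSpam+ criteria; rates are percentages, ham_fps is a count."""
    return (sc_rate >= 99.5
            and ham_fps == 0
            and newsletter_fp_rate <= 2.5
            and colours[10] == GREEN
            and colours[50] == GREEN
            and colours[95] in (GREEN, YELLOW)
            and colours[98] in (GREEN, YELLOW))


def award(final_score, sc_rate, ham_fps, newsletter_fp_rate, colours):
    """Return 'VBSpam+', 'VBSpam' or None (assumes VBSpam+ implies VBSpam)."""
    if not meets_vbspam(final_score, colours):
        return None
    if meets_vbspam_plus(sc_rate, ham_fps, newsletter_fp_rate, colours):
        return "VBSpam+"
    return "VBSpam"


# Invented inputs: every speed marker green, no ham false positives.
all_green = {10: GREEN, 50: GREEN, 95: GREEN, 98: GREEN}
print(award(99.90, 99.95, 0, 0.3, all_green))  # VBSpam+
print(award(99.87, 99.98, 0, 2.8, all_green))  # VBSpam
```

The second call shows how a newsletter false positive rate above 2.5% alone is enough to turn a VBSpam+ into a standard VBSpam award.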

The test started on Saturday 24 October at 12am and finished 16 days later, on Monday 9 November at 12am. There were no significant issues affecting the test.

The test corpus consisted of 146,727 emails. 136,475 of these emails were spam, 60,799 of which were provided by Project Honey Pot, with the remaining 75,676 spam emails provided by spamfeed.me, a product from Abusix. They were all relayed in real time, as were the 9,894 legitimate emails (‘ham’) and 358 newsletters.

Figure 1 shows the catch rate of all full solutions throughout the test. To avoid the average being skewed by poorly performing products, the highest and lowest catch rates have been excluded for each hour. Once again this month we see high catch rates – indeed, ignoring one outlier, spam catch rates remained high throughout.

The average catch rate dropped below 99% only once – in this case, it was caused by a number of emails that contained a few lines of text seemingly randomly grabbed from Wikipedia articles and which lacked a payload.
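
The hourly average plotted in Figure 1 excludes the best and worst product for each hour. A minimal Python sketch of that kind of trimmed average follows; the function names and the sample catch rates are hypothetical, and the exact way the chart data was produced is not described in this report.

```python
def trimmed_average(rates):
    """Mean of the catch rates after dropping one highest and one lowest value."""
    if len(rates) < 3:
        raise ValueError("need at least three products to trim both extremes")
    trimmed = sorted(rates)[1:-1]
    return sum(trimmed) / len(trimmed)


def hourly_trimmed_averages(rates_by_hour):
    """Apply the trimmed average to each hour's list of per-product catch rates."""
    return {hour: trimmed_average(rates) for hour, rates in rates_by_hour.items()}


# Made-up catch rates (per cent) for five products over two hours.
sample = {
    0: [99.9, 99.8, 100.0, 99.7, 92.0],
    1: [90.0, 99.0, 100.0, 99.5, 99.5],
}
averages = hourly_trimmed_averages(sample)
print({hour: round(avg, 2) for hour, avg in averages.items()})
```

In the sample, the single product that dropped to 92% in the first hour has no effect on that hour's average, which is the point of excluding the extremes.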

It is worth noting that all but one participating solution saw their ‘speed colours’ in green – and for the one solution that fell outside the green category, delivery speeds had only just nudged into the yellow category. This shows that, while spam filters could potentially improve their performance significantly by delaying emails for an unreasonable amount of time, they don’t do so in our test – and are unlikely to do so in a real environment either.

It is easy to overstate the importance of anti-spam legislation. Most spam breaks laws in many ways other than the fact that it is unsolicited email in bulk, and if law enforcement could trace the spammers and those providing infrastructure to them, they probably wouldn’t need anti-spam laws to prosecute them.

However, it is also easy to understate the importance of anti-spam legislation. If anything, it means a website on which you registered once isn’t allowed to email you every day with its latest offers – or at the very least, you are able to opt out of receiving such emails.

That makes US political email an interesting case study. Such emails are exempt from the country’s CAN-SPAM Act, a law that since its inception has been criticized for not being strong enough against spammers.

Much as most anti-spam experts believe the exemption is a very bad idea (I certainly think it is), and much as this has led to a lot of unwanted US political email, there are certainly many people who like to read daily updates from their favourite candidate for the 2016 presidential elections (even if the emails do quite often beg for money). At the same time, for many other recipients the emails will be unwanted and unsolicited and those people, quite rightly, will consider them spam.

We have subscribed to many political mailings in the past, and on this occasion we added mailings from 15 US presidential candidates to our newsletter feed. Restricting the feed to three emails per subscription, we ended up with 23 emails from 10 different candidates. Interestingly, the only two candidates who asked us to confirm the subscription (George Pataki and John Kasich) didn’t send any emails during the test period.

While 23 is a rather small corpus on which to base any firm conclusions, these 23 emails were seven times more likely than other emails in the newsletter corpus to be blocked by a participating spam filter. (It is worth noting that the fact that the emails were new to the feed might have contributed to their high block rate.) The average presidential campaign email had a ten per cent probability of a spam filter blocking it, which is high in a context in which many products are set to avoid false positives.

The most blocked email was one from candidate Marco Rubio, asking his alleged supporters to participate in a survey. Rick Santorum was the only politician whose emails (he sent two) weren’t blocked by any full solution.

Following feedback from a number of participants, who said that in some cases their customers explicitly ask them to block emails of this type, we decided to exclude these emails from future tests.

In the text that follows, unless otherwise specified, ‘ham’ or ‘legitimate email’ refers to email in the ham corpus – which excludes the newsletters – and a ‘false positive’ refers to a message in that corpus that has been erroneously marked by a product as spam.

SC rate: 99.74%

FP rate: 0.07%

Final score: 99.24

Project Honey Pot SC rate: 99.53%

Abusix SC rate: 99.91%

Newsletters FP rate: 4.2%

Axway’s MailGate virtual appliance performed slightly worse in all three categories than it did in the last test, which is always a little sad to see, but at the same time this could well just be a case of bad luck. Of course, ‘slightly worse’ than pretty good is still good – and the product easily earns its 11th VBSpam award.

SC rate: 99.96%

FP rate: 0.00%

Final score: 99.95

Project Honey Pot SC rate: 99.97%

Abusix SC rate: 99.95%

Newsletters FP rate: 0.3%

This is the 40th VBSpam test, the 40th test in which Bitdefender has participated, and the 40th test in which the product has achieved a VBSpam award. In fact, with a spam catch rate of 99.96%, no false positives and just one blocked newsletter (from US presidential candidate Bernie Sanders), it once again achieved a VBSpam+ award – its 16th in the last 18 tests.

SC rate: 99.93%

FP rate: 0.00%

Final score: 99.90

Project Honey Pot SC rate: 99.87%

Abusix SC rate: 99.97%

Newsletters FP rate: 0.8%

Egedian Mail Security, a virtual appliance which first joined the VBSpam tests in May last year, has been improving steadily, reaching an impressive spam catch rate of more than 99.90% in the last two tests. In this test, it not only repeated that performance, but combined it with a lack of false positives, thus earning the product its first VBSpam+ award.

SC rate: 99.99%

FP rate: 0.00%

Final score: 99.99

Project Honey Pot SC rate: 100.00%

Abusix SC rate: 99.99%

Newsletters FP rate: 0.0%

Speed: 10%: green; 50%: green; 95%: green; 98%: green

ESET took a short break and decided to sit out the last test. On its return, the product’s ‘speed colours’ are all green, showing that emails are returned instantly by the product. More important, though, is the fact that the product not only managed a clean sheet in both the ham and newsletter corpora, but it also missed just seven spam emails – fewer than all but one other product. Thanks to this outstanding performance, ESET finishes with the highest final score of all products, and a very well-deserved VBSpam+ award.

SC rate: 99.99%

FP rate: 0.00%

Final score: 99.98

Project Honey Pot SC rate: 99.99%

Abusix SC rate: 99.99%

Newsletters FP rate: 0.3%

For FortiMail it was just one political newsletter (from Hillary Clinton) that prevented it from getting a clean sheet in both legitimate corpora. But that’s really a tiny detail in what was otherwise an impressive performance: the appliance missed just 14 spam emails and ends up with its seventh VBSpam+ award and the second highest final score.

SC rate: 99.95%

FP rate: 0.01%

Final score: 99.88

Project Honey Pot SC rate: 99.91%

Abusix SC rate: 99.98%

Newsletters FP rate: 0.6%

This month, IBM’s Lotus Protector for Mail Security saw its spam catch rate increase to 99.95%, which means that the product missed just one in 2,000 spam emails. Apart from that, it blocked just two newsletters and a single email from the ham corpus – the latter meaning that it missed out on a VBSpam+ award this time. Another VBSpam award is still well deserved though.

SC rate: 99.65%

FP rate: 0.00%

Final score: 99.65

Project Honey Pot SC rate: 99.75%

Abusix SC rate: 99.57%

Newsletters FP rate: 0.0%

Speed: 10%: green; 50%: green; 95%: green; 98%: green

In the last test, we found that Kaspersky’s Linux Mail Security product returned a small minority of emails with a small delay, but this has been rectified in this test and all four speed markers are in green.

It would be tempting to see this as a reason for the drop in the spam catch rate, but that probably isn’t the case: most of the spam missed was on the last Friday of the test when the product had issues with the spam campaign that incorporated random Wikipedia sentences. Still, even with this glitch, the catch rate was well above 99.5% – and with a completely clean sheet in the ham and newsletter corpora, the product earns a VBSpam+ award.

SC rate: 99.98%

FP rate: 0.00%

Final score: 99.87

Project Honey Pot SC rate: 99.95%

Abusix SC rate: 99.99%

Newsletters FP rate: 2.8%

Neither the lack of false positives in the ham corpus nor the very high spam catch rate – the product missed just 34 spam emails – will come as a surprise to anyone who has been following Libra Esva in the VBSpam tests. Such a performance would usually have been enough to earn the product a VBSpam+ award, but unfortunately it blocked ten emails in the newsletter corpus (none of which were from politicians), pushing the corresponding FP rate above the threshold for the VBSpam+ award. Nevertheless, the product’s developers end the year with a standard VBSpam award.

SC rate: 99.96%

FP rate: 0.15%

Final score: 99.07

Project Honey Pot SC rate: 99.93%

Abusix SC rate: 99.98%

Newsletters FP rate: 3.9%

Last month, Intel Security announced its intention to discontinue its email security solutions, including McAfee SaaS Email Protection. For that reason, this is the product’s final appearance in the public VBSpam tests, ending more than six years of working together with the people at Intel Security/McAfee. (More details can be found at the following resource page provided by Intel Security: http://mcaf.ee/y6j1tq.) Existing customers will continue to receive support for the next few years and we will continue to work with the product’s developers to help them ensure this happens as smoothly as possible, but this will be outside the public view.

McAfee ends its participation in our tests with another VBSpam award – the product’s 21st, not counting those awarded to two other previously tested McAfee products. The spam catch rate of 99.96% was very good, even by the product’s standards, although the relatively high false positive rates are a minor concern. Even without awards to be won, the product’s developers will no doubt be working hard to improve on this aspect.

SC rate: 99.69%

FP rate: 0.00%

Final score: 99.64

Project Honey Pot SC rate: 99.45%

Abusix SC rate: 99.89%

Newsletters FP rate: 1.4%

Speed: 10%: green; 50%: green; 95%: green; 98%: green

Those with a good memory may remember Vircom’s modusGate from the early years of the VBSpam tests – it last participated in a VBSpam test in July 2010.

Now the product is back with a vengeance. With an international email feed like the one used in our tests, false positives aren’t as easy to avoid as one might think, especially for what is essentially a new participant in the tests. modusGate did exactly that though, managing to avoid misclassifying any of the legitimate emails in the ham feed. This, combined with a good spam catch rate, just a few newsletter false positives and a straight run of green ‘lights’ in the speed measures, means that modusGate easily qualifies for a VBSpam+ award on its return.

SC rate: 100.00%

FP rate: 0.01%

Final score: 99.85

Project Honey Pot SC rate: 100.00%

Abusix SC rate: 100.00%

Newsletters FP rate: 2.8%

Blocking the first 99% of spam isn’t the difficult part, and nor, necessarily, is blocking the next 0.5% (though that is where avoiding false positives becomes tricky). The really difficult part is blocking those spam emails that give filters very few reasons to block them. Yet in this test, OnlyMyEmail did just that: it blocked all of the more than 136,000 emails in the spam corpus.

Against that stood only one false positive – which meant that, unfortunately, the product missed out on a VBSpam+ award this time. Of the ten newsletters blocked by the Michigan-based solution, seven were from US presidential candidates, so this was really a one-off. All in all, it was yet another impressive performance from OnlyMyEmail.

SC rate: 97.27%

FP rate: 0.12%

Final score: 96.55

Project Honey Pot SC rate: 94.67%

Abusix SC rate: 99.36%

Newsletters FP rate: 3.4%

Scrollout F1 – a free and open-source solution – started the test well, but after the first five days its performance dropped due to a large amount of missed dating spam, and it never really recovered. It ended the test with a spam catch rate of 97.27% and, due to some false positives in both the ham and newsletter corpora, a final score of 96.55, well below the 98 required for an award. We thus had to deny Scrollout a VBSpam award this month.

Note: after completion of the test, Scrollout’s developers informed us that many of the missed spam emails were sent in a way that makes them indistinguishable from actual missed spam in our set-up. In a real-world set-up, users would have been able to distinguish between these emails.

SC rate: 99.75%

FP rate: 0.01%

Final score: 99.69

Project Honey Pot SC rate: 99.60%

Abusix SC rate: 99.87%

Newsletters FP rate: 0.3%

Sophos has traditionally had low false positive rates, but in this test it erroneously blocked one email in each of the two corpora. It’s a shame as with a very decent catch rate – the product missed just 1 in 400 spam emails, with a noticeable amount of spam in German among those missed – the product would otherwise easily have achieved a VBSpam+ award. Another VBSpam award, the product’s 35th in a row, is still something to be pleased with though.

SC rate: 99.93%

FP rate: 0.00%

Final score: 99.91

Project Honey Pot SC rate: 99.87%

Abusix SC rate: 99.98%

Newsletters FP rate: 0.6%

SpamTitan’s spam catch rate was actually a little lower on this occasion than it was in the last test, but at 99.93% it remained very high – and certainly higher than the average for this test. What didn’t change was the number of false positives: once again there weren’t any. Of the newsletters just two were blocked – both from the same sender – and thus SpamTitan not only achieves its 36th VBSpam award, but with the fourth highest final score earns a VBSpam+ for the eighth time.

SC rate: 99.92%

FP rate: 0.00%

Final score: 99.91

Project Honey Pot SC rate: 99.83%

Abusix SC rate: 99.99%

Newsletters FP rate: 0.3%

ZEROSPAM’s developers chose to sit out the last test, but the product returns quite impressively. Not only did it achieve a 99.92% spam catch rate, it also didn’t block any legitimate emails and blocked just a single newsletter – from US presidential candidate Ted Cruz. This is the best performance thus far for the Canadian hosted solution, which easily earns another VBSpam+ award for its efforts.

SC rate: 31.72%

FP rate: 0.00%

Final score: 31.70

Project Honey Pot SC rate: 64.36%

Abusix SC rate: 5.49%

Newsletters FP rate: 0.6%

SC rate: 93.79%

FP rate: 0.00%

Final score: 93.79

Project Honey Pot SC rate: 87.90%

Abusix SC rate: 98.52%

Newsletters FP rate: 0.0%

SC rate: 96.14%

FP rate: 0.00%

Final score: 96.12

Project Honey Pot SC rate: 93.02%

Abusix SC rate: 98.65%

Newsletters FP rate: 0.6%

The single email sent by Republican candidate Ted Cruz during the test period was sent from an email service provider that had found one of its domains on Spamhaus’s DBL blacklist. That’s pretty bad for said ESP, but in our test it meant a newsletter false positive for the DBL as the email was legitimate after all.

Apart from this and another newsletter false positive for the DBL, Spamhaus stood out in this test for blocking more than 96% of spam emails using just IP addresses and domains; hence its categorization as a partial solution. It finishes what was already a successful year for the blacklist provider on a high note.

The 40th VBSpam test was a successful one for most products, with the one-off inclusion of US presidential emails providing an interesting perspective. We are already looking ahead though: our growing team has plans for 2016 that should make for some good reading material, and of course for some good ways to measure products’ performance.

The next VBSpam test will run in December 2015 and January 2016, with the results scheduled for publication in January. Developers who are interested in submitting products, or who want to know more about how Virus Bulletin can help their company measure or improve its spam filter performance, should email [email protected].