2015-09-08

Abstract

Martijn Grooten reports on what proved to be a good month for products taking part in the VBSpam test, with 14 VBSpam awards among the 15 participating solutions, and five of them achieving a VBSpam+ award.

Copyright © 2015 Virus Bulletin

Times are exciting for Virus Bulletin in general and for the VBSpam test in particular. A new member has just joined the team to help run the VBSpam tests, we’re building a new environment on which to run the tests, and we’re also working on an extra vector to be tested.

Thanks to the combined effects of a holiday and the ongoing preparations for the annual VB Conference, this report appears later than planned – though we have already published a summary of the results. Alongside a test summary, however, we believe it is important to detail the individual performance of each product, which is what you will find in this report.

As already mentioned in the summary, the overall performance in this test was good, resulting in 14 VBSpam awards among the 15 participating solutions, with five of them achieving a VBSpam+ award.

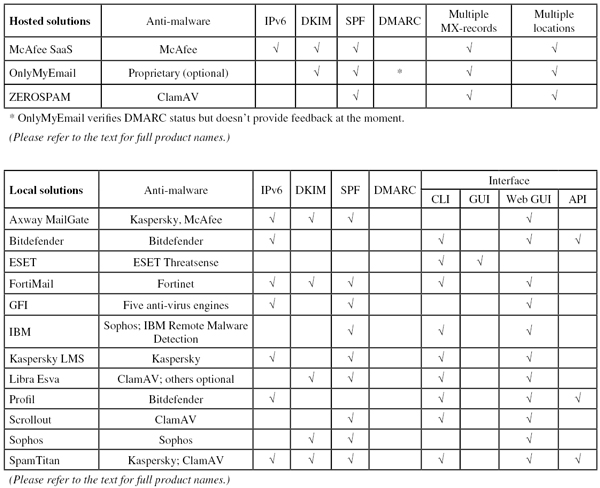

The VBSpam test methodology can be found at http://www.virusbtn.com/vbspam/methodology/. As usual, emails were sent to the products in parallel and in real time, and products were given the option to block email pre-DATA (that is, based on the SMTP envelope and before the actual email was sent). However, on this occasion no products chose to make use of this option.

For those products running on our equipment, we use Dell PowerEdge machines. As different products have different hardware requirements – not to mention those running on their own hardware, or those running in the cloud – there is little point comparing the memory, processing power or hardware the products were provided with; we followed the developers’ requirements and note that the amount of email we receive is representative of that received by a small organization.

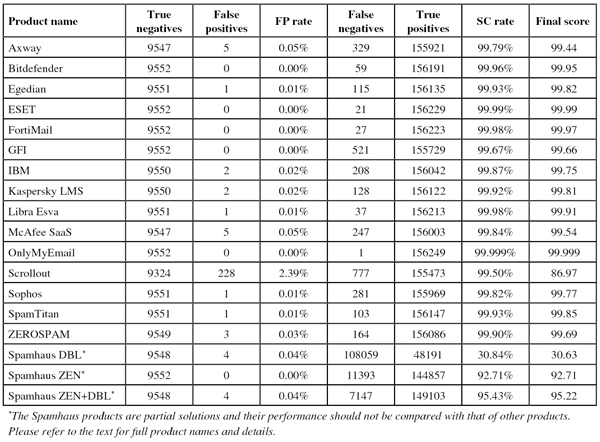

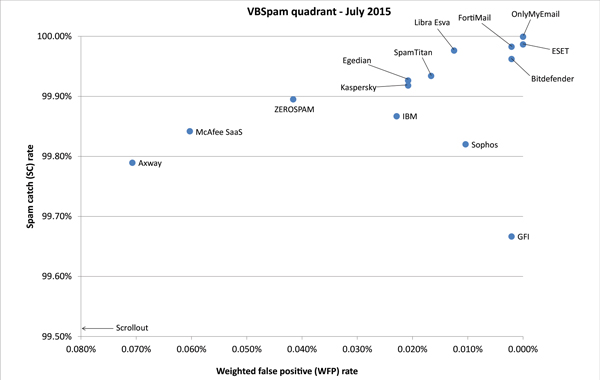

To compare the products, we calculate a ‘final score’, which is defined as the spam catch (SC) rate minus five times the weighted false positive (WFP) rate. The WFP rate is defined as the false positive rate of the ham and newsletter corpora taken together, with emails from the latter corpus having a weight of 0.2:

WFP rate = (#false positives + 0.2 * min(#newsletter false positives, 0.2 * #newsletters)) / (#ham + 0.2 * #newsletters)

Products earn VBSpam certification if the value of the final score is at least 98:

SC - (5 x WFP) ≥ 98

Meanwhile, products that combine a spam catch rate of 99.5% or higher with a lack of false positives and no more than 2.5% false positives among the newsletters earn a VBSpam+ award.
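The scoring rules above can be sketched in a few lines of Python. This is only an illustration of the formulas as stated in this report, not the code used to run the test; the function names and the idea of passing raw counts are assumptions made for the example.

```python
def wfp_rate(ham_fps, newsletter_fps, ham_total, newsletter_total,
             weight=0.2, cap=0.2):
    """Weighted false positive rate, returned as a percentage.

    Newsletter false positives are capped at `cap` times the size of the
    newsletter corpus and then counted with a weight of `weight`.
    """
    capped_newsletter_fps = min(newsletter_fps, cap * newsletter_total)
    weighted_fps = ham_fps + weight * capped_newsletter_fps
    weighted_total = ham_total + weight * newsletter_total
    return 100.0 * weighted_fps / weighted_total


def final_score(sc_rate, ham_fps, newsletter_fps, ham_total, newsletter_total):
    """Final score: spam catch rate (in percent) minus five times the WFP rate."""
    return sc_rate - 5 * wfp_rate(ham_fps, newsletter_fps,
                                  ham_total, newsletter_total)


def award(sc_rate, ham_fps, newsletter_fps, ham_total, newsletter_total):
    """Return 'VBSpam+', 'VBSpam' or 'none' according to the stated thresholds."""
    score = final_score(sc_rate, ham_fps, newsletter_fps,
                        ham_total, newsletter_total)
    if score < 98:
        return "none"
    newsletter_fp_rate = 100.0 * newsletter_fps / newsletter_total
    if sc_rate >= 99.5 and ham_fps == 0 and newsletter_fp_rate <= 2.5:
        return "VBSpam+"
    return "VBSpam"


# Illustrative input: 9,552 ham emails and 327 newsletters (this test's corpus),
# a 99.79% catch rate, five ham false positives, and nine newsletter false
# positives. The newsletter count is an assumption derived from a rounded
# percentage, not a figure given in the report.
print(round(final_score(99.79, 5, 9, 9552, 327), 2))  # 99.44
print(award(99.79, 5, 9, 9552, 327))                  # VBSpam
```

Because the inputs are reconstructed from rounded percentages, small differences from the published final scores are to be expected.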

The test started on Saturday 23 June at 12am and finished 16 days later, on Monday 8 July at 12am. On this occasion there were no serious issues affecting the test.

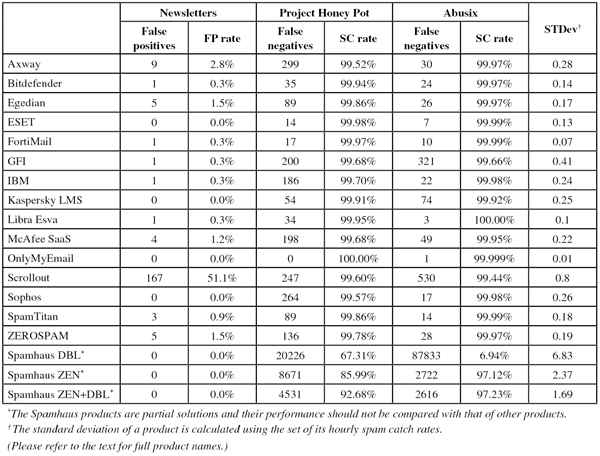

The test corpus consisted of 166,129 emails. 156,250 of these emails were spam, 61,870 of which were provided by Project Honey Pot, with the remaining 94,380 spam emails provided by spamfeed.me, a product from Abusix. They were all relayed in real time, as were the 9,552 legitimate emails (‘ham’) and 327 newsletters.

Figure 1 shows the catch rate of all full solutions throughout the test. To avoid the average being skewed by poorly performing products, the highest and lowest catch rates have been excluded for each hour. Comparing this chart with that published in May, one can immediately see that there were higher catch rates on this occasion.
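The averaging used for Figure 1 amounts to a trimmed mean per hour. The following is a minimal sketch of that idea, assuming the hourly catch rates of the full solutions are available as a plain list of percentages; the function name and the sample values are hypothetical.

```python
def trimmed_hourly_average(catch_rates):
    """Average the catch rates for one hour, ignoring the single highest
    and single lowest value so that one outlier cannot skew the result."""
    if len(catch_rates) < 3:
        raise ValueError("need at least three products to trim both extremes")
    ordered = sorted(catch_rates)
    kept = ordered[1:-1]  # drop the lowest and the highest rate
    return sum(kept) / len(kept)


# Hypothetical hour in which one product performed poorly.
print(trimmed_hourly_average([99.0, 99.5, 100.0, 90.0]))  # 99.25
```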

In the text that follows, unless otherwise specified, ‘ham’ or ‘legitimate email’ refers to email in the ham corpus – which excludes the newsletters – and a ‘false positive’ refers to a message in that corpus that has been erroneously marked by a product as spam.

SC rate: 99.79%

FP rate: 0.05%

Final score: 99.44

Project Honey Pot SC rate: 99.52%

Abusix SC rate: 99.97%

Newsletters FP rate: 2.8%

For Axway’s MailGate virtual appliance, the July test was good on all fronts: the product saw its spam catch rate increase to almost 99.8% – the highest it has ever been in our tests – and with just five false positives, the glitch the product experienced in the May test was proven to have been a one-off occurrence. With a decrease in the false positive rate among the newsletters as well, Axway’s developers have plenty to be pleased about and the product earns its eighth VBSpam award in a row.

SC rate: 99.96%

FP rate: 0.00%

Final score: 99.95

Project Honey Pot SC rate: 99.94%

Abusix SC rate: 99.97%

Newsletters FP rate: 0.3%

A VBSpam participant and award winner since the very first test, Bitdefender has already notched up 13 VBSpam+ awards. In this test, the product blocked 99.96% of spam emails and no legitimate emails, achieving the fourth highest final score – and earning its 14th VBSpam+ award.

SC rate: 99.93%

FP rate: 0.01%

Final score: 99.82

Project Honey Pot SC rate: 99.86%

Abusix SC rate: 99.97%

Newsletters FP rate: 1.5%

Egedian has been a VBSpam participant since the spring of 2014, and we have seen a gradual improvement in the product’s performance over time. On this occasion there was further improvement, with the product blocking no fewer than 99.93% of the emails in our spam feeds (having blocked only just over 99% in the last test). While such significant increases in catch rate are often accompanied by an increase in false positive rate, the virtual solution blocked just one legitimate email, meaning that it easily earns its seventh VBSpam award.

SC rate: 99.99%

FP rate: 0.00%

Final score: 99.99

Project Honey Pot SC rate: 99.98%

Abusix SC rate: 99.99%

Newsletters FP rate: 0.0%

ESET’s VBSpam history goes back three years – and its VB100 history much longer than that – but for this test, the company submitted a different solution from the one we have tested before: one that also works with Microsoft’s popular Exchange Server mail server, but which includes, as the company described it, a ‘completely remodelled anti-spam engine developed internally by ESET’.

It is thus a little unfair to compare this month’s test results with those of the other ESET solution we have tested, but in fact this new product would have looked good against just about any other product: it misclassified a mere 21 spam emails (out of more than 155,000), and there were no false positives in either feed. The second highest final score this month and a VBSpam+ award make this a fantastic debut for ESET’s Mail Security for Microsoft Exchange Server.

SC rate: 99.98%

FP rate: 0.00%

Final score: 99.97

Project Honey Pot SC rate: 99.97%

Abusix SC rate: 99.99%

Newsletters FP rate: 0.3%

In its long VBSpam test history, which goes all the way back to the second VBSpam test, Fortinet’s FortiMail appliance has always performed well, never missing a VBSpam award, and earning several VBSpam+ awards along the way. But a 99.98% catch rate is impressive even by these standards. Moreover, the appliance didn’t block any of the more than 9,500 legitimate emails and blocked just a single newsletter, resulting in the third highest final score and the company’s fifth VBSpam+ award.

SC rate: 99.67%

FP rate: 0.00%

Final score: 99.66

Project Honey Pot SC rate: 99.68%

Abusix SC rate: 99.66%

Newsletters FP rate: 0.3%

GFI’s MailEssentials is one of the products for which this month’s test only brings good news. The Windows-based solution saw its performance improve in all three vectors, with most notably a total lack of false positives. The product’s seventh VBSpam+ award is thus very well deserved.

SC rate: 99.87%

FP rate: 0.02%

Final score: 99.75

Project Honey Pot SC rate: 99.70%

Abusix SC rate: 99.98%

Newsletters FP rate: 0.3%

Like almost all products in this month’s test, IBM saw its spam catch rate improve slightly – to 99.87%. However, it also blocked more legitimate emails than it did in May’s test: two, compared to a clean sheet back then. It thus misses out on a VBSpam+ award for the first time this year; nevertheless, it easily achieved a VBSpam award.

SC rate: 99.92%

FP rate: 0.02%

Final score: 99.81

Project Honey Pot SC rate: 99.91%

Abusix SC rate: 99.92%

Newsletters FP rate: 0.0%

Until this month, Kaspersky’s Linux-based anti-spam solution had the honour of being the product with the current longest unbroken run of VBSpam+ awards, having not missed any since November last year. Unfortunately, that run came to an end this month when the product misclassified two legitimate emails – both written in English. This was still a decent set of results for the product, but on this occasion a standard VBSpam award has to suffice.

SC rate: 99.98%

FP rate: 0.01%

Final score: 99.91

Project Honey Pot SC rate: 99.95%

Abusix SC rate: 100.00%

Newsletters FP rate: 0.3%

More often than not in the past two years, Libra Esva has achieved a VBSpam+ award. So the results of this test, when a single legitimate email prevented it from doing so, will no doubt be seen as a disappointment. A small one, hopefully, as the virtual appliance blocked an impressive 99.98% of spam and, amid fierce competition, ended up with the fifth highest final score.

SC rate: 99.84%

FP rate: 0.05%

Final score: 99.54

Project Honey Pot SC rate: 99.68%

Abusix SC rate: 99.95%

Newsletters FP rate: 1.2%

You win some, you lose some. The spam catch rate of McAfee’s SaaS solution increased by almost a percentage point to 99.84% – we noticed quite a few emails in French and Spanish among those that were missed – but against that stood five false positives (from three different senders). Nevertheless, the product’s final score improved significantly and easily earns McAfee another VBSpam award.

SC rate: 99.999%

FP rate: 0.00%

Final score: 99.999

Project Honey Pot SC rate: 100.00%

Abusix SC rate: 99.999%

Newsletters FP rate: 0.0%

A dubious advertisement for a financial product in German – that we believe should be classified as spam – was the only one of more than 165,000 emails that OnlyMyEmail classified incorrectly. It’s the sort of thing we’ve come to expect from this hosted solution, but it’s still impressive. Of course, the product ends up with the highest final score and another VBSpam+ award – the product’s tenth.

SC rate: 99.50%

FP rate: 2.39%

Final score: 86.97

Project Honey Pot SC rate: 99.60%

Abusix SC rate: 99.44%

Newsletters FP rate: 51.1%

Scrollout F1 isn’t having a good run in our tests. While a spam catch rate of 99.50% is certainly decent enough (albeit the lowest among all full solutions in this test), the product’s false positive rate – well over 2% – is simply poor. It is so poor that we’ve come to suspect that the product might not be fully adjusted to the test environment – a view that is shared by the product’s developers. While they are looking into the issue, we are forced to deny the open-source solution a VBSpam award.

SC rate: 99.82%

FP rate: 0.01%

Final score: 99.77

Project Honey Pot SC rate: 99.57%

Abusix SC rate: 99.98%

Newsletters FP rate: 0.0%

A single legitimate email in this test was blocked by Sophos’s Email Appliance. The reason is often unclear for us as testers, but in this case it might have been the use of various (legitimate) URL shorteners. In any case, the false positive prevents the product from achieving another VBSpam+ award in what was otherwise a very good test, in which its catch rate increased slightly and its newsletter false positive rate dropped to zero. Another VBSpam award, Sophos’s 33rd, is thus more than well deserved.

SC rate: 99.93%

FP rate: 0.01%

Final score: 99.85

Project Honey Pot SC rate: 99.86%

Abusix SC rate: 99.99%

Newsletters FP rate: 0.9%

It was also a single false positive for SpamTitan that prevented it from achieving another VBSpam+ award in an otherwise decent performance – the product missed just 103 spam emails in a variety of languages and blocked three newsletters. Another VBSpam award should keep the developers motivated to get that ‘plus’ back though.

SC rate: 99.90%

FP rate: 0.03%

Final score: 99.69

Project Honey Pot SC rate: 99.78%

Abusix SC rate: 99.97%

Newsletters FP rate: 1.5%

With the exception of the newsletters – which is a small corpus that contributes little overall – ZEROSPAM’s performance on this occasion was very similar to that in the last test. Three false positives in as many different languages prevented the product from earning another VBSpam+ award, but after missing slightly fewer than one in a thousand spam emails, its 21st VBSpam award is well deserved.

SC rate: 30.84%

FP rate: 0.04%

Final score: 30.63

Project Honey Pot SC rate: 67.31%

Abusix SC rate: 6.94%

Newsletters FP rate: 0.0%

SC rate: 92.71%

FP rate: 0.00%

Final score: 92.71

Project Honey Pot SC rate: 85.99%

Abusix SC rate: 97.12%

Newsletters FP rate: 0.0%

SC rate: 95.43%

FP rate: 0.04%

Final score: 95.22

Project Honey Pot SC rate: 92.68%

Abusix SC rate: 97.23%

Newsletters FP rate: 0.0%

The performance of Spamhaus in these tests remains volatile, which is natural for a partial solution: spam filtering is a multi-layered approach and Spamhaus offers only two of these layers (IP and domain blocking); hence we don’t count it as a full product.

In this test, the DBL domain blacklist performed significantly better than it did in the last test, although there were four false positives due to the inclusion of two domains that, our feeds show, were also used in legitimate emails. The catch rate for the ZEN aggregated IP-based blacklist decreased slightly, but there were no false positives, while the catch rate for the combined list remained well over 95%.
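For readers unfamiliar with how IP-based and domain-based blacklists are typically consulted, the sketch below builds the DNS names a mail filter would look up. It follows the common DNSBL convention (reversed IPv4 octets, or the domain itself, prepended to the list's zone) and performs no network queries. The function names are hypothetical, and the zone names are assumptions that are not stated in this report.

```python
import ipaddress


def ip_query_name(ip, zone="zen.spamhaus.org"):
    """Build the DNS name for an IPv4 blacklist lookup.

    By DNSBL convention the octets are reversed and the list's zone is
    appended; a filter would then resolve this name and treat any answer
    as a listing. The default zone is an assumption for illustration.
    """
    addr = ipaddress.IPv4Address(ip)  # raises ValueError on malformed input
    reversed_octets = ".".join(reversed(str(addr).split(".")))
    return f"{reversed_octets}.{zone}"


def domain_query_name(domain, zone="dbl.spamhaus.org"):
    """Build the DNS name for a domain blacklist lookup.

    The domain found in the email is lower-cased, stripped of a trailing
    dot and prepended to the zone. The default zone is an assumption.
    """
    return f"{domain.rstrip('.').lower()}.{zone}"


# 192.0.2.1 is a documentation address, used here purely as an example.
print(ip_query_name("192.0.2.1"))         # 1.2.0.192.zen.spamhaus.org
print(domain_query_name("Example.com."))  # example.com.dbl.spamhaus.org
```

A combined check, as in the third set of results above, would flag an email if either kind of lookup returned a listing.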


July 2015 was a good month for products in the VBSpam test. As always, one is right to wonder whether this was a mere coincidence – behind the decimal point, the performance of spam filters tends to be volatile – or whether this is part of a trend. The next test will tell – and will also introduce a new metric that shows how much the various filters delay the emails that are sent through them.

The next VBSpam test will have finished by the time this report is published, with the results scheduled for publication in September. The test following that will run in October, with the results scheduled for publication in November. Developers interested in submitting products should email [email protected].