2015-07-28

Abstract

This month the VB lab team put 14 business products and 30 consumer products to the test on Windows 8.1 Pro. The VB100 pass rate was decent, although not quite up to the perfect or near-perfect fields seen in a few recent tests. John Hawes has the details.

Copyright © 2015 Virus Bulletin

This month’s comparative pays another visit to Windows 8.1, which for the time being remains Microsoft’s flagship desktop platform pending the release of Windows 10 in late July. As usual on desktop platforms, a wide range of products were submitted for testing, promising to keep the team busy for quite some time. To ease the burden slightly, we decided to make some adjustments to the test components, dropping some of the more time-consuming elements and expanding some areas where automation allows us to fit more useful data into less time.

Windows 8.1 still feels fairly fresh and new despite the imminence of its upcoming replacement, and users have been rather slow to adopt it – the latest stats put it on between 7% and 13% of all systems browsing the web, which is pretty close to the aged, defunct but still popular Windows XP.

Preparation for the test was fairly straightforward, with the images used for the previous Windows 8.1 comparative recycled with a few adjustments, mainly affecting our performance tests which saw some significant upgrades this month.

Regular readers will note the absence this month of our usual figures for scanning speed and simple file access lag times. To reduce the time taken to complete tests in the face of an ever-growing field of participants, we have decided to drop these components from our desktop comparatives. In the desktop space, on-demand scans are mostly run overnight or whenever the system is not busy, rendering the speed of scans of rather limited interest. We will continue to include this data in our server tests though, where such factors are more important. We will also keep our file access lag measurements in server tests, as although the measurement is somewhat academic, it remains relevant to the throughput of servers handling large amounts of file access traffic.

For all tests, we will focus our performance testing mainly on our set of standard activities, which since its introduction has included common tasks such as moving, copying, archiving and un-archiving of files. Last year we added installation of common software, and for this test we have expanded the tests further to include launch time for a selection of popular packages, including web browsers, media players and office tools. We continue to work on a new standalone performance test which will report more regularly and in a more digestible format.

With the set-up for this new range of measures in place, we continued preparing the test systems by loading them with our sample sets. With the deadline for submissions set for 18 February, the certification component used version 4.014 of the WildList, which was finalized on the deadline date itself, and the latest version of our collection of clean files, which this month weighed in at just under 700,000 samples or 140GB of data. Alongside these test sets we compiled our usual daily sets for use in the RAP/Response tests, with an average of just under 4,000 samples per day making it into the final sets and a total of a little over 150,000 individual items over the test period.

On the deadline day the products flooded in as expected, with a total of over 50 accepted for testing, although a number of those did not make it all the way to this report.

Main version: 150218065431

Update versions: 150312130131, 150323081034, 15041063439

Last 6 tests: 4 passed, 0 failed, 2 no entry

Last 12 tests: 5 passed, 0 failed, 7 no entry

ItW on demand: 100.00%

ItW on access: 100.00%

False positives: 0

Stability: Fair

Since Arcabit’s return to our test a little over a year ago with an all-new product, it has enjoyed a pleasing string of successes. The solution includes the Bitdefender engine and takes a fair while to install, but presents a nice simple interface with clean, sharp lines and minimal clutter, although a good depth of configuration is available.

Stability was mostly decent, although we saw a few odd issues with settings changes failing to stick, and on one install the on-access component was completely non-functional, requiring several reboots of the test machine to get it to start up. Resource usage was not excessive, but we did observe some slowdown on executing our set of tasks.

Detection was decent in the reactive sets, dropping somewhat into the proactive parts of the RAP test, but there were no issues in the certification sets and a VB100 award is easily earned.

Main version: 14.0.7.468

Update versions: 8.11.210.200, 8.11.215.110, 15.0.8.656/8.11.218.116, 8.11.221.30

Last 6 tests: 6 passed, 0 failed, 0 no entry

Last 12 tests: 11 passed, 0 failed, 1 no entry

ItW on demand: 100.00%

ItW on access: 100.00%

False positives: 0

Stability: Fair

Avira’s history in our tests is exemplary, with a long string of passes going back to 2009 and very few tests missed. The latest version of the product installs rapidly and looks attractive, with a sensible and orderly layout and a very thorough range of configuration options.

Stability was somewhat suspect this month, unusually for Avira, with a number of issues experienced getting to the end of scans. Several scans either crashed out part way through or else appeared to complete, only to produce no final notification and incomplete logging. However, as this affected only our rather specialized jobs handling large volumes of malware, the stability score remains within the bounds of the acceptable. Performance was good though, with low use of resources and a fairly low impact on our set of tasks.

Detection was excellent, as usual, with high scores across the board and no issues in the certification sets, thus earning Avira a VB100 award for its ‘Pro’ product.

Main version: 9.1

Update versions: 4557.701.1951 build 1055, build 8, build 15, build 20

Last 6 tests: 5 passed, 0 failed, 1 no entry

Last 12 tests: 7 passed, 0 failed, 5 no entry

ItW on demand: 100.00%

ItW on access: 100.00%

False positives: 0

Stability: Stable

The Defenx product is closely related to that of Agnitum, sharing a GUI design as well as an engine, but has a few tweaks and quirks of its own, and it has done well over the last year or so. The installation process is a little slow, with much time taken getting the latest set of updates, but once completed another pleasantly clean and clear interface is presented with a good range of controls available. There is also a ‘Quick Tune’ browser plug-in provided, with easy controls to block certain types of web content.

Stability was good with only a few very minor issues noted in the GUI, while performance measures showed reasonable use of memory and a high impact on our set of tasks, making the CPU use measure (taken periodically throughout the set of tasks and averaged out) look very low.

Detection was not the best, but remained respectable for the most part, and the core sets were dealt with properly, earning Defenx another VB100 award.

Main version: 3.0.0.4

Update versions: 13.3.21.1/546658.2015021715/7.59332/6402786.20150218, 13.3.21.1/552205.2015031301/7.59653/6648662.20150312, 13.3.21.1/553185.2015032322/7.59799/6541817.20150323, 13.3.21.1/553738.2015033019/7.59884/6311749.20150330

Last 6 tests: 5 passed, 0 failed, 1 no entry

Last 12 tests: 10 passed, 0 failed, 2 no entry

ItW on demand: 100.00%

ItW on access: 100.00%

False positives: 0

Stability: Stable

The last couple of years have been good ones for ESTsoft in our tests, with a clean sweep of passes in all tests apart from those on Linux (which were not entered). The install process is rather slow, as ever, with most of the time taken up by initial updating, and the interface remains rather busy with a lot of sections shown at once and a cute cartoon pill to add some light relief.

Stability was good, with just some issues firing off updates on the first attempt and a single scan job not completing cleanly, but nothing serious to report. Performance measures show very low use of RAM, CPU use a little high and impact on our set of tasks noticeable but not excessive.

Detection, supported as usual by the Bitdefender engine, was solid with just a slight decline into the later parts of the sets, and the core certification sets were handled very nicely with no issues. A VB100 award is well deserved.

Main version: 5.0.9.1347

Update versions: 5.158/23.858, 25.021, 25.072, 25.142

Last 6 tests: 4 passed, 1 failed, 1 no entry

Last 12 tests: 9 passed, 1 failed, 2 no entry

ItW on demand: 100.00%

ItW on access: 100.00%

False positives: 0

Stability: Stable

Fortinet products have appeared in every Windows VB100 comparative since 2008 at least, and have a strong pass rate with only a handful of slips. The current product installs very quickly and presents a fairly basic, minimalist interface with only a very limited set of configuration options made available to the end-user. Operation is fairly simple and intuitive though.

Stability was good, with just a few minor wobbles, and with fairly average use of resources, our set of tasks ran through in good time.

Detection was once again strong with a slight dip into the later parts of the sets, and the certification sets were handled without issues, earning Fortinet a VB100 award.

Main version: 2.7.30

Update versions: 90665, 90933, 91028, 2.8.9/9144

Last 6 tests: 4 passed, 1 failed, 1 no entry

Last 12 tests: 6 passed, 4 failed, 2 no entry

ItW on demand: 99.76%

ItW on access: 99.76%

False positives: 0

Stability: Stable

We’ve seen a nice run of passes from Ikarus in the last year or so, seemingly putting its long-running problems with false positives firmly in the past. The installation process isn’t the fastest but completes in reasonable time, and the .NET-based interface, always a little on the angular side, looks very much at home on the sharp-cornered Windows 8.1. Configuration options are sparse, but fairly obvious and easily navigable.

Stability was reasonable, with a couple of scans failing and some odd issues with disabling protection properly on one occasion, but nothing too major. Our set of tasks took a very long time to get through, but resource use remained around average throughout.

Detection was solid with just a gentle downward slope into the proactive sets. The clean sets were once again well handled, but in the WildList sets a couple of items went undetected in the earlier parts of the test. This means that despite clean runs later on, no VB100 award can be granted to Ikarus this month.

Main version: 5.4.1.0000

Update versions: 5.4.0/3.71, 5.5.1

Last 6 tests: 5 passed, 0 failed, 1 no entry

Last 12 tests: 10 passed, 0 failed, 2 no entry

ItW on demand: 100.00%

ItW on access: 100.00%

False positives: 0

Stability: Solid

Our test history for iSheriff’s product line is entangled with previous incarnations of the company behind the product, including CA and Total Defense. This is something we may try to address when we next revamp our online result display system, but the history will still show a good run of passes over the last few years. Installation of the product has become rather easier with familiarity, and doesn’t take too long once you know where to locate the required buttons. The interface to the cloud-based portal and local controls is reasonably responsive for a browser-based GUI, and provides a decent basic set of controls.

Stability was impeccable this month, with no issues of note, and performance was good too, with low use of resources and not much impact on our set of tasks.

Detection, aided as usual by the Bitdefender engine, was pretty decent with a slight decline into the later weeks. The core certification sets presented no issues, and iSheriff adds another VB100 award to its recent haul.

Main version: 10.2.1.23

Update versions: 10.2.1.23(a)

Last 6 tests: 5 passed, 0 failed, 1 no entry

Last 12 tests: 9 passed, 1 failed, 2 no entry

ItW on demand: 100.00%

ItW on access: 100.00%

False positives: 0

Stability: Stable

Kaspersky Lab’s enterprise product fits nicely into the company’s main history line in our records, which shows a long trail of test results going all the way back, very few of which are not passes. The latest edition took quite some time to get set up this month, with almost all of that time spent updating. The interface is the usual Kaspersky green, with a slightly unusual layout which quickly becomes clear and intuitive with a little exploration and provides a comprehensive set of controls.

Stability was almost perfect, with just a single oddity: an unexpected shutdown of Internet Explorer during one of our performance measures. These showed some reasonable use of resources and not much of an impact on our set of tasks.

Detection was decent, with a dip into the proactive sets where the company’s KSN cloud look-up system is unavailable. No problems were noted in the core certification sets, and a VB100 award is well deserved.

Main version: 15.0.2.361

Update versions: N/A

Last 6 tests: 4 passed, 0 failed, 2 no entry

Last 12 tests: 5 passed, 0 failed, 7 no entry

ItW on demand: 100.00%

ItW on access: 100.00%

False positives: 0

Stability: Solid

The small office offering from Kaspersky has made only a handful of appearances in our tests, all in the past couple of years and all resulting in passes. Set-up was rather quicker for this version, with the interface looking rather dashing in its dark colour scheme trimmed with the odd flash of the company’s standard green. A tiled design reflects the Windows 8 styling and seemed simple to use, with the usual complete range of controls provided, and alongside the usual components are an encryption tool and a range of browser add-ins including a virtual keyboard and a module called ‘Safe Money’.

Stability was good, with only a few minor issues, and performance was decent too, with resource use around average and our set of tasks noticeably slower but not by too much.

Detection was again decent although, much to our surprise, it was a little lower than that of the Endpoint version. When we looked into this, the KSN cloud look-up system seemed not to be functioning in any of the test installs despite having been activated during start-up. We are investigating the cause of this in collaboration with the developers.

This didn’t upset any of the certification tests though, which ran through cleanly and earned Kaspersky its second VB100 award this month.

Main version: 4.7.205.0

Update versions: 1.1.11400.0/1.193.42.0, 1.193.2100.0, 1.195.100.0, 1.195.344.0

Last 6 tests: 5 passed, 0 failed, 1 no entry

Last 12 tests: 9 passed, 0 failed, 3 no entry

ItW on demand: 100.00%

ItW on access: 100.00%

False positives: 0

Stability: Solid

Microsoft’s business solution has become something of a fixture in our tests over the last few years and has a very strong record of passes. It installs rapidly and simply, presenting a fairly simple if word-heavy interface with a basic set of configuration options available.

Stability was impeccable, with no problems noted, and performance was good too with resource use very close to that of sibling product Defender, although our set of tasks did run a little slower.

Detection was a little flimsy with a slow downward trend through the sets, but the core sets were dealt with well and a VB100 award is earned by Microsoft.

Main version: 7.59329

Update versions: 6375733, 7.59656/6583609, 7.59755/6593254, 7.59851/6340352

Last 6 tests: 4 passed, 1 failed, 1 no entry

Last 12 tests: 7 passed, 3 failed, 2 no entry

ItW on demand: 100.00%

ItW on access: 100.00%

False positives: 0

Stability: Stable

There has been much change at Norman of late, with the company dividing in two and a chunk of it being acquired by another firm. There has also been much change in the company’s product, which is more or less unrecognizable here from previous incarnations. Deployed and operated through a web-based portal, the local control system is very minimal indeed, limited to ‘scan’ and ‘disable’ for the most part, with a little more detailed configuration available via the portal.

Stability was reasonable, although the portal was a little laggy and occasionally lost connection, as seems to be the way with these things. The local interface also dropped out at times, and a single scan run failed to complete properly. Performance impact was very light though, with minimal resource use and a very short time taken to complete our set of tasks.

Detection was decent, aided by the integrated Bitdefender engine, showing just a slight downward trend through the sets, and the core sets were well dealt with, earning Norman a VB100 award.

Main version: 6.8.11

Update versions: 7.00.00

Last 6 tests: 5 passed, 0 failed, 1 no entry

Last 12 tests: 8 passed, 2 failed, 2 no entry

ItW on demand: 100.00%

ItW on access: 100.00%

False positives: 0

Stability: Stable

After a long absence, Panda’s return to the VB100 tests a couple of years back has led to a good string of successes for the firm after some initial wobbles, with a clean sweep in the last year. The business solution is very quick to set up and presents another very minimalist GUI with only a few basic controls, but it proved simple and reliable to operate.

Stability wasn’t quite perfect, with a few minor issues mainly related to the updating. Performance impact was fairly low, with low resource use and a reasonable time taken to complete our set of tasks.

Detection was reasonable too in the reactive sets, with no measurement possible in the proactive sets as the product relies entirely on Internet connectivity to function. The core sets were well handled with no issues to report, and Panda’s recent run of passes is extended with another VB100 award.

Main version: 16.00(9.0.0.2)

Update versions: N/A

Last 6 tests: 1 passed, 0 failed, 5 no entry

Last 12 tests: 1 passed, 0 failed, 11 no entry

ItW on demand: 100.00%

ItW on access: 100.00%

False positives: 0

Stability: Stable

There are two products from Quick Heal in this month’s comparative, with the company’s Seqrite business offering appearing for the first time. Installation took a fair while, with updates taking the bulk of the time, and once up the interface had a similar look and feel to other products we’ve seen from the firm, albeit with a rather stark monochrome colour scheme. The layout is clear with nice large buttons leading through to more detailed configuration, at a reasonable level of completeness.

Stability was a whisker away from flawless, with just a single, rather minor issue: a freezing up of one of the update dialogs. Performance impact was a little high, especially RAM use, and our set of tasks was noticeably slower to complete.

Detection was pretty decent though, only falling away in the very last week of the RAP test, and there were no issues in the certification sets, earning Quick Heal a VB100 award for its business edition.

Main version: 10.0.39052

Update versions: 10.0.40642

Last 6 tests: 2 passed, 0 failed, 4 no entry

Last 12 tests: 2 passed, 0 failed, 10 no entry

ItW on demand: 100.00%

ItW on access: 100.00%

False positives: 0

Stability: Fair

This was the second outing for ITbrain, after making its debut (with a pass) in the last comparative of 2014. Once again we saw a rapid install process and a control system operated mainly from a web-based portal and closely integrated with the main TeamViewer application suite. Configuration options are limited to the basics, but fairly usable and mostly responsive.

There were some stability issues though, with a number of scans crashing out, error messages from time to time in a number of areas, and occasional logging issues too. Performance impact was pretty light, with a very short time taken to complete our set of activities.

Detection is provided by the Bitdefender engine, which produced some good scores once again in the response sets, with no proactive data thanks to the online-only operation. The certification sets were properly handled with no problems, and a second VB100 award goes to ITbrain.

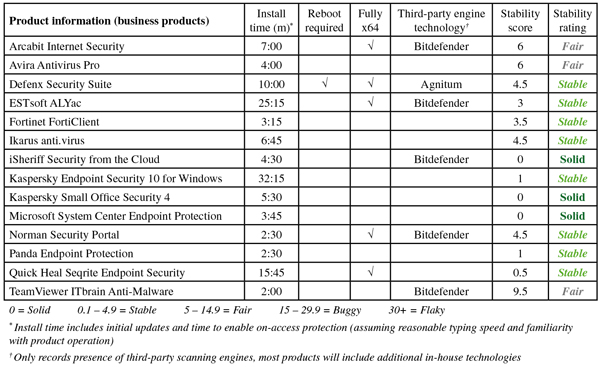

Main version: 9.1

Update versions: 4654.701.1951 build 1055, build 8, build 14, build 20

Last 6 tests: 5 passed, 0 failed, 1 no entry

Last 12 tests: 9 passed, 0 failed, 3 no entry

ItW on demand: 100.00%

ItW on access: 100.00%

False positives: 0

Stability: Solid

Agnitum’s Outpost suite has a history in the VB100 tests dating back to 2007, with a strong ratio of passes over the years. The current version takes a few minutes to set up, with updating the main drag, but once up presents a familiar layout with a little extra sharpness around the corners in keeping with its surroundings on Windows 8.1. Navigation is fairly straightforward, with easy access to a decent set of controls.

Stability was very good indeed, without even any trivial annoyances, but performance impact was rather high, with a very long time indeed taken to complete our set of activities and a noticeable increase in RAM use. CPU use looks low thanks to being averaged out over a long period, implying that much of the time added during the activities test was spent idly waiting for a response.
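The averaging effect on the CPU figure can be illustrated with a minimal sketch. The readings below are hypothetical values chosen for illustration, not measurements from this test, and `average_cpu` is an illustrative helper rather than part of the lab's tooling. It assumes only that CPU use is sampled at regular intervals while the set of activities runs and that the reported figure is the mean of those samples.

```python
def average_cpu(samples):
    """Return the mean of periodic CPU-use readings (in percent)."""
    return sum(samples) / len(samples)


# Hypothetical readings, one per sampling interval (not real test data).
# Baseline: the activities keep the CPU busy for 4 intervals.
baseline = [60, 60, 60, 60]

# Slowed run: the same busy work, plus 12 intervals spent idly waiting.
slowed = [60, 60, 60, 60] + [0] * 12

print(average_cpu(baseline))  # 60.0
print(average_cpu(slowed))    # 15.0
```

The amount of busy work is identical in both runs, but the longer run spreads it over four times as many samples, so the averaged figure drops to a quarter. A low average CPU figure alongside a long task time is therefore consistent with time spent waiting rather than with light processing.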

Detection was a little mediocre, with a gentle decrease through the weeks, but the core sets were managed well, with no issues to report, and another VB100 award going to Agnitum.

Main version: 3.2.0.12 (build 579)

Update versions: 2015.02.17.04, 2015.03.13.05, 2015.03.19.06, 2015.04.01.00

Last 6 tests: 1 passed, 0 failed, 5 no entry

Last 12 tests: 2 passed, 0 failed, 10 no entry

ItW on demand: 100.00%

ItW on access: 100.00%

False positives: 0

Stability: Fair

AhnLab’s products have been regulars on the VB100 test bench for over a decade, although in the last few years they have appeared only sporadically, making it hard to comment on the reliability of their results of late. The current solution installs quickly, and presents an interface sporting the tiled styling of Windows 8 in a moody grey colour scheme. The layout is clear and easily figured out though, with a decent set of configuration controls available.

Stability was dented by some problems completing a few scans, and also some logging issues, and our performance measures were somewhat hampered by a tendency to block the activities of some of the tools used in the test. Eventually usable data was gathered, showing a noticeable but not massive impact on our set of tasks, with fairly low resource use.

Detection was reasonable, dropping off quite a bit into the later weeks, and the core sets presented no issues, earning AhnLab a VB100 award once again after a lengthy absence.

Main version: N/A

Update versions: 2015.10.2.2214/150306-0, 150316-0, 2015.10.2.2215/150324-0

Last 6 tests: 5 passed, 1 failed, 0 no entry

Last 12 tests: 9 passed, 2 failed, 1 no entry

ItW on demand: 100.00%

ItW on access: 100.00%

False positives: 0

Stability: Stable

Avast’s recent history in our tests shows something of a blip, with a couple of failed tests and one missed after several years of flawless successes, but the last few comparatives have turned up no problems and it seems likely that another run of good times is well under way. The latest edition of the product installs in a few minutes and presents another angular, tiled design with good clear access to a wide range of tools, plus a little advertising for other products from the firm. Some browser add-ins are included, and configuration is in fine detail.

Stability was dented only by a small issue to do with logging settings which seemed to be ignored, but was otherwise fine. Performance impact was a little high over our set of activities, although resource use was not too heavy.

Detection rates were strong in the response sets, but some issues during the submission process meant that no usable proactive data was available. The core sets ran through with no upsets though, and Avast earns another VB100 award to maintain that new run of success.

Main version: 3.4.3

Update versions: 45718, 46063, 46194, 46287

Last 6 tests: 1 passed, 1 failed, 4 no entry

Last 12 tests: 1 passed, 1 failed, 10 no entry

ItW on demand: 99.61%

ItW on access: 99.61%

False positives: 0

Stability: Fair

A relative newcomer to the VB100 tests, Avetix so far has only a single previous entry, a pass in August 2014. The set-up this month was a little on the slow side, but the GUI, once accessible, proved bright and colourful with good clear information and decent configuration options.

Stability was something of an issue here, with many of our scan jobs crashing out and some proving impossible to complete, making for a lot of extra work for the lab team in getting enough data together. However, a major new version was released not long after testing finished, which should reduce these problems for most users. Performance impact for this version was pretty light, on the good side of average at least.

Detection from the Bitdefender engine was strong when it could be made to work properly, with good scores in all sets we managed to complete. There were no issues in the clean sets, but in the WildList sets we encountered a small handful of items which seemed to be missed repeatedly on each run, denying Avetix a VB100 award on this occasion.

Main version: 2015.0.5646

Update versions: 4284/9125, 4299/9259, 4306/9315, 2015.0.5751/4315/9373

Last 6 tests: 6 passed, 0 failed, 0 no entry

Last 12 tests: 11 passed, 1 failed, 0 no entry

ItW on demand: 100.00%

ItW on access: 100.00%

False positives: 0

Stability: Solid

AVG’s history in the VB100 tests is long and illustrious, with only a handful each of skipped tests and failed attempts in the last decade and a clean sweep in the last year. The latest suite edition took a little while to set up, and offered us another tiled GUI – a little brighter than previous versions albeit still with a lot of dark grey, and a thorough set of configuration options in easy reach.

Stability was impeccable, with no issues noted, and performance impact and resource use were both fairly light.

Detection was very good indeed with only a slight dropping off into the later parts of the sets, and the core sets were dealt with admirably, earning AVG another VB100 award.

Main version: 14.0.7.468

Update versions: 8.11.210.200, 15.0.8.650/8.11.215.174, 15.0.8.656/8.11.218.106, 8.11.221.30

Last 6 tests: 3 passed, 0 failed, 3 no entry

Last 12 tests: 6 passed, 0 failed, 6 no entry

ItW on demand: 100.00%

ItW on access: 100.00%

False positives: 0

Stability: Fair

Avira’s free personal edition has appeared in all our desktop tests for the past couple of years, and has passed every one of them. This month, after a fairly speedy set-up we got to operate a pleasantly clear and well laid out interface with plenty of fine-tuning provided, which was mostly fairly responsive through testing.

We did have some stability issues though, with several scans causing problems, and occasional logging problems too, but only during very heavy stress. Performance impact was light throughout, with a good time taken to complete our set of tasks.

Detection was excellent with good scores throughout, and with no issues in the certification sets, a VB100 award is comfortably earned.

Main version: 18.17.0.1227

Update versions: 7.59328/6376149, 7.59656/6583609, 7.59759/6597927, 7.59852/6342352

Last 6 tests: 6 passed, 0 failed, 0 no entry

Last 12 tests: 12 passed, 0 failed, 0 no entry

ItW on demand: 100.00%

ItW on access: 100.00%

False positives: 0

Stability: Stable

Bitdefender, whose engine graces a wide range of other solutions in this and most other recent tests, has an exemplary record in the VB100 tests, and assuming all goes well will shortly reach five years of consistent passes in every single comparative. The latest product, which seems to insist on the shouty name, is pretty quick to install and looks dark and mysterious, adorned at times with a shimmery ‘dragon-wolf’ emblem. Alongside the usual components are a selection of extras including a ‘Wallet’ module and a file shredder.

The layout is a standard tiled affair, nice and clear and simple to use but providing a good depth of configuration, and it seemed pretty stable throughout testing. The only issue of note was a single instance where the log exporting seemed to freeze on 98%. Performance impact was once again rather odd, mostly quite light but very slow indeed in some parts of our activities test, seeming to sit and wait for long periods before moving on. Resource use was low throughout.

Detection was as strong as ever with a very gradual downward gradient through the weeks. There were no problems in the certification sets, and Bitdefender’s long run of passes continues unbroken.

Main version: 1.5.0.25

Update versions: 6749, 7096, 1.5.027/7217, 1.5.025/7318

Last 6 tests: 3 passed, 0 failed, 3 no entry

Last 12 tests: 3 passed, 0 failed, 9 no entry

ItW on demand: 100.00%

ItW on access: 100.00%

False positives: 0

Stability: Fair

BluePex has been only an occasional participant in VB100 tests so far, and its previous two appearances have both resulted in passes, although several other submissions have failed to make it as far as the report stage. The set-up is a little slow, although that may be a result of the distance of the test lab from the company’s main market in Brazil, and the interface shows some signs of incomplete localization in places, but it looks quite clear and nicely designed, with a decent basic set of controls.

Stability was rather shaky, with a number of failed scan jobs and on one occasion a dreaded blue screen incident. Performance impact wasn’t too bad, with fairly hefty slowdown over our set of activities but reasonable use of resources.

Detection, aided by the ThreatTrack engine, was decent too, very even in the earlier sets with just a slight dip into the final week. There were no issues in the certification sets, and BluePex notches up its third VB100 award in the space of a year.

Main version: 15.0.295.1

Update versions: 7.59328, 15.0.297/7.59656, 7.59754, 15.0.298/7.59852

Last 6 tests: 4 passed, 0 failed, 2 no entry

Last 12 tests: 9 passed, 0 failed, 3 no entry

ItW on demand: 100.00%

ItW on access: 100.00%

False positives: 0

Stability: Solid

Another long-term regular, BullGuard has a solid VB100 history with a good four years of reliable passes. The latest edition is a little quirky in design but more or less follows the trend for tiling, presenting plenty of information in an easily accessible format, and a good range of configuration too.

Stability was excellent with no issues observed, and performance impact was very good too, with resource use below average and our set of tasks blasting through in superb time.

Detection was splendid, with good scores everywhere including the certification sets which were brushed aside effortlessly, easily earning another VB100 award for BullGuard.

Main version: 13.4.255.000

Update versions: 8.5.0.79/1179802976, 1181374048, 1182215872, 1182482880

Last 6 tests: 3 passed, 0 failed, 3 no entry

Last 12 tests: 5 passed, 2 failed, 5 no entry

ItW on demand: 100.00%

ItW on access: 100.00%

False positives: 0

Stability: Stable

Check Point’s ZoneAlarm has become ever more of a regular sight on the VB100 test bench lately, with a decent run of passes in the last year or so. The installation process isn’t too drawn out, and the interface seems to have changed little for a long time – so much so that the tiled effect it has long clung onto has come back into fashion. It offers a reasonable range of configuration options, which are mostly fairly easy to find and operate.

Stability was pretty decent, with some minor logging issues noted, and also what seemed to be a broken web link on completing the installation. Performance impact was a little on the high side in our set of tasks, with RAM use also a little above average.

Detection, backed up by the Kaspersky engine, was pretty decent in the response sets, a little lower in the proactive sets with no web connection. The WildList and clean sets were properly dealt with though, and Check Point earns another VB100 award.

Main version: 5.1.31

Update versions: 5.4.11, 201502190900, 201503091320, 201503161437, 201503250814

Last 6 tests: 1 passed, 4 failed, 1 no entry

Last 12 tests: 2 passed, 7 failed, 3 no entry

ItW on demand: 99.89%

ItW on access: 98.34%

False positives: 8

Stability: Solid

The ancient Command name lives on under CYREN, and continues to battle for a stable run of VB100 performances – a struggle which will need to carry on a little longer. The product remains unchanged from the last several years, with a very lightweight main package which completes its business in very quick time. The interface is simple and minimal with only the basic options provided, but worked very nicely throughout.

There were no stability issues to report, and our performance measures showed fairly low RAM use, CPU use quite high and our set of activities quite heavily impacted, taking almost twice as long to complete as on our baseline systems.

Detection was superb in the reactive sets with very little missed, somewhat lower in the proactive sets without access to cloud look-ups, but fairly respectable even here. Things did not go so well in the certification sets sadly, with some WildList misses and a handful of false positives meaning there is once again no VB100 award for CYREN this month.

Main version: 9.0.0.4799

Update versions: 9.0.0.4985, 9.0.0.5066

Last 6 tests: 5 passed, 0 failed, 1 no entry

Last 12 tests: 9 passed, 1 failed, 2 no entry

ItW on demand: 100.00%

ItW on access: 100.00%

False positives: 0

Stability: Solid

Emsisoft’s VB100 history shows a clean run in the last year with only the Linux comparative not entered, and a good rate of passes in the longer term. This month, installation wasn’t too time-consuming, and the interface looked clean and professional with good clear status information in some nice large tiles on the home screen. Settings are available in reasonable depth.

Stability was flawless with no problems to report, and performance impact was pretty light, with a below-average slowdown through our set of activities and barely detectable change in resource consumption.

Detection, aided by the integrated Bitdefender engine, was strong, with good scores into the proactive sets, and with no issues once again in the core certification sets, Emsisoft earns another VB100 award.

Main version: 14.0.1400.1714 DB

Update versions: N/A

Last 6 tests: 6 passed, 0 failed, 0 no entry

Last 12 tests: 11 passed, 1 failed, 0 no entry

ItW on demand: 100.00%

ItW on access: 100.00%

False positives: 0

Stability: Stable

Our test history for eScan shows an appearance in every comparative since 2009 and many more before that, with a strong pass rate and a clean sweep over the course of the last year. The latest edition took a fair while to set up, with updates taking much of the time, and presented a rather brighter and more colourful interface than we’ve been used to, with the now standard tiled layout and the old dark grey colour scheme still showing up in the background and deeper into the configuration dialogs, which are as comprehensive as ever.

Stability was good, with just some minor oddities concerning window behaviour after install and, on a single occasion, the on-access component needing a restart to get going. Performance impact was good too, with below average resource use and not much slowdown through our set of tasks.

Detection, again helped along by the Bitdefender engine, was strong as usual, with no problems in the certification sets and a VB100 award is easily earned.

Main version: 8.0.304.0

Update versions: 11194, 11291, 11328, 11374

Last 6 tests: 6 passed, 0 failed, 0 no entry

Last 12 tests: 12 passed, 0 failed, 0 no entry

ItW on demand: 100.00%

ItW on access: 100.00%

False positives: 0

Stability: Solid

ESET’s VB100 record remains unrivalled, with an unbroken string of passes stretching back into the mists of time. The company’s latest edition has the usual slick and professional feel, with a stark, minimalist layout on the main screen but the expected wealth of fine-tuning options readily available.

Stability was once again impeccable with no problems observed at all, but performance impact was a little high with our set of tasks slowed down noticeably.

Detection was solid across the board, and with yet another clean run over the certification sets, ESET’s epic record of passes continues to build.

Main version: 25.0.2.2

Update versions: AVA 25.645/GD 25.4790, AVA 25.310/GD 25.4680, AVA 25.744/GD 25.4824, AVA 25.841/GD 25.4854

Last 6 tests: 4 passed, 0 failed, 2 no entry

Last 12 tests: 9 passed, 0 failed, 3 no entry

ItW on demand: 100.00%

ItW on access: 100.00%

False positives: 0

Stability: Stable

G Data’s products appear in most of our tests, and routinely do well, with their dual-engine approach regularly scoring very highly in detection without undue side-effects. The set-up is a little drawn out, eventually bringing up another solid and businesslike interface, detailed in the company’s trademark red, with a nice roomy layout and the usual ample range of controls and options.

Stability was dented only by a very slight and rather odd issue, with the screen blanking out when trying to access scan logs, but for the most part it was firm and dependable. Performance impact was reasonable too, with all our measures showing discernible increases but nothing excessive.

Detection was superb, as always, with all our sets just about demolished, the strong coverage extending comfortably into the certification sets where all went smoothly, thus earning G Data another VB100 award.

Main version: 14.2.0250

Update versions: 9.196.15010, 14.2.0250/9.200.15206, 14.2.0261/9.202.15312, 9.202.15387

Last 6 tests: 4 passed, 0 failed, 2 no entry

Last 12 tests: 6 passed, 1 failed, 5 no entry

ItW on demand: 100.00%

ItW on access: 100.00%

False positives: 0

Stability: Solid

K7 first appeared in the VB100 tests back in 2007 and has been a semi-regular participant since then, growing ever more likely to appear over the last year or two, with a decent rate of passes which has become very solid of late. The install process is speedy, the product interface with its military styling is appealing and nicely laid out, with a good selection of configuration options.

Stability was impeccable, and performance impact decent too with a lowish slowdown through our set of tasks, average RAM use and very low CPU use.

Detection was decent, tailing off somewhat into the proactive weeks, but the certification sets were handled impeccably and K7 adds another VB100 award to its tally.

Main version: 15.0.2.361

Update versions: N/A

Last 6 tests: 5 passed, 0 failed, 1 no entry

Last 12 tests: 6 passed, 0 failed, 6 no entry

ItW on demand: 100.00%

ItW on access: 100.00%

False positives: 0

Stability: Stable

Kaspersky Lab’s main consumer suite solution doesn’t always appear in our tests, but when it does it generally performs well. The latest iteration installs rapidly but takes a while getting its initial updates in place, and presents a simple and rather pale interface with only a few dabs of the usual green. Configuration options are in comprehensive depth and reasonably simple to access and operate, and there are a number of additional modules included, the most visible of which are the ‘Safe Money’ and parental control sections, both of which are given a tile of their own on the main screen.

Stability was mostly good, although we did note a single instance of the machine freezing up temporarily shortly after installation. Performance impact measures showed a fair hit on the completion time for our set of tasks, with slightly higher than usual resource usage.

Detection was decent, although not quite as good as we would expect, and once again closer inspection showed limited results from the company’s cloud look-up system, with some rounds clearly making good use of it while in others it appeared to be inactive. Again, we continue to delve into this issue in collaboration with the developers. The core certification sets were not affected however, and with a clean sweep in those sets, Kaspersky’s IS product also earns a VB100 award.

Main version: 9.0.6.9

Update versions: 90778, 90933, 91048, 91144

Last 6 tests: 0 passed, 2 failed, 4 no entry

Last 12 tests: 0 passed, 2 failed, 10 no entry

ItW on demand: 99.78%

ItW on access: 99.05%

False positives: 0

Stability: Stable

KYROL products, which are based on the MSecure product, have only made a single appearance in VB100 comparative reports so far, but have been submitted for a handful of other tests, not quite making it as far as the final write-up. This month, we got things set up very quickly. The product interface has a warm orange-and-blue colour scheme on a dark background, with large simple tiles for the main components, one of which is entitled ‘USB Guard’. Configuration is provided in good depth, with a layout that is sensible and easy to navigate.

Stability was decent, with just some minor issues with the interface freezing up at the end of large jobs. Performance impact was minimal, with very little additional resource consumption, and our set of activities actually ran through slightly faster than the baseline taken with Windows Defender active.

Detection was strong in the reactive sets, with no proactive data available due to issues at submission time. There were no problems in the clean sets, but in the WildList sets a handful of items were undetected throughout the test period. The numbers improved very slightly into the later runs, but that was not enough to earn KYROL a VB100 award this time around, despite a good effort.

Main version: 11.5.202.7299

Update versions: 11.6.306.7947

Last 6 tests: 3 passed, 0 failed, 3 no entry

Last 12 tests: 6 passed, 0 failed, 6 no entry

ItW on demand: 100.00%

ItW on access: 100.00%

False positives: 0

Stability: Stable

Lavasoft’s Ad-Aware has become a very regular participant in our tests of late, entering and passing every desktop test over the last couple of years. The current version is a little slow to install but in time produces a very clean and professional-looking interface with ample information and a decent level of fine-tuning. The package includes a ‘Web Companion’ component, and also sets the default search to an in-house secure search service.

Stability was good with just a single temporary problem – a message shortly after one install claiming that a required service was unavailable, which went away after a few minutes. Performance impact was rather high, our set of tasks taking a very long time to complete with most of the overhead linked to downloading files over HTTP; memory use was also rather high throughout.

Detection, aided by the Bitdefender engine, was very good with a slight drop into the later sets, and there were no issues in the certification sets, earning Lavasoft another VB100 award and continuing its run of success.

Main version: 1.1.107.0

Update versions: 90778, 90933, 91028, 91144

Last 6 tests: 0 passed, 4 failed, 2 no entry

Last 12 tests: 0 passed, 7 failed, 5 no entry

ItW on demand: 99.72%

ItW on access: 99.12%

False positives: 0

Stability: Fair

MSecure has been trying to regain VB100 certified status for some time now, having passed on its first attempt a few years back but not doing so well on recent attempts, with the main issue being a problem with the coverage of important file types. This time, we saw the usual speedy install, and a new interface with a simple, text-heavy design which provides a good amount of information and proved reasonably simple to navigate.

Stability was a little shaky, with a number of scans crashing out and on one occasion a blue screen incident. Performance impact wasn’t bad at all, with a reasonable time taken to get through our set of tasks, below average RAM use and very low CPU use.

Detection, assisted by an engine which has been kept unidentified at the request of the developers, was pretty good in the reactive sets, with no full RAP scores thanks to problems at the time of submission. Previous problems covering file types seem to have been resolved, but as with related product KYROL, a scattering of misses in the WildList sets was enough to deny MSecure a VB100 award this time around.

Main version: 11.1.2

Update versions: 0.12.0.163

Last 6 tests: 1 passed, 0 failed, 5 no entry

Last 12 tests: 3 passed, 0 failed, 9 no entry

ItW on demand: 100.00%

ItW on access: 100.00%

False positives: 0

Stability: Fair

Not the most regular of participants in the VB100 tests, Optenet products nevertheless seem to fare pretty well when they do take part, with a handful of passes scattered through the last few years. This time, installation didn’t take too long, and the GUI presented was another browser-based affair with the usual laggy moments. The layout is reasonably simple to operate, with a good basic set of controls.

Stability was a little shaky, with a number of crashes and errors, and on occasions there were some issues getting the protection operational. Performance impact measures showed a fairly noticeable slowdown through our set of activities, and somewhat high memory use.

Detection, aided by the Kaspersky engine, looked good in the reactive sets, but the proactive sets run offline proved a bridge too far, with repeated crashes meaning no data could be gathered. The core sets were handled better though, with no problems to report and a VB100 award is earned.

Main version: 15.1.0

Update versions: N/A

Last 6 tests: 3 passed, 0 failed, 3 no entry

Last 12 tests: 3 passed, 0 failed, 9 no entry

ItW on demand: 100.00%

ItW on access: 100.00%

False positives: 0

Stability: Solid

Panda’s second product this month has only appeared in our tests in the last year or so, but has managed to pass each time it has been entered so far. The installation process is very quick and easy, and the interface is another tiled effort which closely resembles the standard Windows 8 home screens. It provides some good info and basic configuration options, and seemed responsive and usable throughout testing.

Stability was impeccable, with no issues encountered. Performance data shows a fairly high impact on our set of tasks, but minimal use of memory and CPU cycles.

Detection in the reactive sets was a little below the high levels seen recently from Panda, but still respectable. With the product only able to function with a live Internet connection, our proactive test was skipped once again. The core sets presented no problems though, and Panda’s IS product also earns a VB100 award this month.

Main version: 1.0.0.53

Update versions: 1.0.0.53, 1.0.0.54

Last 6 tests: 1 passed, 2 failed, 3 no entry

Last 12 tests: 3 passed, 3 failed, 6 no entry

ItW on demand: 98.68%

ItW on access: 99.97%

False positives: 840

Stability: Fair

We’ve been testing PC Matic for a couple of years now, with varying results. With the standard approach, relying mainly on the ThreatTrack engine, we’ve seen a number of passes, while operating the tests in reverse (at the request of the developers in order to exercise the product’s built-in whitelisting component) has brought less success, mainly due to false positives, which are always an issue with whitelisting approaches. This month we were asked once again to try out the whitelisting method.

Installation of the product isn’t too slow, and the interface is much as we’ve seen in previous tests, emphasizing vulnerability warnings with minimal information on the anti-malware side up front. Configuration is also fairly minimal, but the basics are there.

Stability was not the best, with a number of unexpected restarts during high-stress testing, and a number of occasions when the protection shut down, although mercifully the machine managed to stay on. Performance impact wasn’t bad, with resource use and slowdown through our set of tasks both noticeable but well within acceptable bounds.

Detection was very strong indeed, with only a slight dip into the very last sets. In the core sets, however, there was a high number of false alarms, as well as a handful of WildList items not detected, meaning there was no VB100 award for PC Pitstop once again, but it was another interesting performance.

Main version: 1.1.4553.0

Update versions: 2.0.0.2071, 2.0.0.2093, 2.0.0.2103, 2.0.0.2116

Last 6 tests: 0 passed, 1 failed, 5 no entry

Last 12 tests: 0 passed, 1 failed, 11 no entry

ItW on demand: 75.76%

ItW on access: 73.71%

False positives: 68

Stability: Solid

This is our first look at a product from the Prodot group, which is best known in India as a major supplier of printer paper and ink and is now branching out into anti-malware. The product installed in a decent time and presented a clean and pleasant GUI with a fairly standard layout and lots of information presented clearly. A decent selection of configuration settings is also provided.

Stability was impeccable, the product behaving well throughout our tests including the high-stress sections. Performance impact was reasonable too, with our set of tasks running through fairly quickly and resource use not much above average.

Detection was not so great though, with fairly mediocre scores throughout our main detection test sets, and similar numbers in the WildList sets. There were a number of false positives too, meaning there is no VB100 award for Prodot at this time, but things look promising for the near future.

Main version: 16.00(9.0.0.16)

Update versions: N/A

Last 6 tests: 3 passed, 0 failed, 3 no entry

Last 12 tests: 8 passed, 1 failed, 3 no entry

ItW on demand: 100.00%

ItW on access: 100.00%

False positives: 0

Stability: Solid

A rather more familiar product for the test team, Quick Heal has only missed a handful of comparatives since its first appearance way back in 2002, and has maintained a good rate of passes over the years with things looking good for this time too after the success of the company’s business version. The current consumer incarnation takes quite a while to install and has a clean and simple green-on-white design with the usual tiled layout and ample configuration provided.

Stability was flawless, with no problems noted, while our set of tasks ran a little slowly with a fair amount of RAM and CPU usage.

Detection was decent, with a gradual downward trend through the main sets, and the core certification sets were handled nicely with no issues, thus earning Quick Heal its second VB100 award this month.

Main version: 9.0.6.9

Update versions: 1.1.107.0, 90778, 90933, 91028, 91144

Last 6 tests: 0 passed, 1 failed, 5 no entry

Last 12 tests: 0 passed, 1 failed, 11 no entry

ItW on demand: 99.52%

ItW on access: 99.18%

False positives: 0

Stability: Fair

Another sibling product from the MSecure family, SecuraLive has been submitted for testing a few times lately but so far has only reached one final report with no certifications earned as yet. Installation is rapid and easy, the interface clear and pleasant with a reasonable degree of fine-tuning available.

Stability was far from perfect, with a number of large scans failing to complete happily, but nothing more serious, and most of the issues only occurred under heavy stress. Performance impact was minimal, with all our measures very close to our Windows Defender baseline.

Detection was strong where it could be measured, with once again no offline statistics, and there were no issues in the clean sets either, but as expected a handful of WildList samples were undetected, meaning there was no VB100 award for SecuraLive this month, but chances look good for a pass sometime soon.

Main version: 8.0

Update versions: 5649, 5670, 5682, 5689

Last 6 tests: 0 passed, 2 failed, 4 no entry

Last 12 tests: 0 passed, 2 failed, 10 no entry

ItW on demand: 20.26%

ItW on access: 3.25%

False positives: 8

Stability: Fair

Another relative newcomer, SmartCop also has only a single appearance under its belt so far, although again it has been submitted on a number of other occasions without progressing far into the testing process. This time the set-up was pretty speedy, bringing up a rather lurid orange interface, a little old-school in design but clear and intuitive to operate, with a decent range of controls.

Stability was not perfect, with a number of issues with updating and some problems with the protection component including a single blue screen incident, although all were only apparent when under heavy stress. Performance measures show RAM use and time taken to complete our set of tasks very close to the baselines, although CPU use was off the chart.

Detection was once again fairly poor with very little coverage in either our detection sets or the official WildList set. On-access scores were worryingly lower than those on demand, and there were a number of false alarms in our clean sets too, including items from Adobe, Sony and parts of the popular OpenOffice suite. So there is still no VB100 certification for SmartCop, but we did at least manage to complete a full set of tests.

Main version: 8.0.6.2

Update versions: 3.9.2600.2/37674, 3.9.2623.2/38502, 8.2.059/38720, 38934

Last 6 tests: 2 passed, 1 failed, 3 no entry

Last 12 tests: 6 passed, 1 failed, 5 no entry

ItW on demand: 99.98%

ItW on access: 99.98%

False positives: 0

Stability: Fair

ThreatTrack’s VIPRE has been doing pretty well in VB100 tests over the last few years, with several strings of passes, usually interspersed with periods of absence rather than failed tests. This month, we set up the product without much difficulty or waiting, and got to see another very slick and professional interface with the fashionable sharp corners everywhere. Configuration remains limited, but accessible.

Stability was a little questionable, with a number of scans failing, crashes and other odd behaviours throughout the test period. Our set of activities took a long time to get through, although resource use was not excessive.

Detection was very strong in the reactive sets, tailing off somewhat into the later weeks. The clean sets were well handled, but in the WildList set there were a couple of items missed in the first round of testing, meaning there can be no VB100 award for ThreatTrack this time despite better coverage in later runs.

Main version: 9.0.0.338

Update versions: 3.0.2.1015/2015.2.18.9, 9.0.0.344/2015.3.13.9, 2015.3.23.8, 9.0.0.338/2015.3.27.14

Last 6 tests: 2 passed, 0 failed, 4 no entry

Last 12 tests: 2 passed, 2 failed, 8 no entry

ItW on demand: 100.00%

ItW on access: 100.00%

False positives: 0

Stability: Stable

Our history for Total Defense is a little convoluted, thanks to the product’s evolution from earlier CA solutions, but recently at least it has shown a return to form after a couple of less impressive performances last year. Set-up was a little drawn out but got there eventually, showing a new look with large tiles covering security, parental controls and PC performance tuning, among other things.

Stability was decent, with a few issues with some updates but nothing very serious at all. Performance impact was OK too, with average memory consumption and slowdown of our set of tasks, and very low use of CPU.

Detection was also decent, with good levels across the sets, and with no issues in the core certification sets a VB100 award is well deserved by Total Defense.

Main version: 15.0.1.5424

Update versions: N/A

Last 6 tests: 4 passed, 0 failed, 2 no entry

Last 12 tests: 9 passed, 0 failed, 3 no entry

ItW on demand: 100.00%

ItW on access: 100.00%

False positives: 0

Stability: Stable

Finally this month, we come to TrustPort, another old stager with a pretty reliable record of passes and some exceptionally high detection scores through the years. Getting set up was fairly speedy and painless, and the interface once again uses the tiled style, looking pretty simple and clean on the surface but providing a good depth of configuration underneath.

Stability was OK, although we observed a few issues with logging at times and some strange windowing behaviour. Performance impact was good, with low resource use and a reasonable time taken to complete our set of tasks.

Detection is what it’s all about for TrustPort fans though, and once again they will not be disappointed with some excellent detection rates across our sets. With the core certification sets handled nicely too, a VB100 award is easily earned by TrustPort.

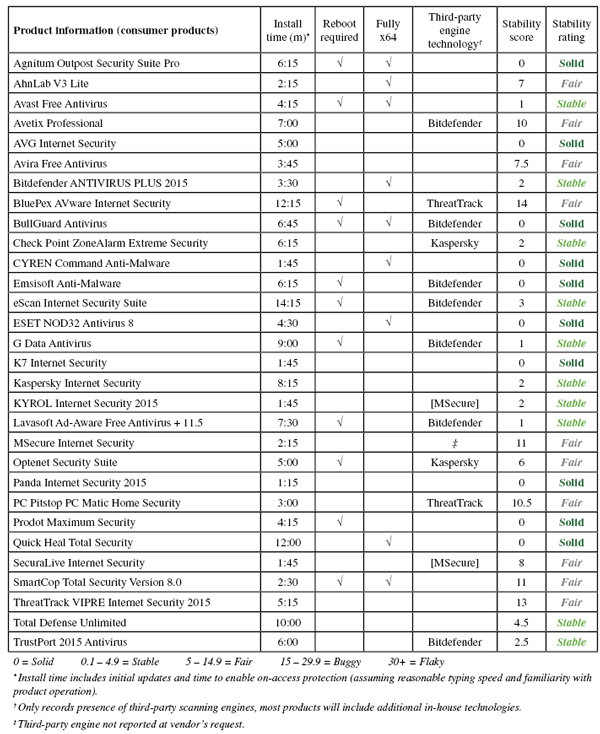

As usual, a number of solutions were submitted for testing but did not make their way to the final report, this month including products from Qihoo and Tencent.

This report is published rather late thanks to a number of issues, not least a rather higher than usual number of stability problems across a wide range of products, many of which meant lots of extra work for the lab team. Streamlining our performance measures somewhat should help us deal with this sort of thing in future, but as ever when changes are made, there is plenty to go wrong the first time.

The VB100 pass rate was decent once again, although not quite up to the perfect or near-perfect fields we’ve seen in a few recent tests. Unexpectedly, we saw quite a few products not managing to cover the full WildList set, and as usual false positives contributed to several fails.

With this report finally wrapped up, we have a vast amount of much more granular data from our expanded performance tests, which we can now start to analyse in order to work out how to present it in the clearest and simplest way. Meanwhile, the next comparative, on Windows Server 2012, has already been completed and the results are being processed, while the following one, on Windows 7, is well under way. With Windows 10 on the horizon we could be in for some big changes, and we will keep working on our testing processes to keep up.

Test environment. All tests were run on identical systems with AMD A6-3670K Quad Core 2.7GHz processors, 4GB DUAL DDR3 1600MHz RAM, dual 500GB and 1TB SATA hard drives and gigabit networking, running Microsoft Windows 8.1 64-bit Professional Edition.

Any developers interested in submitting products for VB's comparative reviews, or anyone with any comments or suggestions on the test methodology, should contact [email protected]. The current schedule for the publication of VB comparative reviews can be found at http://www.virusbtn.com/vb100/about/schedule.xml.

Some minor adjustments were made to our stability classification scheme this month, and as the full details of the methodology have not yet made their way into our main online descriptions, they are included here for reference.

The aim of this system is to provide a guide to the stability and quality of products participating in VB100 comparative reviews. It is designed only to cover areas of product performance observed during VB100 testing, and all bugs noted must be observed during the standard process of carrying out VB100 comparative tests; thus all products should have an equal chance of displaying errors or problems present in the areas covered.

The system classifies products on a five-level stability scale. The five labels indicate the following stability status:

Solid – the product displayed no issues of any kind during testing.

Stable – a small number of minor or very minor issues were noted, but the product remained stable and responsive throughout testing.

Fair – a number of minor issues, or very few serious but not severe issues, were noted but none that threatened to compromise the functioning of the product or the usability of the system.

Buggy – many small issues, several fairly serious ones or a few severe problems were observed; the product or system may have become unresponsive or required rebooting when under heavy stress.

Flaky – there were a number of serious or severe issues which compromised the operation of the product, or rendered the test system unusable.

Bugs and problems are classified as very minor, minor, serious, severe and very severe. The following is an incomplete list of examples of each category:

Very minor – error messages displayed but errors not impacting product operation or performance; updates or other activities failing cleanly (with alert to user) but working on second attempt; display issues.

Minor – minor (non-default) product options not functioning correctly; product interface becoming unresponsive for brief periods (under 30 seconds).

Serious – scan crashes or freezes; product interface freezes, or interface becoming unresponsive for long periods (more than 30 seconds, with protection remaining active); scans failing to produce accurate reporting of findings; product ignoring configuration in a way which could damage data.

Severe – system becoming unresponsive; system requiring reboot; protection being disabled or rendered ineffective.

Very severe – BSOD; system unusable; product non-functional.

Bugs will be counted as the same issue if a similar outcome is noted under similar circumstances. For each bug treated as unique, a raw score of one point will be accrued for very minor problems, two points for minor problems, five points for serious problems, 10 points for severe problems, and 20 points for very severe problems. These raw scores will then be adjusted depending on two additional factors: bug repeatability and bug circumstances.

All issues should be double-checked to test reproducibility. Issues will be classed as ‘reliably reproducible’ if they can be made to re-occur every time a specific set of circumstances is applied; ‘partially reproducible’ if the problem happens sometimes but not always in similar situations; ‘occasional’ if the problem occurs in less than 10% of similar tests; and ‘one-off’ if the problem does not occur more than twice during testing, and not more than once under the same or similar circumstances. One-off and occasional issues will have a points multiplier of x0.5; reliably reproducible issues will have a multiplier of x2.

As some of our tests apply unusually high levels of stress to products, this will be taken into account when calculating the significance of problems. Those that occur only during high-stress tests using unrealistically large numbers of malware samples will be given a multiplier of x0.5.
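The points scheme described above can be illustrated with a short sketch. This is not the lab's own tooling, only one possible reading of the text: the names `Bug`, `bug_score` and `product_score` are hypothetical, the multiplier of x1 for 'partially reproducible' issues is an assumption (the text gives no figure for that class), and it is also assumed that the multipliers are combined by multiplication and that a product's total is the sum over its unique bugs.

```python
from dataclasses import dataclass

# Raw points per severity level, as listed in the text.
RAW_POINTS = {
    "very minor": 1,
    "minor": 2,
    "serious": 5,
    "severe": 10,
    "very severe": 20,
}

# Repeatability multipliers. The text gives x0.5 for one-off and occasional
# issues and x2 for reliably reproducible ones. The x1 value for partially
# reproducible issues is an assumption, as no multiplier is stated for it.
REPEAT_MULTIPLIER = {
    "one-off": 0.5,
    "occasional": 0.5,
    "partially reproducible": 1.0,  # assumed
    "reliably reproducible": 2.0,
}

# Applied to issues seen only during high-stress tests.
HIGH_STRESS_MULTIPLIER = 0.5


@dataclass(frozen=True)
class Bug:
    """One unique issue observed during testing (hypothetical record type)."""
    severity: str
    repeatability: str
    high_stress_only: bool = False


def bug_score(bug: Bug) -> float:
    """Raw points adjusted for repeatability and test circumstances."""
    score = RAW_POINTS[bug.severity] * REPEAT_MULTIPLIER[bug.repeatability]
    if bug.high_stress_only:
        score *= HIGH_STRESS_MULTIPLIER
    return score


def product_score(bugs) -> float:
    """Total adjusted score for a product (summing is an assumption)."""
    return sum(bug_score(b) for b in bugs)


if __name__ == "__main__":
    observed = [
        # A single blue screen seen once, only under heavy stress.
        Bug("very severe", "one-off", high_stress_only=True),
        # A non-default option that fails every time it is used.
        Bug("minor", "reliably reproducible"),
    ]
    for b in observed:
        print(b.severity, b.repeatability, bug_score(b))
    print("total:", product_score(observed))
```

Under these assumptions, a one-off blue screen seen only in high-stress testing counts for 20 x 0.5 x 0.5 = 5 points, while a reliably reproducible minor issue counts for 2 x 2 = 4 points. The mapping from a total score to one of the five stability labels is not given in this section, so the sketch stops at the adjusted score.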