2015-04-24

Abstract

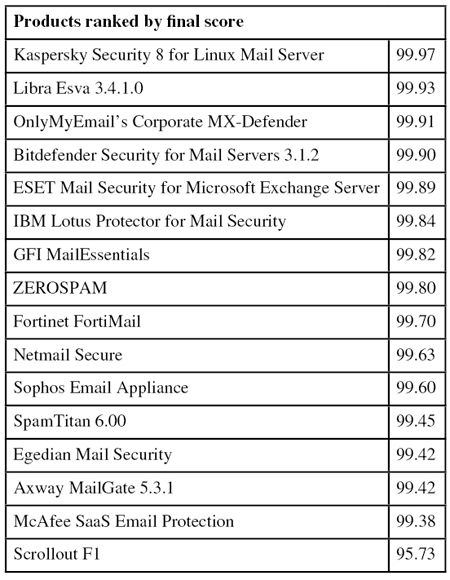

This VBSpam test completes six full years of VB's comparative anti-spam testing. Sixteen full solutions and a number of DNS-based blacklists were submitted on this occasion, and all but one of the full solutions achieved a VBSpam award, with seven of them achieving a VBSpam+ award. Martijn Grooten has the details.

Copyright © 2015 Virus Bulletin

This month we complete six years of comparative anti-spam testing. Actually, it is six years and a month – a number of issues have caused this report to be delayed by several weeks, so I will keep the introduction short.

In these six years we have seen the spam landscape change in a number of ways. Firstly, while most spam continues to be sent from compromised machines, these days, those machines are often compromised Linux servers rather than hijacked Windows PCs. It’s no longer your grandparents’ XP machine that is responsible for the sending of spam, but your geeky cousin’s web server.

Secondly, spammers are using more and more hijacked resources, from webmail accounts – an example of which we saw in this test – to domains. This makes it a lot harder for a spam filter to be absolutely certain that an email is spam.

I have said on a number of occasions in these reports that spam as a problem is actually very well mitigated: the threat of spam making email unusable doesn’t seem particularly realistic at the moment. Still, being mitigated successfully isn’t the same as being solved; hence we continue to need spam filters as much as before.

With our VBSpam setup, we continue to provide customers with information on which products perform particularly well. At the same time, we help developers of those products to make their products better.

Sixteen full solutions and a number of DNS-based blacklists were submitted for this test. All but one of the full solutions achieved a VBSpam award, and seven of them achieved a VBSpam+ award.

The VBSpam test methodology can be found at http://www.virusbtn.com/vbspam/methodology/. As usual, emails were sent to the products in parallel and in real time, and products were given the option to block email pre-DATA (that is, based on the SMTP envelope and before the actual email was sent). However, no products chose to make use of this option on this occasion.

For those products running on our equipment, we use Dell PowerEdge machines. As different products have different hardware requirements – not to mention those running on their own hardware, or those running in the cloud – there is little point comparing the memory, processing power or hardware the products were provided with; we followed the developers’ requirements and note that the amount of email we receive is representative of that received by a small organization.

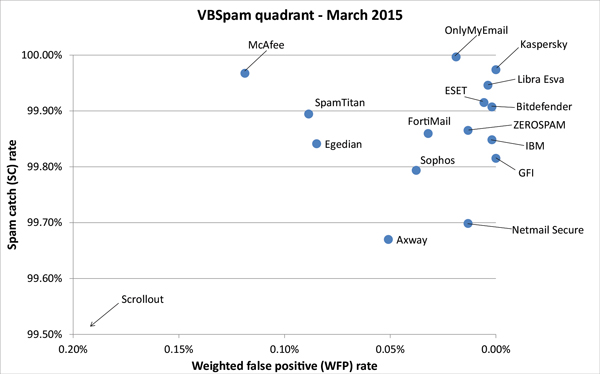

To compare the products, we calculate a ‘final score’, which is defined as the spam catch (SC) rate minus five times the weighted false positive (WFP) rate. The WFP rate is defined as the false positive rate of the ham and newsletter corpora taken together, with emails from the latter corpus having a weight of 0.2:

WFP rate = (#false positives + 0.2 * min(#newsletter false positives, 0.2 * #newsletters)) / (#ham + 0.2 * #newsletters)

Products earn VBSpam certification if the value of the final score is at least 98:

SC - (5 x WFP) ≥ 98

Meanwhile, products that combine a spam catch rate of 99.5% or higher with a lack of false positives and no more than 2.5% false positives among the newsletters earn a VBSpam+ award.
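The scoring rules above can be sketched in a few lines of Python. This is an illustrative sketch rather than the tooling used for the test: the function names are hypothetical, and the newsletter false positive count in the example (12) is derived from the 3.9% rate quoted in this report, not stated directly.

```python
def wfp_rate(ham_fps, newsletter_fps, ham_total, newsletter_total):
    """Weighted false positive rate, as a percentage."""
    # Newsletter false positives are capped at 20% of the newsletter corpus
    # and then weighted by 0.2, following the formula in the text.
    capped = min(newsletter_fps, 0.2 * newsletter_total)
    weighted_fps = ham_fps + 0.2 * capped
    weighted_total = ham_total + 0.2 * newsletter_total
    return 100.0 * weighted_fps / weighted_total


def final_score(sc_rate, wfp):
    """Final score: spam catch rate minus five times the WFP rate."""
    return sc_rate - 5.0 * wfp


def award(sc_rate, ham_fps, newsletter_fps, ham_total, newsletter_total):
    """Return 'VBSpam+', 'VBSpam' or 'none' according to the stated criteria."""
    wfp = wfp_rate(ham_fps, newsletter_fps, ham_total, newsletter_total)
    newsletter_fp_rate = 100.0 * newsletter_fps / newsletter_total
    if sc_rate >= 99.5 and ham_fps == 0 and newsletter_fp_rate <= 2.5:
        return "VBSpam+"
    if final_score(sc_rate, wfp) >= 98.0:
        return "VBSpam"
    return "none"


# Example: SC rate 99.67%, three ham false positives, 12 newsletter false
# positives (assumed from the 3.9% rate), 10,549 ham emails, 309 newsletters.
score = final_score(99.67, wfp_rate(3, 12, 10_549, 309))
print(round(score, 2))                       # 99.42
print(award(99.67, 3, 12, 10_549, 309))      # VBSpam
```

Because the WFP rate is multiplied by five, even a handful of blocked legitimate emails lowers the final score far more than the same number of missed spam emails.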

The test started on Saturday 14 February at 12am and finished 16 days later, on Monday 2 March at 12am. This time there were no serious issues affecting the test.

The test corpus consisted of 136,904 emails. 126,046 of these emails were spam, 61,519 of which were provided by Project Honey Pot, with the remaining 64,527 emails provided by spamfeed.me, a product from Abusix. They were all relayed in real time, as were the 10,549 legitimate emails (‘ham’) and 309 newsletters.
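As a worked example of how the spam catch rates in this report relate to the corpus size, the following minimal Python sketch converts a count of missed spam emails into a catch rate. The function name is hypothetical; the three missed counts are ones quoted for individual products elsewhere in this report.

```python
SPAM_TOTAL = 126_046  # spam emails in this test's corpus


def catch_rate(missed, total=SPAM_TOTAL):
    """Spam catch rate as a percentage, given the number of missed spam emails."""
    return 100.0 * (total - missed) / total


for missed in (33, 117, 233):
    print(missed, round(catch_rate(missed), 2))
# 33 99.97
# 117 99.91
# 233 99.82
```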

Figure 1 shows the catch rate of all full solutions throughout the test. To avoid the average being skewed by poorly performing products, the highest and lowest catch rates have been excluded for each hour.
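The averaging described here, in which the highest and lowest values are dropped before taking the mean, can be sketched as follows. This is only an illustration of the idea: the function name is hypothetical and the hourly catch rates in the example are made up, not taken from the test.

```python
def trimmed_mean(rates):
    """Average of `rates` with one highest and one lowest value removed."""
    if len(rates) < 3:
        raise ValueError("need at least three values to drop the extremes")
    ordered = sorted(rates)
    kept = ordered[1:-1]  # drop the single lowest and single highest value
    return sum(kept) / len(kept)


# Made-up catch rates (in %) of five products during one hour.
hour = [99.9, 99.8, 100.0, 92.0, 99.7]
print(round(sum(hour) / len(hour), 2))  # plain mean: 98.28
print(round(trimmed_mean(hour), 2))     # trimmed mean: 99.8
```

In this made-up hour, a single poorly performing product pulls the plain mean down by about 1.5 percentage points, while the trimmed mean is unaffected.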

One immediately notices that there were a few periods during the test where the average performance was rather poor. In fact, this was the result of a single spam campaign (see Figure 2), which caused problems for almost all products in the test.

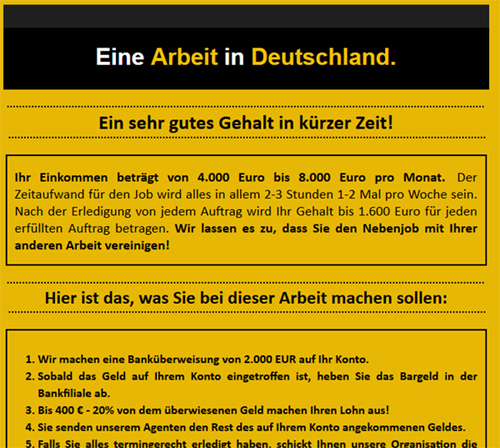

Figure 2. A single spam campaign targeting German users caused problems for almost all products in the test.

The campaign targets users in Germany and isn’t too hard to spot as job recruitment spam. In fact, the promise of receiving a fair amount of money in return for very little work suggests that the spammers are looking for money mules.

What makes these emails difficult to filter is that they were sent from Outlook.com accounts that were likely generated for the purpose (rather than taken over from actual users). Although we estimate that at least tens of thousands of email addresses were used in this campaign, this might be a small enough number to stay under the radar of both Microsoft’s outbound spam filters and most inbound filters like the ones in this test.

SC rate: 99.67%

FP rate: 0.03%

Final score: 99.42

Project Honey Pot SC rate: 99.56%

Abusix SC rate: 99.78%

Newsletters FP rate: 3.9%

Compared to the previous test, Axway’s MailGate virtual appliance saw its spam catch rate decrease slightly. What stood out among the missed spam were a few campaigns in (Brazilian) Portuguese, as well as a campaign in which the subject lines were two random English words and the content was nothing but a link to a page on a compromised website.

At 99.67%, the product still blocked more than 299 out of every 300 spam emails. There were three false positives, so the clean sheet it achieved in the last test wasn’t repeated, but the newsletter false positive rate went down. All in all, Axway fully deserves yet another VBSpam award, this one completing a full year of such awards.

SC rate: 99.91%

FP rate: 0.00%

Final score: 99.90

Project Honey Pot SC rate: 99.96%

Abusix SC rate: 99.86%

Newsletters FP rate: 0.3%

The last test saw Bitdefender missing out on a VBSpam+ award for the first time in more than two years. That appears only to have been a temporary glitch though, as on this occasion the Romanian product yet again avoided false positives, while missing only one email in the newsletter category: a notification from Twitter.

The spam catch rate did drop a little – most interesting among the 117 missed spam emails were several where the payload seemed to be missing – but at 99.91% remained very high. Not only does Bitdefender complete six full years of testing without missing a single VBSpam award, the company also achieves its 13th VBSpam+ award.

SC rate: 99.84%

FP rate: 0.08%

Final score: 99.42

Project Honey Pot SC rate: 99.84%

Abusix SC rate: 99.84%

Newsletters FP rate: 1.6%

Egedian has had a bit of an unlucky run recently, missing out on a VBSpam award twice in a row, first due to a high false positive rate and then because the product’s spam catch rate dropped significantly. It’s third time lucky for the French product though; or rather, the developers worked hard to get things right this time.

There were only 200 missed spam emails – most of which were emails that were missed by the majority of solutions – while neither the false positive rate nor the number of blocked newsletters was too high. Hence Egedian is well deserving of a VBSpam award this time.

SC rate: 99.92%

FP rate: 0.00%

Final score: 99.89

Project Honey Pot SC rate: 99.87%

Abusix SC rate: 99.96%

Newsletters FP rate: 1.0%

ESET’s commendable performance in the last review was not a one-off occurrence, as this test shows. Yet again, the Exchange-based product didn’t miss any legitimate emails, while only three newsletters were erroneously blocked.

At the same time, there were only 107 emails among the eclectic mix of missed spam – a small improvement compared to the previous test. Thus another VBSpam+ award – already the product’s eighth – goes to ESET.

SC rate: 99.86%

FP rate: 0.03%

Final score: 99.70

Project Honey Pot SC rate: 99.84%

Abusix SC rate: 99.88%

Newsletters FP rate: 0.7%

The VBSpam history for Fortinet’s FortiMail appliance goes all the way back to the second test we ever ran, in June 2009, and we have run the very same appliance in all 35 tests. In none of these tests has FortiMail missed out on a VBSpam award, and since the introduction of the VBSpam+ awards a few years ago, the product has snatched up a few of those too.

In the last test, it was a relatively high number of false positives among the newsletters that prevented the product from winning another VBSpam+ award. That wasn’t a problem this time – there were only two misclassifications – but three false positives in the ham corpus meant that yet again, we had to deny the product a VBSpam+ award. However, with a spam catch rate of more than 99.85%, FortiMail had no problem achieving its 35th VBSpam award.

SC rate: 99.82%

FP rate: 0.00%

Final score: 99.82

Project Honey Pot SC rate: 99.72%

Abusix SC rate: 99.90%

Newsletters FP rate: 0.0%

This month, GFI MailEssentials completes two dozen participations in the VBSpam test. The product has never failed to achieve a VBSpam award, and recently has found itself among the better performers.

In this test, MailEssentials missed 233 spam emails – resulting in a decent spam catch rate of 99.82% – and that’s all that went wrong: it was one of only two products that had no false positives in either the ham corpus or the newsletter corpus. Another VBSpam+ award for GFI’s Maltese developers – their sixth already – is thus very well deserved.

SC rate: 99.85%

FP rate: 0.00%

Final score: 99.84

Project Honey Pot SC rate: 99.71%

Abusix SC rate: 99.98%

Newsletters FP rate: 0.3%

IBM’s Lotus Protector product missed fewer than 200 spam emails in this test, and it was interesting to see a fair number of duplicates among those emails. We don’t give discounts for duplicates – after all, part of the problem of spam is its volume – but even with those duplicates, a 99.85% spam catch rate is very good.

What’s more, IBM didn’t miss a single legitimate email, and only missed one newsletter. Not only does that mean the industry giant achieves its second VBSpam+ award, but it does so with its best final score to date.

SC rate: 99.97%

FP rate: 0.00%

Final score: 99.97

Project Honey Pot SC rate: 99.96%

Abusix SC rate: 99.99%

Newsletters FP rate: 0.0%

The last test was a good one for Kaspersky’s Linux Mail Server product, as it combined a total lack of false positives with a spam catch rate of over 99.9%, but in this test it outdid itself. Yet again, the solution didn’t miss a single email from either the ham or the newsletter corpus, and it combined this with missing only 33 spam emails (about half of which were written in Japanese).

Clearly, this means that yet another VBSpam+ award is earned by Kaspersky, and the fact that it finished this test with the highest final score adds a little extra glitter to that award.

SC rate: 99.95%

FP rate: 0.00%

Final score: 99.93

Project Honey Pot SC rate: 99.96%

Abusix SC rate: 99.93%

Newsletters FP rate: 0.7%

Libra Esva missed 68 spam emails in this test. That’s more than the virtual appliance has missed in a while, but almost all of these were also missed by most other products. Moreover, the virtual appliance didn’t block a single legitimate email (yet again).

Add to that only two blocked newsletters – one from the US and one from Belgium – and with a second highest final score, Libra Esva completes its first full dozen VBSpam+ awards.

SC rate: 99.97%

FP rate: 0.11%

Final score: 99.38

Project Honey Pot SC rate: 99.94%

Abusix SC rate: 100.00%

Newsletters FP rate: 1.0%

Missing just 40 spam emails – fewer than all but two other solutions – the spam catch rate of McAfee’s SaaS solution was even higher than it was in the last test and certainly impressive.

Against this stood 12 false positives from six senders, which is on the high side, especially given how well most products have been dealing with the ham feed in recent tests. Nevertheless, the product still achieves a VBSpam award without any difficulty.

SC rate: 99.70%

FP rate: 0.00%

Final score: 99.63

Project Honey Pot SC rate: 99.52%

Abusix SC rate: 99.87%

Newsletters FP rate: 2.3%

There was a small decrease in the spam catch rate for Netmail Secure this month, but nothing really to worry about. Yet again I noticed spam in East Asian languages being prevalent among those spam emails that made it past the spam filter.

More importantly, Netmail didn’t block any emails from the ham corpus, while the newsletter false positive rate decreased a little (interestingly, all but one of the missed newsletters were sent through MailChimp), which meant that Netmail Secure earned a VBSpam+ award this time.

SC rate: 100.00%

FP rate: 0.02%

Final score: 99.91

Project Honey Pot SC rate: 99.99%

Abusix SC rate: 100.00%

Newsletters FP rate: 0.0%

It has been a while (November 2013, to be precise) since OnlyMyEmail last blocked an email from the ham corpus. This month, it blocked two, albeit both from the same sender.

That is neither worrying nor shocking, especially as the product didn’t block any newsletters and only missed four out of more than 125,000 emails from the spam feed – and these were in fact four instances of the same email. There may be no VBSpam+ award for the Michigan-based hosted solution this time, but with the third highest final score in the test, the product’s developers have plenty to be pleased about.

SC rate: 99.19%

FP rate: 0.58%

Final score: 95.73

Project Honey Pot SC rate: 99.30%

Abusix SC rate: 99.08%

Newsletters FP rate: 24.3%

Scrollout F1 is a free and open source anti-spam solution, one that we have been testing for a little over four years. It has picked up several VBSpam awards along the way, but showed some issues in the last test, in particular a high false positive rate.

This time, things actually got a little worse. More than one in every 200 legitimate emails was blocked by the product, while the spam catch rate fell too. 99.19% might not be too bad for a catch rate, but it was by far the lowest among participating solutions. We hope that some changes made to the product’s settings will be able to turn the tide for the next test; this time, we couldn’t give Scrollout a VBSpam award.

SC rate: 99.79%

FP rate: 0.04%

Final score: 99.60

Project Honey Pot SC rate: 99.73%

Abusix SC rate: 99.86%

Newsletters FP rate: 0.0%

Sophos’s Email Appliance has traditionally been strong when it comes to avoiding false positives among newsletters; in fact, in this test it avoided them altogether, while erroneously blocking four legitimate emails.

The latter means we couldn’t give Sophos a VBSpam+ award, but with an overall decent performance, the appliance continues its unbroken run of more than 30 VBSpam awards.

SC rate: 99.89%

FP rate: 0.08%

Final score: 99.45

Project Honey Pot SC rate: 99.90%

Abusix SC rate: 99.89%

Newsletters FP rate: 2.3%

A low catch rate meant that, earlier this year, SpamTitan missed its first VBSpam award in more than five years of VBSpam participation. In this test, the Irish virtual solution proved that this was really a one-off thing: the product missed just 133 spam emails – a catch rate of almost 99.9% – among which several fake tax refund emails stood out the most.

There were also eight false positives – all in English – which means that there was no VBSpam+ award this time, but the product’s 32nd VBSpam award is as well deserved as ever.

SC rate: 99.87%

FP rate: 0.01%

Final score: 99.80

Project Honey Pot SC rate: 99.85%

Abusix SC rate: 99.88%

Newsletters FP rate: 0.7%

In the VBSpam test, we take a liberal view of emails: if a real person meant to send an email – and if the recipient opted in to receiving it – we think it acceptable for the email to be included in the test, even if it didn’t follow best practices. This was certainly the case for the single false positive for ZEROSPAM in this test: an email from Costa Rica which was sent from an IP address without a valid PTR record.

ZEROSPAM doesn’t earn another VBSpam+ award, but with just 170 missed spam emails, the hosted solution that operates from Canada easily wins its 19th VBSpam award in as many tests.

SC rate: 57.67%

FP rate: 0.63%

Final score: 54.54

Project Honey Pot SC rate: 71.26%

Abusix SC rate: 44.71%

Newsletters FP rate: 0.7%

SC rate: 90.79%

FP rate: 0.00%

Final score: 90.79

Project Honey Pot SC rate: 83.81%

Abusix SC rate: 97.44%

Newsletters FP rate: 0.0%

SC rate: 95.98%

FP rate: 0.63%

Final score: 92.85

Project Honey Pot SC rate: 93.65%

Abusix SC rate: 98.20%

Newsletters FP rate: 0.7%

It has been mentioned in these reports before that URL shorteners are a huge pain for spam filters in general and domain-based blocklists in particular: they are commonly used in spam to hide the real destination of a link, but are also occasionally used by legitimate senders to make the links in their emails look prettier.

The high false positive rate for the Spamhaus DBL – and thus also for the combined ZEN+DBL list – was largely due to such shorteners. Thankfully, the blacklist sends a special response for such URLs, so administrators who include the DBL in their anti-spam solution could easily prevent such URLs from being blocked. This might of course come at the cost of some extra false negatives.
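How an administrator might act on such a special response can be sketched as below. This is a hedged illustration, not Spamhaus's or any product's implementation: the function name and verdict labels are hypothetical, the DNS lookup itself is left out, and the return code `127.0.1.3` is an assumption for illustration only. The actual codes should be taken from the blocklist's own documentation.

```python
# ASSUMPTION: the code(s) the blocklist returns for URL shorteners or
# redirectors. The value below is a placeholder to be checked against the
# blocklist's documentation.
SHORTENER_CODES = frozenset({"127.0.1.3"})


def dbl_verdict(answer, exempt_codes=SHORTENER_CODES):
    """Decide how to treat a domain given a domain blocklist answer.

    `answer` is the A-record value returned by the blocklist lookup,
    or None when the domain is not listed.
    """
    if answer is None:
        return "pass"        # domain not listed
    if answer in exempt_codes:
        return "shortener"   # listed, but do not block on this signal alone
    return "block"           # any other listing


print(dbl_verdict(None))         # pass
print(dbl_verdict("127.0.1.3"))  # shortener
print(dbl_verdict("127.0.1.2"))  # block
```

Treating the shortener response as a weaker signal, rather than ignoring it entirely, is one way to limit the extra false negatives mentioned above.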

Of course, some may opt only to use the IP-based blacklists combined in ZEN. It is worth noting that those lists didn’t have any false positives, yet still blocked more than nine out of ten spam emails based on the sending IP address alone.

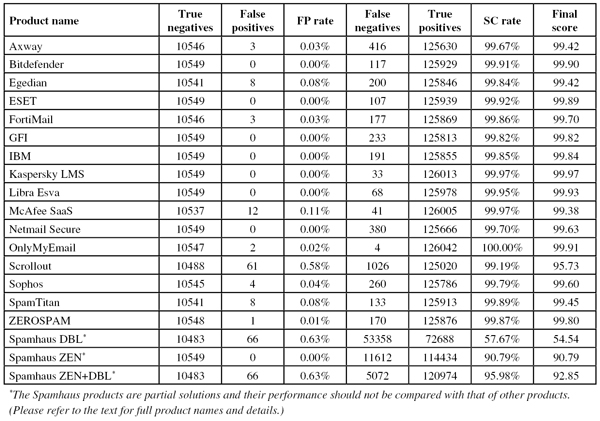

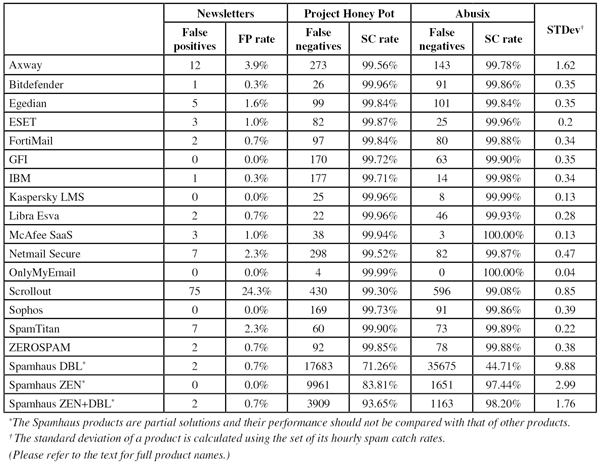

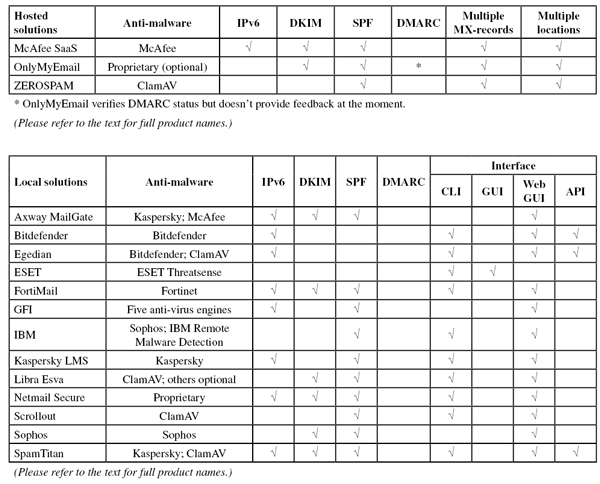

With one exception, this test once again demonstrated how well anti-spam solutions block spam. However, the dozens of emails sent from specially created Outlook.com accounts show that there remain options for spammers to bypass most spam filters, even if these options might not scale too well.

The next VBSpam test will run in April 2015 (and is about to start at the time of writing this report), with the results scheduled for publication in May. Developers interested in submitting products should email [email protected].