2013-07-26

Abstract

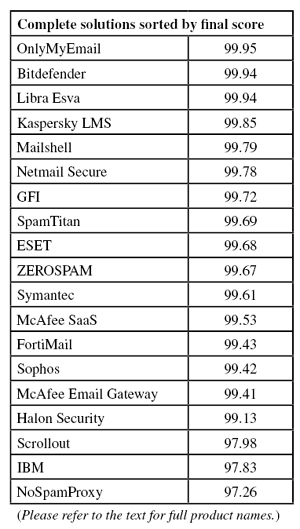

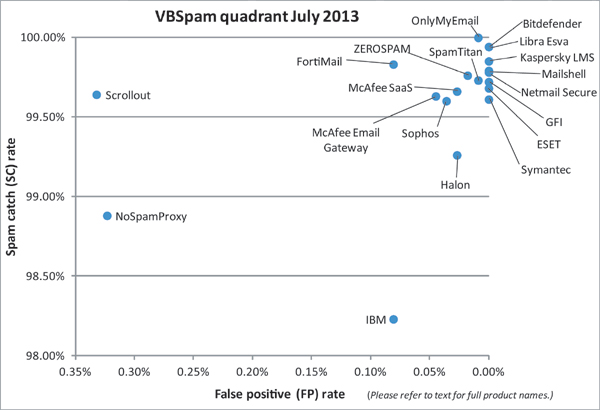

This month’s VBSpam results were a bit of a mixed bag, with no fewer than eight VBSpam+ awards (and eight standard VBSpam awards), but also three full solutions failing to meet the standard for certification.

Copyright © 2013 Virus Bulletin

As I begin this review, the royal baby that has been keeping the United Kingdom guessing for almost nine months is reported to be on its way. And, with the world’s attention focusing on the Duchess of Cambridge, security experts are anticipating the inevitable new scams that will exploit interest in the soon-to-be-born prince or princess.

Indeed, millions of emails and social media posts can be expected to use the royal baby as bait, promising some new angle on the story and leading to a survey scam at best or, in a worse but perhaps more likely scenario, to a malware download.

One would thus be forgiven for believing that those tasked with developing spam filters are keeping a close eye on their spam traps – adding new rules as soon as royal baby spam starts to arrive. However, this is unlikely to be the case.

Most spam filters block well over 99% of the spam contained in our corpus. Such high scores would not be achievable if new rules constantly needed to be added manually. Of course, in some cases manual intervention is necessary to make sure a particular campaign isn’t missed, but most spam filtering happens fully automatically, based on a large number of sensors and algorithms. Spam filters are constantly being worked on, but this is to improve the algorithms that proactively block new spam campaigns, rather than to reactively block what has already been sent.

The VBSpam tests don’t measure how quickly participating vendors are able to react to new campaigns, but rather how good their products are – which is what really matters. So even if the royal baby’s arrival is accompanied by a billion-email spam run, some developers may not even notice these spam emails: they simply don’t have to.

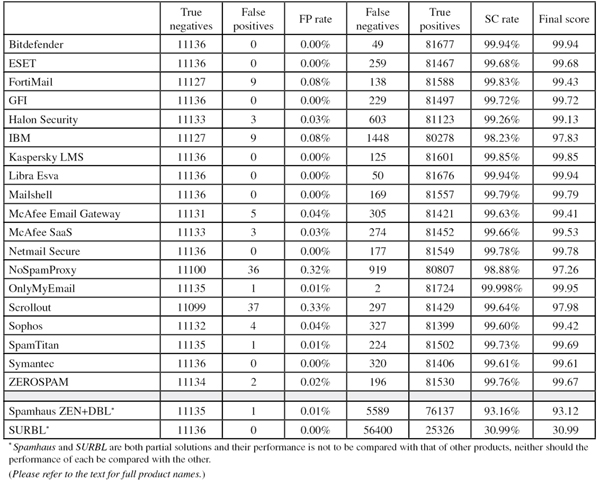

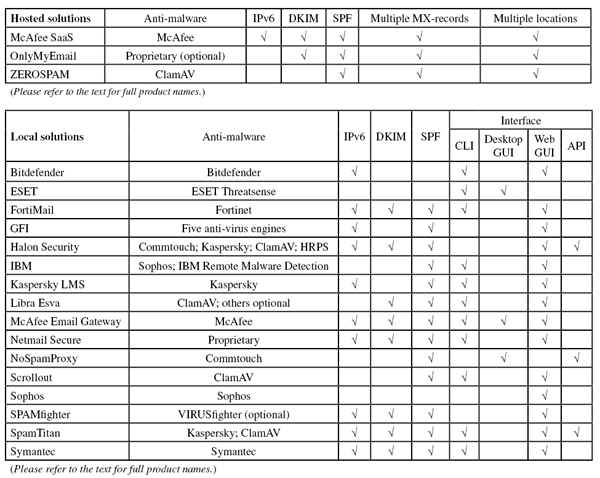

This month we tested 19 full anti-spam solutions, as well as two DNS-based blacklists. The results were a bit of a mixed bag: we handed out no fewer than eight VBSpam+ awards, while another eight full solutions achieved a standard VBSpam award. However, three full solutions failed to meet the standard for certification.

The VBSpam test methodology can be found at http://www.virusbtn.com/vbspam/methodology/. As usual, emails were sent to the products in parallel and in real time, and products were given the option to block email pre-DATA – that is, based on the SMTP envelope and before the actual email was sent. Four products chose to make use of this option.

For the products that run on our equipment, we use Dell PowerEdge machines. As different products have different hardware requirements (not to mention those running on their own hardware, or those running in the cloud) there is little point comparing the memory, processing power or hardware the products were provided with; we followed the developers’ recommendations and note that the amount of email we receive is representative of a small organization.

To compare the products, we calculate a ‘final score’, which is defined as the spam catch (SC) rate minus five times the false positive (FP) rate. Products earn VBSpam certification if this value is at least 98:

SC − (5 × FP) ≥ 98

Meanwhile, those products that combine a spam catch rate of 99.50% or higher with a lack of false positives earn a VBSpam+ award.
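The scoring rules above can be expressed as a small Python sketch. It works from raw counts rather than the rounded percentages printed in this review; the `Result` class, its field names and the constant names are hypothetical and not part of any Virus Bulletin tooling.

```python
from dataclasses import dataclass

VBSPAM_THRESHOLD = 98.0     # minimum final score for a VBSpam award
VBSPAM_PLUS_MIN_SC = 99.50  # minimum spam catch rate (%) for a VBSpam+ award


@dataclass
class Result:
    spam_total: int   # spam messages in the corpus
    spam_missed: int  # false negatives
    ham_total: int    # legitimate emails (newsletters excluded)
    ham_blocked: int  # false positives

    @property
    def sc_rate(self) -> float:
        """Spam catch rate, in percent."""
        return 100.0 * (self.spam_total - self.spam_missed) / self.spam_total

    @property
    def fp_rate(self) -> float:
        """False positive rate on the ham corpus, in percent."""
        return 100.0 * self.ham_blocked / self.ham_total

    @property
    def final_score(self) -> float:
        """SC - (5 x FP): each false positive weighs five times as much as a miss."""
        return self.sc_rate - 5 * self.fp_rate

    def award(self) -> str:
        # VBSpam+: high catch rate and no false positives at all.
        if self.sc_rate >= VBSPAM_PLUS_MIN_SC and self.ham_blocked == 0:
            return "VBSpam+"
        if self.final_score >= VBSPAM_THRESHOLD:
            return "VBSpam"
        return "none"
```

With OnlyMyEmail’s figures from this test (two missed spam emails out of 81,726 and one false positive out of 11,136 legitimate emails), `Result(81_726, 2, 11_136, 1)` gives a final score of about 99.95 and a standard VBSpam award. For most other products the number of missed spam emails would have to be back-calculated from the published catch rate, so such inputs are only approximate.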

The test ran for 16 consecutive days: two full weeks, plus an extra weekend. It started at 12am on Saturday 22 June and ended at the same time on Monday 8 July.

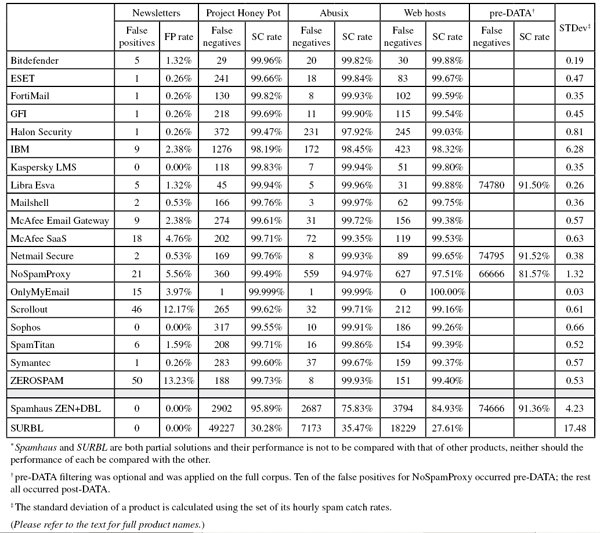

A total of 93,240 emails were sent as part of the test, 81,726 of which were spam. 70,610 of these were provided by Project Honey Pot, with the remaining 11,116 emails provided by spamfeed.me, a product from Abusix. They were all relayed in real time, as were the 11,136 legitimate emails (‘ham’) and 378 newsletters.

It is good to keep in mind that the spam we use is a random sample of two much larger feeds which cover the global spam landscape. Articles about spam tend to quote large numbers, sometimes millions of emails; nevertheless, the smaller number of emails in our corpus is a representative sample.

Figure 1 shows the catch rate of all full solutions throughout the test. To avoid the average being skewed by poorly performing products, the highest and lowest catch rates have been excluded for each hour.
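The hourly averaging behind Figure 1 amounts to a trimmed mean: for each hour, discard the single highest and lowest catch rate and average the rest. A minimal sketch, assuming per-hour catch rates are already available as percentages; the function name and input format are hypothetical.

```python
from statistics import mean


def trimmed_hourly_average(rates_by_hour: dict[str, list[float]]) -> dict[str, float]:
    """For each hour, drop the highest and lowest catch rate, then average the rest."""
    averages = {}
    for hour, rates in rates_by_hour.items():
        if len(rates) < 3:
            # Trimming needs at least one value left after removing both extremes.
            raise ValueError(f"need at least three catch rates for hour {hour!r}")
        trimmed = sorted(rates)[1:-1]  # remove the minimum and maximum
        averages[hour] = mean(trimmed)
    return averages
```

For a hypothetical hour with catch rates of 99.9, 99.6, 98.1, 99.0 and 90.0, the poorly performing 90.0 and the best 99.9 are dropped, and the remaining three average to 98.9.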

Comparing this graph with that of the previous test, we notice that once again, there is a lot of variation without a clear trend. The average catch rate was slightly higher this time than in the previous test.

In the last review, we discussed how spam sent from (mostly) compromised hosts (which we defined as spam whose sending IP was listening on port 80) was significantly harder to filter. The same phenomenon was observed in this test.
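The port 80 heuristic can be sketched as a plain TCP connect probe. This is an illustration only, assuming that a successful TCP connection is what "listening on port 80" means; the function name is hypothetical and this is not necessarily how the test harness performs the check.

```python
import socket


def is_listening(ip: str, port: int = 80, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to ip:port succeeds within the timeout.

    Refused connections, timeouts and unreachable hosts all raise OSError
    (or a subclass), which is treated as 'not listening'.
    """
    try:
        with socket.create_connection((ip, port), timeout=timeout):
            return True
    except OSError:
        return False
```

A sending IP for which `is_listening(ip)` returns `True` would then be counted as a likely compromised host (often a hacked web server) under the definition used in the last review.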

In the text that follows, unless otherwise specified, ‘ham’ or ‘legitimate email’ refers to email in the ham corpus – which excludes the newsletters – and a ‘false positive’ is a message in that corpus that has been erroneously marked by a product as spam.

Because the size of the newsletter corpus is significantly smaller than that of the ham corpus, a missed newsletter will have a much greater effect on the newsletter false positive rate than a missed legitimate email will have on the false positive rate for the ham corpus (e.g. one missed email in the ham corpus results in an FP rate of less than 0.01%, while one missed email in the newsletter corpus results in an FP rate of more than 0.2%).
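The effect of corpus size on the false positive rate is simple arithmetic, using the corpus sizes given earlier in this review (11,136 legitimate emails and 378 newsletters):

```python
HAM_CORPUS = 11_136       # legitimate emails in this test
NEWSLETTER_CORPUS = 378   # newsletters in this test


def fp_rate(missed: int, corpus_size: int) -> float:
    """False positive rate in percent for a given number of wrongly blocked emails."""
    return 100.0 * missed / corpus_size


print(f"{fp_rate(1, HAM_CORPUS):.4f}%")         # one ham email  -> 0.0090%
print(f"{fp_rate(1, NEWSLETTER_CORPUS):.4f}%")  # one newsletter -> 0.2646%
```

The same function reproduces the per-product figures: for example, Scrollout’s 37 false positives give `fp_rate(37, HAM_CORPUS)` of about 0.33%.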

SC rate: 99.94%

FP rate: 0.00%

Final score: 99.94

Project Honey Pot SC rate: 99.96%

Abusix SC rate: 99.82%

Newsletters FP rate: 1.3%

Bitdefender retains its record for the highest number of VBSpam awards achieved, having won one in each of the 26 tests we have run. But the Romanian vendor also has another record: without having missed a single legitimate email in the whole of 2013, it has now won four VBSpam+ awards in a row. What is more, the product increased its catch rate once again, blocking well over 99.9% of the spam in our corpus. With the second highest final score, there should be plenty of reasons for Bitdefender to celebrate this month.

SC rate: 99.68%

FP rate: 0.00%

Final score: 99.68

Project Honey Pot SC rate: 99.66%

Abusix SC rate: 99.84%

Newsletters FP rate: 0.3%

Since joining the VBSpam tests, ESET has always found itself among the higher ranked products. It has edged close to achieving a VBSpam+ award (its third) in each of the last two tests – but has been denied the higher level award on each occasion by just a single false positive. We were therefore pleased to find that the product didn’t miss any of the more than 11,000 legitimate emails in this month’s corpus – and with yet another good spam catch rate, this time ESET earns its third VBSpam+ award.

SC rate: 99.83%

FP rate: 0.08%

Final score: 99.43

Project Honey Pot SC rate: 99.82%

Abusix SC rate: 99.93%

Newsletters FP rate: 0.3%

In May, FortiMail achieved a VBSpam+ award – which was well deserved based on its past performance in general, and that month’s performance in particular. Unfortunately, it was not able to repeat the achievement in this test: while the appliance maintained a high catch rate (among the misses were a surprisingly large number of Japanese spam emails), we counted nine false positives, which pushed the product down the rankings. Nevertheless, Fortinet maintains an unbroken run of 25 VBSpam awards in as many tests.

SC rate: 99.72%

FP rate: 0.00%

Final score: 99.72

Project Honey Pot SC rate: 99.69%

Abusix SC rate: 99.90%

Newsletters FP rate: 0.3%

In the last test, GFI MailEssentials saw its performance drop a little because of some difficulties with the Abusix feed. We were thus pleased to see the product bounce back and catch 99.9% of Abusix spam – and 99.72% of all spam in general. Moreover, this improvement didn’t affect GFI’s false positive rate, and in fact it managed to avoid false positives altogether – thus the Maltese Windows-based solution earns its 14th VBSpam award and its second VBSpam+ award.

SC rate: 99.26%

FP rate: 0.03%

Final score: 99.13

Project Honey Pot SC rate: 99.47%

Abusix SC rate: 97.92%

Newsletters FP rate: 0.3%

A small drop in Halon’s spam catch rate (among the false negatives we noticed quite a few emails in non-Latin character sets) was accompanied by a drop in its false positive rate, and as a result, the Swedish solution’s final score actually increased. Halon Security thus easily wins its 15th VBSpam award.

SC rate: 98.23%

FP rate: 0.08%

Final score: 97.83

Project Honey Pot SC rate: 98.19%

Abusix SC rate: 98.45%

Newsletters FP rate: 2.4%

Almost half of the false negatives for IBM in this month’s corpus were from a single spam campaign, promising ‘Summer sale! Get 60% OFF’. While it didn’t quite knock 60% off the product’s catch rate, it did help push it down to not much more than 98%. As the product also missed nine legitimate emails, its final score dropped below the 98 threshold and thus the product was denied a VBSpam award on this occasion.

SC rate: 99.85%

FP rate: 0.00%

Final score: 99.85

Project Honey Pot SC rate: 99.83%

Abusix SC rate: 99.94%

Newsletters FP rate: 0.0%

Kaspersky’s Linux Mail Security product missed out on a VBSpam+ award in each of the last two tests – first because of a low spam catch rate, and then because of a single false positive. However, it’s a case of third time lucky for the Russian solution, as this month it combined yet another high catch rate with a lack of false positives (including in the harder-to-filter newsletter corpus), achieving the fourth highest final score. Hence the seventh award for this particular Kaspersky product is its second VBSpam+ award.


SC rate: 99.94%

FP rate: 0.00%

Final score: 99.94

Project Honey Pot SC rate: 99.94%

Abusix SC rate: 99.96%

SC rate pre-DATA: 91.50%

Newsletters FP rate: 1.3%

Since joining the VBSpam tests back in 2010, Libra Esva has consistently ranked among the top performers. This test was no exception, as the Italian product achieved the third highest catch rate, the third highest final score and – thanks to a lack of false positives – its third VBSpam+ award.

SC rate: 99.79%

FP rate: 0.00%

Final score: 99.79

Project Honey Pot SC rate: 99.76%

Abusix SC rate: 99.97%

Newsletters FP rate: 0.5%

In the last test, Mailshell’s Mail Agent (the new version of the company’s SDK) came within an inch of earning a VBSpam+ award, which would have been the company’s second. This time around, the product had better luck – managing to combine a lack of false positives with an increase in its spam catch rate, and thus achieving its second VBSpam+ award, along with the fifth highest final score.

SC rate: 99.63%

FP rate: 0.04%

Final score: 99.41

Project Honey Pot SC rate: 99.61%

Abusix SC rate: 99.72%

Newsletters FP rate: 2.4%

This month’s test was a good one for McAfee’s Email Gateway appliance, as the product saw an increase in its spam catch rate (we couldn’t detect any pattern in the 300-odd spam emails it missed) while halving its false positive rate to just five missed legitimate emails. Its highest final score in a year earns McAfee another VBSpam award for this appliance.

SC rate: 99.66%

FP rate: 0.03%

Final score: 99.53

Project Honey Pot SC rate: 99.71%

Abusix SC rate: 99.35%

Newsletters FP rate: 4.8%

McAfee’s hosted anti-spam solution missed more spam emails this month than in the previous test – half of which were written in a foreign character set – but at 99.66%, its spam catch rate is nothing to be ashamed of. Nor are the three false positives (reduced from five in the last test), although they do of course get in the way of the product winning a VBSpam+ award. It easily earns another VBSpam award though.

SC rate: 99.78%

FP rate: 0.00%

Final score: 99.78

Project Honey Pot SC rate: 99.76%

Abusix SC rate: 99.93%

SC rate pre-DATA: 91.52%

Newsletters FP rate: 0.5%

The Netmail Secure virtual appliance is another product that has narrowly missed out on achieving a VBSpam+ award in the last two tests. This time was different, however: with a lack of false positives and fewer than one in 460 spam emails missed, the product from Messaging Architects earns its third VBSpam+ award.

SC rate: 98.88%

FP rate: 0.32%

Final score: 97.26

Project Honey Pot SC rate: 99.49%

Abusix SC rate: 94.97%

SC rate pre-DATA: 81.57%

Newsletters FP rate: 5.6%

In the last test, Windows solution NoSpamProxy from Net At Work made its VBSpam debut. It earned a VBSpam award with a final score that just scraped in above the VBSpam threshold. Unfortunately, its performance deteriorated in this test, with both a lower catch rate and a higher false positive rate recorded. The low catch rate was partly caused by some Chinese-language spam in the Abusix corpus, while most of the false positives were written in English. We will of course work with the developers at Net At Work and provide them with detailed feedback – hopefully this will help to improve the product’s performance in time for the next test.

SC rate: 99.998%

FP rate: 0.01%

Final score: 99.95

Project Honey Pot SC rate: 99.999%

Abusix SC rate: 99.99%

Newsletters FP rate: 4.0%

OnlyMyEmail missed just two spam emails in this test, one from each source, and both were emails that were missed by the majority of the products. One would imagine this to be an achievement to be proud of, but since the hosted solution had not missed a single spam email in our tests since November 2012, it may be seen as a slight disappointment from the developers’ point of view. It shouldn’t be, though, and there is more reason to be pleased: with just a single false positive, the product ends up with a final score of 99.95, the highest in this test. OnlyMyEmail thus continues its unbroken run of VBSpam awards.

SC rate: 99.64%

FP rate: 0.33%

Final score: 97.98

Project Honey Pot SC rate: 99.62%

Abusix SC rate: 99.71%

Newsletters FP rate: 12.2%

The free and open-source product Scrollout F1 saw a nice increase in its spam catch rate, with much of the spam it missed written in Japanese. Unfortunately, the product’s false positive rate increased too: with 37 missed legitimate emails, Scrollout had more false positives than any other participating product. As a result, its final score dropped below the threshold of 98, and the product failed to earn its third VBSpam award.

SC rate: 99.60%

FP rate: 0.04%

Final score: 99.42

Project Honey Pot SC rate: 99.55%

Abusix SC rate: 99.91%

Newsletters FP rate: 0.0%

It was nice to see the results for Sophos’s Email Appliance: the product’s catch rate was a lot higher than previously – the second highest increase of all products – while the false positive rate remained the same. On top of that, it was one of only two full solutions (the other being Kaspersky) that didn’t block any of the close to 400 newsletters. The vendor’s 21st VBSpam award is thus well deserved.

SC rate: 99.73%

FP rate: 0.01%

Final score: 99.69

Project Honey Pot SC rate: 99.71%

Abusix SC rate: 99.86%

Newsletters FP rate: 1.6%

There was a single legitimate email in this test that was incorrectly blocked by about half of all participating products. SpamTitan was one of them, which was a shame, as it was this that denied the vendor a VBSpam+ award. Still, there is no reason for the developers to be disappointed: an increased catch rate means the product easily wins its 23rd VBSpam award in as many tests.

SC rate: 99.61%

FP rate: 0.00%

Final score: 99.61

Project Honey Pot SC rate: 99.60%

Abusix SC rate: 99.67%

Newsletters FP rate: 0.3%

In the last test, Symantec’s Messaging Gateway virtual appliance missed 10 legitimate emails. This turned out to be a temporary glitch, as this month the product didn’t block any of the more than 11,000 legitimate emails. As the spam catch rate remained well above 99.5%, the security giant not only wins its 22nd VBSpam award but also its second VBSpam+ award.

SC rate: 99.76%

FP rate: 0.02%

Final score: 99.67

Project Honey Pot SC rate: 99.73%

Abusix SC rate: 99.93%

Newsletters FP rate: 13.2%

Compared with the previous test, ZEROSPAM’s catch rate increased by 0.02 percentage points, while its number of false positives decreased from five to two. It was these two emails – both written in Russian – that stood in the way of ZEROSPAM earning another VBSpam+ award, but the product’s ninth VBSpam award is still a good reason for the developers to celebrate.

SC rate: 93.16%

FP rate: 0.01%

Final score: 93.12

Project Honey Pot SC rate: 95.89%

Abusix SC rate: 75.83%

SC rate pre-DATA: 91.36%

Newsletters FP rate: 0.0%

The Spamhaus ZEN and DBL blacklists (the former blocks based on the sending IP address, the latter on domains seen inside the email) missed a single legitimate email because it contained a domain that was listed on the DBL. There may well have been good reason for this action, but as the domain was included in a legitimate email, it was classed as a false positive. It is perhaps more important to note that after a disappointing score in the last test, Spamhaus’s catch rate bounced back to well over 93%.
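Both Spamhaus lists are queried over DNS. The sketch below builds the lookup names, assuming the usual DNSBL conventions: an IPv4 address is queried with its octets reversed under `zen.spamhaus.org`, a domain is queried as-is under `dbl.spamhaus.org`, and an answer in 127.0.x.x means the entry is listed. The helper names are hypothetical; Spamhaus’s own documentation is the authority on usage terms and on the meaning of individual return codes.

```python
import ipaddress
import socket

ZEN_ZONE = "zen.spamhaus.org"  # IP-based list: keyed on the sending IP address
DBL_ZONE = "dbl.spamhaus.org"  # domain-based list: keyed on domains seen in the email


def zen_query_name(ip: str) -> str:
    """Build the DNSBL query name for an IPv4 address (octets reversed)."""
    addr = ipaddress.IPv4Address(ip)  # raises ValueError for malformed input
    reversed_octets = ".".join(reversed(str(addr).split(".")))
    return f"{reversed_octets}.{ZEN_ZONE}"


def dbl_query_name(domain: str) -> str:
    """Build the query name for a domain: the domain itself, prefixed to the zone."""
    return f"{domain.rstrip('.').lower()}.{DBL_ZONE}"


def is_listed(query_name: str) -> bool:
    """Resolve the query name; an answer in 127.0.x.x is treated as a listing.

    Assumptions: NXDOMAIN (or any other resolution failure) means 'not listed',
    and 127.255.255.x answers are error codes rather than listings.
    """
    try:
        answer = socket.gethostbyname(query_name)
    except socket.gaierror:
        return False
    return answer.startswith("127.0.")
```

A single hit on a domain in the DBL, as happened here with one legitimate email, is enough for the message to be counted as blocked, which is why the combined ZEN and DBL entry recorded a false positive.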

SC rate: 30.99%

FP rate: 0.00%

Final score: 30.99

Project Honey Pot SC rate: 30.28%

Abusix SC rate: 35.47%

Newsletters FP rate: 0.0%

A tendency among spammers to use links to compromised websites in the emails they send, rather than to domains they own themselves, is the likely explanation for the decline in the number of emails that contain a domain listed by SURBL (and hence the number of spam messages blocked by the product). The blacklist simply can’t (easily) block the compromised sites, or it would cause false positives. So while the 31% of spam blocked ‘by’ SURBL is much lower than the percentage we saw last year, it is still a sizeable chunk – and SURBL once again had no false positives.
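A URI blacklist such as SURBL is consulted in a similar way to the IP-based lists, but using the domains of links found in the message body. The sketch below assumes the `multi.surbl.org` zone and deliberately simplifies domain extraction to the last two labels of each host; a production filter would use the Public Suffix List to handle names such as `example.co.uk`. The function and regular expression are hypothetical illustrations.

```python
import ipaddress
import re
from urllib.parse import urlsplit

SURBL_ZONE = "multi.surbl.org"
URL_RE = re.compile(r"https?://[^\s\"'<>]+", re.IGNORECASE)


def _is_ip_literal(host: str) -> bool:
    try:
        ipaddress.ip_address(host)
        return True
    except ValueError:
        return False


def surbl_query_names(body: str) -> set[str]:
    """Return the SURBL query names for the link domains found in a message body."""
    names = set()
    for url in URL_RE.findall(body):
        host = urlsplit(url).hostname  # lower-cased; port and credentials stripped
        if not host:
            continue
        host = host.rstrip(".")  # drop a sentence-final dot
        if _is_ip_literal(host):
            continue  # this sketch only handles domain names
        # Simplification: keep the last two labels (www.example.com -> example.com).
        base = ".".join(host.split(".")[-2:])
        names.add(f"{base}.{SURBL_ZONE}")
    return names
```

A link to a compromised website produces a query for that site’s own, otherwise legitimate, domain, which illustrates why listing it would risk false positives.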


This month’s results were a bit of a mixed bag. In the next test, many participants will want to prove that their good performance this month wasn’t a one-off, while others will want to show that this month’s glitch was exactly that: a one-off, perhaps caused by incorrect configuration or by issues with a single spam campaign.

The next VBSpam test will run in August 2013, with the results scheduled for publication in September. Developers interested in submitting products should email [email protected].