2012-01-19

Abstract

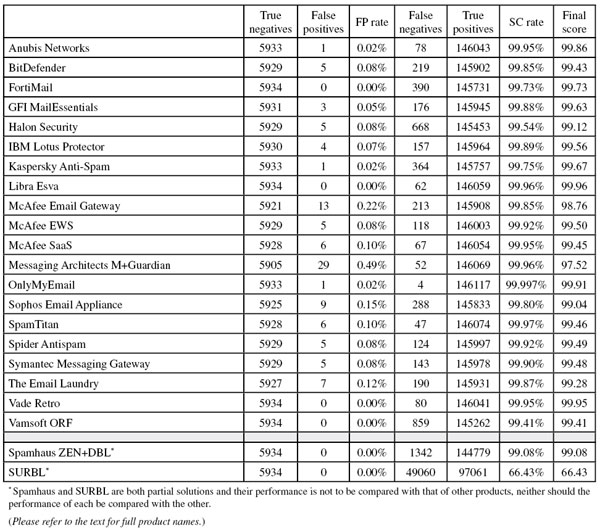

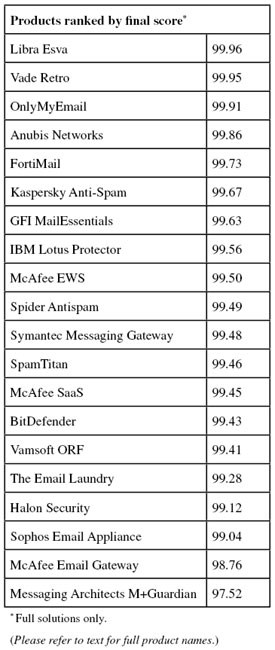

This month's VBSpam test saw 22 products on the test bench, 20 of which were full solutions and the other two were partial solutions (DNS blacklists). All of the full solutions achieved a VBSpam award but their performance differed greatly in the details. Martijn Grooten reveals more.

Copyright © 2012 Virus Bulletin

Intel recently published a fascinating infographic [1] entitled ‘What Happens in an Internet Minute’. It shows, for instance, that every minute, 1.3 million YouTube videos are being watched; over 2 million Google searches are being performed; 6 million people are viewing Facebook and 20 million people are viewing photos on Flickr. All of these numbers are dwarfed, however, by the 204 million emails that are being sent.

Intel doesn’t specify whether this number includes spam, but even if it does, that still means some 50 million legitimate emails are sent over the Internet every minute. That is over 70 billion legitimate emails every day. It puts the oft heard predictions that email is on its way out – soon to be replaced by social media – into perspective.

Does this mean that I believe email is here to stay forever? No, it doesn’t. It may be, and I actually believe that the protocol will be here for many decades to come, but I could be wrong. Things do, after all, change quickly on the Internet – who would have predicted a few years ago that today, millions of people using ever faster computers would communicate via a website that had a 140-character limit on messages?

From an anti-spam and more general security point of view, it doesn’t matter much how accurately you can predict the future; not even if you want your company to protect its customers in the future. What does matter is how well you can adapt to the changes that actually happen.

This is also one of the principles behind the VBSpam tests. In this report, we show how well products performed against email threats that were circulating throughout the holiday period. This is useful for potential customers reading this report in the first half of 2012, but will become less useful as time goes on – which is why we will continue to run tests and publish reports every other month.

We had 22 products on the test bench for this test, 20 of which were full solutions. The other two were partial solutions (DNS blacklists) which are designed to be used in conjunction with other products to provide anti-spam protection. All of the full solutions achieved a VBSpam award but their performance differed greatly in the details.

The VBSpam test methodology can be found at http://www.virusbtn.com/vbspam/methodology/. As usual, email was sent to the products in parallel and in real time, and products were given the option to block email pre-DATA. Four products chose to make use of this option.

As in previous tests, the products that needed to be installed on a server were installed on a Dell PowerEdge R200, with a 3.0GHz dual core processor and 4GB of RAM. The Linux products ran on SuSE Linux Enterprise Server 11; the Windows Server products ran on either the 2003 or the 2008 version, depending on which was recommended by the vendor.

To compare the products, we calculate a ‘final score’, which is defined as the spam catch (SC) rate minus five times the false positive (FP) rate. Products earn VBSpam certification if this value is at least 97:

SC - (5 x FP) ? 97

The test ran for 16 consecutive days, from 12am GMT on Wednesday 21 December 2011 until 12am GMT on Friday 6 January 2012.

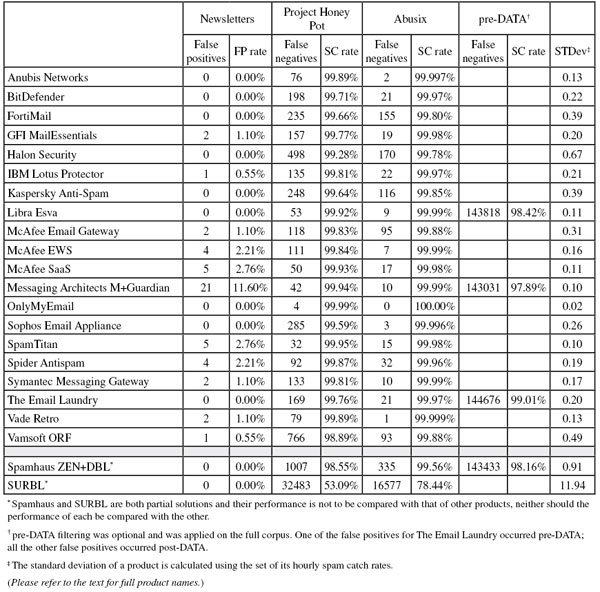

The corpus contained 152,236 emails, 146,121 of which were spam. Of these, 69,238 were provided by Project Honey Pot, and 76,883 were provided by Spamfeed.me, a product from Abusix. They were all relayed in real time, as were the 5,934 legitimate emails (‘ham’) and the remaining 181 emails, which were all legitimate newsletters.

Figure 1 shows the catch rate of all full solutions throughout the test. To avoid the average being skewed by poorly performing products, the highest and lowest catch rate have been excluded for each hour. It was nice to see that all of the products performed rather well – especially given that, in businesses, many employees will have been away during this holiday period. In the past, spammers have taken advantage of this fact and launched new spam campaigns over the Christmas and New Year holidays, in the hope that they would take longer to be blocked.

Taking into account the increased size of the ham corpus, the average false positive rate was slightly lower than in the previous test (note that the test rules prescribe that no more than four false positives are counted per sender and/or subject line). On average, participating products missed one in 1,240 legitimate emails. This may not sound like much, but it would mean that users would not be able to ignore their email quarantine, or trust the perimeter-level filter not to discard any legitimate emails.

Of course, we acknowledge that some legitimate emails are difficult to filter. Our corpus contains many emails that the average user does not receive, such as messages in (modern) Greek or Russian, or messages that discuss advanced scientific subjects or specialist computer software. But then, doesn’t most people’s email traffic contain messages that are in some way unusual for the average user?

Moreover, even the most ‘difficult’ legitimate emails were incorrectly blocked by only four products or fewer. As we have said before, the odd false positive is not a major issue and, much as it can be a nuisance to an end-user, is not the end of the world. But avoiding them altogether remains the goal. Kudos, therefore, to the six solutions that did so.

The VBSpam tests, as the name suggests, are about spam: unsolicited email that appears to have been sent in bulk. The main purpose of the tests is, and will always be, to determine which products are capable of stopping as much spam as possible, while at the same time managing to avoid blocking legitimate emails.

In reality, of course, the situation is more complicated than that and, rather than a black-and-white world of spam and ham, there are varying degrees of ‘greyness’. We believe that filters should adapt to this reality.

One of many ways in which this can be done is to include DKIM-based filtering in a product.

If an email contains a valid DKIM signature of the domain example.com, it means that the email is signed by example.com. This may seem like stating the obvious, but the important part of the sentence is the word ‘is’: other than the sending IP address, none of the properties of an email can be guaranteed to be correct. This means it is impossible to use such properties to whitelist on, or to use them in a reputation-based filter.

The IP address has historically been useful, but does not allow for some of the subtleties that DKIM can offer; also, it will become significantly harder (and probably impossible) to keep good IP-based whitelists and blacklists for IPv6. DKIM has the potential to solve these problems.

Several of the products participating in the test allow administrators to whitelist (and sometimes blacklist) emails based on the presence of a valid DKIM signature of a certain domain. We would be able to test for this. However, many other products use DKIM in a more subtle way as part of the filter – which we are not able to measure and, by only testing products that allow for whitelisting and/or blacklisting, we don’t feel we would do justice to the latter kind of product.

Moreover, for technical reasons, our test set-up is not very DKIM-friendly (DKIM is very sensitive to the smallest modifications to emails; unfortunately, we sometimes have to make such modifications), and as a result we decided against including DKIM in this report. We will continue to look for ways in which to test the products that include DKIM in their filtering.

Two tests ago, we introduced a feed of newsletters (this includes commercial emails - ‘these are our weekly offers’ - as well as mailings of a less (obvious) commercial nature - ‘this is what’s going on at our school next week’) and began to measure products’ performance against this corpus. Because newsletters – even those that have been subscribed to – are rarely considered important by their recipients, we exclude this measure from the final score. Some products actually have one or more separate categories for such newsletters, which allows end-users to filter them to a particular folder, thus avoiding a clogged inbox, while still being able to read the newsletters at their leisure.

For products that are capable of doing this, we have decided to look at how well they manage to correctly classify newsletters as such (newsletters classified as ‘ordinary ham’ or as ‘spam’ are considered to have been misclassified). In this test, only one participating product (Vade Retro) was both willing and able to turn this feature on; we consider it important enough to include this in the report.

In the text that follows, unless otherwise specified, ‘ham’ or ‘legitimate email’ refers to email in the ham corpus – which excludes the newsletters – and a ‘false positive’ is a message in that corpus that has been erroneously marked by a product as spam.

Because the size of the newsletter corpus is significantly smaller than that of the ham corpus, a missed newsletter will have a much greater effect on the newsletter false positive rate than a missed legitimate email will have on the false positive rate for the ham corpus (e.g. one missed email in the ham corpus results in an FP rate of 0.02%, while one missed email in the newsletter corpus results in an FP rate of 0.5%).

SC rate: 99.95%

FP rate: 0.02%

Final score: 99.86

Project Honey Pot SC rate: 99.89%

Abusix SC rate: 99.997%

Newsletters FP rate: 0.00%

With a single false positive in the ham corpus (none among the newsletters), and one of the highest spam catch rates in this month’s test, Anubis is back on top form this month, achieving the fourth highest final score and earning its ninth VBSpam award in as many tests.

SC rate: 99.85%

FP rate: 0.08%

Final score: 99.43

Project Honey Pot SC rate: 99.71%

Abusix SC rate: 99.97%

Newsletters FP rate: 0.00%

BitDefender prides itself on being the only product to have participated in every VBSpam test since the start, and to have won a VBSpam award on each occasion. With five false positives in this test, the Romanian product saw its final score drop, although its performance was still decent enough to add another VBSpam award to its tally. It will be up to the developers to demonstrate in the next test that this was just a temporary glitch.

SC rate: 99.73%

FP rate: 0.00%

Final score: 99.73

Project Honey Pot SC rate: 99.66%

Abusix SC rate: 99.80%

Newsletters FP rate: 0.00%

Fortinet’s FortiMail is another product that has been included in our tests since the early days, having joined the test bench on the second test. This month FortiMail earns its 16th VBSpam award. What is more, with a nicely improved spam catch rate, the hardware appliance achieved the fifth highest final score.

SC rate: 99.88%

FP rate: 0.05%

Final score: 99.63

Project Honey Pot SC rate: 99.77%

Abusix SC rate: 99.98%

Newsletters FP rate: 1.10%

On its fifth visit to the VBSpam test bench, GFI MailEssentials yet again improved both its spam catch rate and its false positive rate. With a very good final score, the Windows product wins another VBSpam award.

SC rate: 99.54%

FP rate: 0.08%

Final score: 99.12

Project Honey Pot SC rate: 99.28%

Abusix SC rate: 99.78%

Newsletters FP rate: 0.00%

Like many products, Halon Security achieved a slightly higher spam catch rate in this test than on the last occasion, though this was combined with a handful of false positives. No doubt the product’s developers will be looking to improve its performance in the next test; for now it achieves its sixth consecutive VBSpam award.

SC rate: 99.89%

FP rate: 0.07%

Final score: 99.56

Project Honey Pot SC rate: 99.81%

Abusix SC rate: 99.97%

Newsletters FP rate: 0.55%

IBM Lotus Protector missed four legitimate emails, but with a good spam catch rate, its final score continues to be decent – showing that the ‘grand old man’ of computers protects well against existing threats and earning the product its third VBSpam award in as many tests.

SC rate: 99.75%

FP rate: 0.02%

Final score: 99.67

Project Honey Pot SC rate: 99.64%

Abusix SC rate: 99.85%

Newsletters FP rate: 0.00%

Many products saw their spam catch rates improve in this test, but few did as well as Kaspersky Anti-Spam, which more than halved its rate of missed spam. Once again, there was just a single false positive and the vendor wins its 15th VBSpam award.

SC rate: 99.96%

FP rate: 0.00%

Final score: 99.96

Project Honey Pot SC rate: 99.92%

Abusix SC rate: 99.99%

SC rate pre-DATA: 98.42%

Newsletters FP rate: 0.00%

This month Libra Esva finally managed what it had been so close to so many times before: achieving the highest final score in the test. An excellent spam catch rate was combined with a total lack of false positives (even among newsletters), which means that, along with its 11th VBSpam award, the Italian company should have extra cause for celebration.

SC rate: 99.85%

FP rate: 0.22%

Final score: 98.76

Project Honey Pot SC rate: 99.83%

Abusix SC rate: 99.88%

Newsletters FP rate: 1.10%

McAfee’s Email Gateway appliance blocked 13 legitimate emails in this test. While this is an improvement on the previous test, it continues to be a concern. Thanks to a good spam catch rate, the product wins its 15th VBSpam award in as many tests – but we hope to see the false positive rate drop in the next test.

SC rate: 99.92%

FP rate: 0.08%

Final score: 99.50

Project Honey Pot SC rate: 99.84%

Abusix SC rate: 99.99%

Newsletters FP rate: 2.21%

As in the previous test, McAfee’s Email and Web Security Appliance missed five legitimate emails. But since it also saw its spam catch rate improve a little further – to an impressive 99.92% – the product easily wins another VBSpam award – its 13th out of 14 tests.

SC rate: 99.95%

FP rate: 0.10%

Final score: 99.45

Project Honey Pot SC rate: 99.93%

Abusix SC rate: 99.98%

Newsletters FP rate: 2.76%

In this test McAfee’s hosted solution saw its false positive rate significantly reduced. And while six missed legitimate emails are still six too many, with a spam catch rate equally as high as it was in the previous test, the product’s final score improved quite a bit and a fourth VBSpam award can be added to the product’s tally.

SC rate: 99.96%

FP rate: 0.49%

Final score: 97.52

Project Honey Pot SC rate: 99.94%

Abusix SC rate: 99.99%

SC rate pre-DATA: 97.89%

Newsletters FP rate: 11.60%

We want vendors to submit their solutions to our tests, but when a choice must be made between working on a new, improved version of a product or supporting the test, we understand that vendors consider the former more important. It was nice, therefore, to see M+Guardian return to the test bench after a 14-month absence. Whereas in the past we tested a hardware appliance, this time we tested a virtual version of the product that runs under ESXi.

On its return, M+Guardian impressed us with one of the highest spam catch rates in this test, missing just over 50 spam messages. Unfortunately, the product also blocked a few dozen legitimate emails – more than any other product. For now, given the product’s long absence, we are willing to blame this on unfamiliarity with the ham corpus, but we hope that the developers can show that this is indeed the case. A VBSpam award should keep their spirits up in the meantime.

SC rate: 99.997%

FP rate: 0.02%

Final score: 99.91

Project Honey Pot SC rate: 99.99%

Abusix SC rate: 100.00%

Newsletters FP rate: 0.00%

In this test, OnlyMyEmail missed fewer than one in 36,000 spam messages. After several exceptional performances like this, we’ve almost stopped being surprised and thus it is good to note that many users do not even receive this much spam in a full year! Unfortunately, the product missed a single legitimate email (but no newsletters) and thus it ‘only’ achieved the third highest final score – but the vendor’s eighth consecutive VBSpam award is no less deserved.

SC rate: 99.80%

FP rate: 0.15%

Final score: 99.04

Project Honey Pot SC rate: 99.59%

Abusix SC rate: 99.996%

Newsletters FP rate: 0.00%

With nine false positives, Sophos’s performance in this test was a little disappointing and its developers will no doubt try to improve on this score in the next test. For now, thanks to a good spam catch rate, the product’s final score remained decent and the vendor’s 12th visit to the test bench brought Sophos its 12th VBSpam award.

SC rate: 99.97%

FP rate: 0.10%

Final score: 99.46

Project Honey Pot SC rate: 99.95%

Abusix SC rate: 99.98%

Newsletters FP rate: 2.76%

This month, SpamTitan achieved what all products aim to do: it both improved its spam catch rate (in fact, it blocked more spam than all but one other product) and reduced its false positive rate, resulting in an increased final score and earning the product its 14th VBSpam award.

SC rate: 99.92%

FP rate: 0.08%

Final score: 99.49

Project Honey Pot SC rate: 99.87%

Abusix SC rate: 99.96%

Newsletters FP rate: 2.21%

In this test Spider Antispam saw an improvement to what was already a very decent spam catch rate; in fact, the Czech product almost halved its percentage of missed spam compared with the last test.

Unfortunately, the product also picked up a handful of false positives, which reduced the final score a little, but Spider Antispam still achieved a high enough score to earn its fourth consecutive VBSpam award.

SC rate: 99.90%

FP rate: 0.08%

Final score: 99.48

Project Honey Pot SC rate: 99.81%

Abusix SC rate: 99.99%

Newsletters FP rate: 1.10%

As in the last test, Symantec Messaging Gateway picked up a few false positives – something the development team will undoubtedly want to look at. But with a slightly improved spam catch rate, the product still managed to achieve a slightly higher final score than on the last occasion – therefore also earning a VBSpam award, which takes its tally to 13 consecutive awards.

SC rate: 99.87%

FP rate: 0.12%

Final score: 99.28

Project Honey Pot SC rate: 99.76%

Abusix SC rate: 99.97%

SC rate pre-DATA: 99.01%

Newsletters FP rate: 0.00%

A score of seven false positives is something that the developers at The Email Laundry will almost certainly want to improve upon in the next test. Fortunately, as in previous tests the product’s spam catch rate was very good, which ensured that the product’s final score was high enough to earn it its 11th consecutive VBSpam award.

SC rate: 99.95%

FP rate: 0.00%

Final score: 99.95

Project Honey Pot SC rate: 99.89%

Abusix SC rate: 99.999%

Newsletters FP rate: 1.10%

Newsletters accuracy: 93.9%

Vade Retro’s developers were a little disappointed with the product’s relatively low spam catch rate in the last test. Upon investigation, they discovered that this was the result of a misconfiguration in the set-up of our account – causing large emails to evade the filter altogether. Understandably, they were eager to find out how the product had fared this month.

No doubt they will be very happy with the results: not only did the product block all but one in over 1,800 spam messages, but it also avoided blocking legitimate emails altogether. With the second highest final score this month (just a fraction lower than the highest), Vade Retro wins its 11th consecutive VBSpam award.

Vade Retro was also the only participating product that was both willing and able to have the accuracy of its newsletter classification feature measured. An accuracy of 93.9% (or 170 out of 181 emails) means that users of the product should easily be able to segregate non-urgent newsletters from the rest of their legitimate mail.

SC rate: 99.41%

FP rate: 0.00%

Final score: 99.41

Project Honey Pot SC rate: 98.89%

Abusix SC rate: 99.88%

Newsletters FP rate: 0.55%

Thanks to its track record, it came as little surprise to find that Vamsoft ORF managed to completely avoid blocking legitimate emails in this test – despite the fact that few other solutions managed to achieve the same feat this month. Alongside a score of zero false positives, the Hungarian product managed to increase its spam catch rate, and thus with an increased final score, earns its 11th VBSpam award.

SC rate: 99.08%

FP rate: 0.00%

Final score: 99.08

Project Honey Pot SC rate: 98.55%

Abusix SC rate: 99.56%

SC rate pre-DATA: 98.16%

Newsletters FP rate: 0.00%

After a drop in its spam catch rate in the last test, Spamhaus bounced back this month and blocked more than 99% of spam – more than it has ever done before. For a partial solution (one that is dependent on spammers using IP addresses and domains that are either not used or which are only rarely used to send legitimate email), this is a really good score and another VBSpam award – the product’s 13th – is well deserved.

SC rate: 66.43%

FP rate: 0.00%

Final score: 66.43

Project Honey Pot SC rate: 53.09%

Abusix SC rate: 78.44%

Newsletters FP rate: 0.00%

Like Spamhaus, SURBL is a partial solution (and one of a different kind): it only blocks emails based on URIs present in the email body, and no one would consider using it as a spam filter on its own. Close to two-thirds of the spam messages were blocked using the URI-based blacklist – a drop compared to the last test, though this is for the most part explained by the fact that fewer messages in this month’s spam corpus contained a (recognizable) URL.

(Click here for a larger version of the table)

(Click here for a larger version of the table)

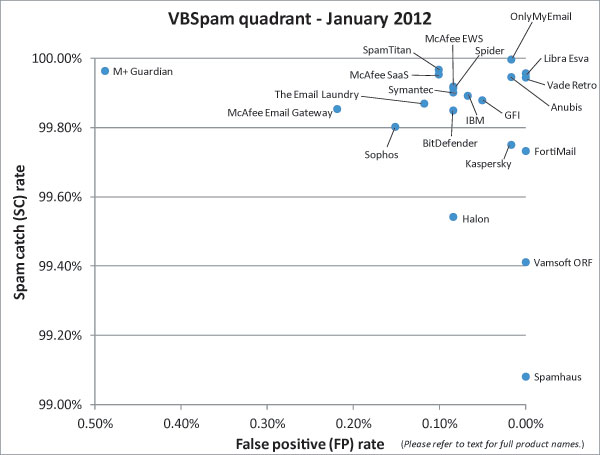

(Click here for a larger version of the chart)

Once again this month, all full solutions won a VBSpam award. This is good news and demonstrates the breadth of options available to customers when it comes to filtering spam.

However, from talking to the developers and product managers, I understand that winning an award isn’t necessarily at the top of their list of priorities: what they actually want is for their products to perform well and to be placed in the magic top right-hand corner of the VBSpam quadrant – where spam catch rates are high and false positive rates are low. While the top right-hand corner of the quadrant is currently fairly crowded, significant differences can be seen between the various participating solutions. Based on this month’s performances, many developers will be spending time over the next few weeks working to improve their products’ filtering abilities.

The next VBSpam test will run in February 2012, with the results scheduled for publication in March. Developers interested in submitting products should email [email protected].