2011-11-21

Abstract

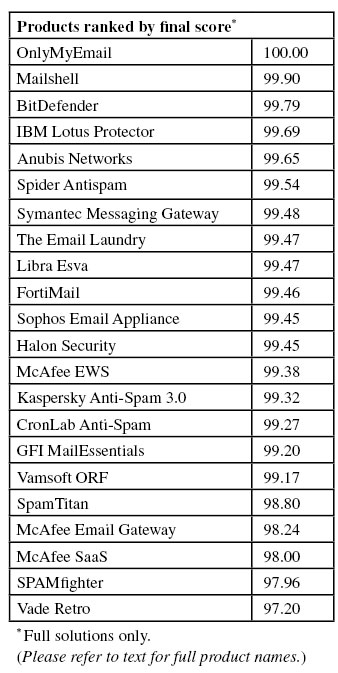

This month’s VBSpam test sees 24 products on the test bench (22 full solutions and two blacklists). All full solutions achieved a VBSpam award, but there was more distinction between the products’ performance than in recent tests and some only just reached the certification threshold. Martijn Grooten has the details.

Copyright © 2011 Virus Bulletin

One of the most important developments in IT security over the past year has been the significant increase in the number of targeted attacks. Examples include the attack on security firm RSA, attacks on various chemical companies and the mysterious ‘Duqu’ trojan. In each of these cases email was the main infection vector.

It is good to keep this in mind when using, developing or reviewing email filters. Spam has been a problem for well over a decade because of its sheer volume and most filters make use of this volume to improve their detection: they apply sensors (e.g. spam traps, customer reports) that detect new campaigns and which (usually automatically) update filters. Generally this works well.

But a problem has arisen at the other end of the spectrum where targeted emails are sent in very small numbers to specific addresses. Such emails tend to avoid the sensors described above. They also tend to avoid anti-spam tests – we have little hope of being sent ‘Duqu’-like emails in real time so that we can use them in our tests.

This does not mean that we do not take the problem seriously, though. While our main focus continues to be on the quantitative side of spam – the messages that are sent in their millions and which, despite a decline in the past year, continue to clog up networks and inboxes alike – we are also looking into adding tests and checks that will give an indication of how well filters protect against targeted attacks.

This month we have 24 products on the test bench, 22 of which are full solutions, while the other two are blacklists. Yet again, this number exceeds all previous tests, meaning that this month’s review covers an even greater share of the market. All full solutions achieved a VBSpam award, but there was more distinction between the products’ performance than in recent tests and some only just reached the certification threshold.

The VBSpam test methodology can be found at http://www.virusbtn.com/vbspam/methodology/. As usual, email was sent to the products in parallel and in real time, and products were given the option to block email pre-DATA. Three products chose to make use of this option.

As in previous tests, the products that needed to be installed on a server were installed on a Dell PowerEdge R200, with a 3.0GHz dual core processor and 4GB of RAM. The Linux products ran on SuSE Linux Enterprise Server 11; the Windows Server products ran on either the 2003 or the 2008 version, depending on which was recommended by the vendor.

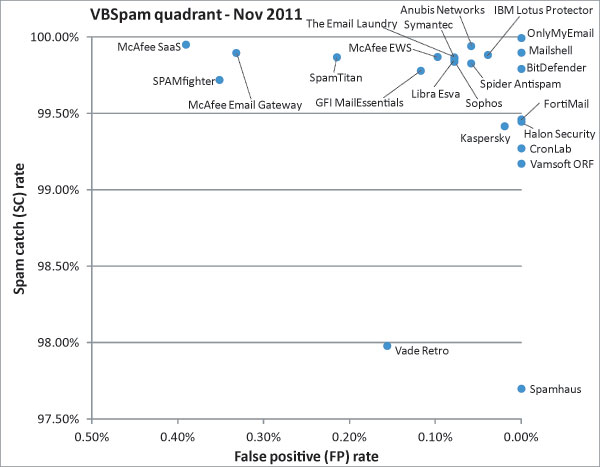

To compare the products, we calculate a ‘final score’, which is defined as the spam catch (SC) rate minus five times the false positive (FP) rate. Products earn VBSpam certification if this value is at least 97:

SC − (5 × FP) ≥ 97
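As a minimal sketch of this calculation, the snippet below computes the final score and checks it against the threshold. The function and constant names are illustrative and not part of the VBSpam methodology; the example figures (a 99.94% spam catch rate and three false positives among 5,119 legitimate emails) are taken from the results reported in this review.

```python
# Illustrative names; only the formula and the threshold come from the text.
CERTIFICATION_THRESHOLD = 97.0
FP_WEIGHT = 5  # each percentage point of false positives counts five times


def final_score(sc_rate: float, fp_rate: float) -> float:
    """Return SC - 5 x FP, with both rates given as percentages."""
    return sc_rate - FP_WEIGHT * fp_rate


def is_certified(sc_rate: float, fp_rate: float) -> bool:
    """True if the final score reaches the certification threshold."""
    return final_score(sc_rate, fp_rate) >= CERTIFICATION_THRESHOLD


# Example: 99.94% spam catch rate, three false positives in 5,119 ham emails.
example_fp_rate = 100 * 3 / 5119
example_score = final_score(99.94, example_fp_rate)
print(f"final score: {example_score:.2f}, "
      f"certified: {is_certified(99.94, example_fp_rate)}")
```

Because the false positive rate is weighted five times, a handful of blocked legitimate emails can outweigh a noticeably higher spam catch rate.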

The test ran for 16 consecutive days, from 12am BST on Saturday 22 October 2011 until 12am GMT on Monday 7 November 2011.

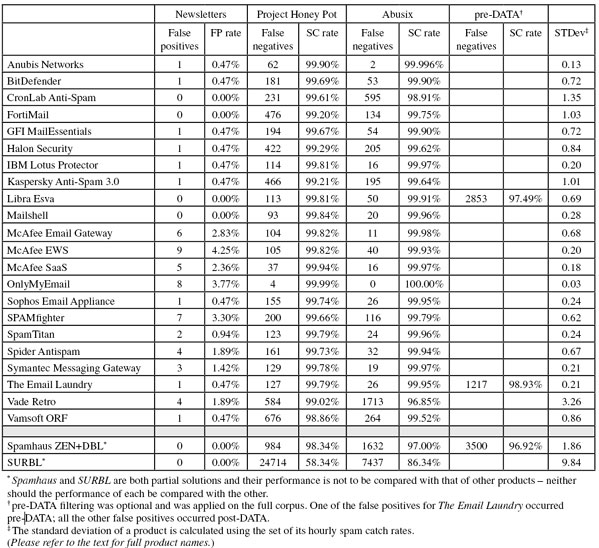

The corpus contained 119,105 emails, 113,774 of which were spam. Of these, 59,321 were provided by Project Honey Pot and 54,453 were provided by Spamfeed.me, a product from Abusix. They were all relayed in real time, as were the 5,119 legitimate emails (‘ham’). The remaining 212 emails were all newsletters – a corpus that was introduced in the last test.
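The composition of the corpus can be cross-checked with a few lines of arithmetic. This is only a sketch: the dictionary keys are made-up labels, while the counts are the ones quoted above.

```python
# Counts as quoted in the text; the key names are illustrative labels.
corpus = {
    "spam_project_honey_pot": 59_321,
    "spam_abusix": 54_453,
    "ham": 5_119,
    "newsletters": 212,
}

spam_total = corpus["spam_project_honey_pot"] + corpus["spam_abusix"]
corpus_total = sum(corpus.values())

print(f"spam emails:  {spam_total}")
print(f"total emails: {corpus_total}")

# Share of each part of the corpus, as a percentage of all emails.
for name, count in corpus.items():
    print(f"{name}: {100 * count / corpus_total:.2f}%")
```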

Unfortunately, a number of problems plagued the test. Firstly, one of the main test machines crashed badly shortly before the test was due to start; time had to be spent finding a work-around, and the start of the test was postponed. Secondly, while the test was running, one of the routers connecting the VBSpam LAN to the Internet gave up the ghost no fewer than three times. Apart from causing gaps in the traffic, this meant we had to make absolutely sure that no product’s performance was affected.

Thankfully, the sizes of the various corpora were still large enough for the results to have statistical relevance. In fact, the addition of a number of new sources made the ham corpus larger than it has ever been before with more than 5,000 legitimate emails being sent through the products.

On this occasion we saw more products having difficulties with legitimate emails than in recent tests and, interestingly, it was mostly different emails that caused the problems. As a result, the top right-hand corner of the VBSpam quadrant has thinned out somewhat.

Among the spam, we noticed a large number of fake notifications claiming to come from Facebook, Twitter and AOL. This did not come as a surprise: phishing for login details of these sites has been going on for years. However, rather than phishing, these emails contained links (usually to hacked websites) that sent victims (via some redirects) to fake pharmacy sites. It is anyone’s guess as to whether this is an indication that selling fake Viagra earns spammers more money than harvesting social networking logins, but it is an interesting trend and leads one to wonder how such emails are counted in phishing statistics.

I also feel obliged to add that we saw very few 419 scams claiming to come from a relative of Muamar Gadhafi; many vendors reported a surge of such emails after the death of the Libyan leader, though it was probably more something that made for interesting blog posts than a significant new trend in spam. Of course, the various spellings of the late colonel’s name mean that it is not entirely trivial to search for such emails.

As in the previous test, all newsletters included in this report had the subscriber confirm the subscription and no more than five newsletters were included per sender. Again, non-confirmed-opt-in newsletters were also sent through the products and we saw that confirming subscriptions is strongly correlated with the delivery rate.

In the text that follows, unless otherwise specified, ‘ham’ or ‘legitimate email’ refers to email in the ham corpus – which excludes the newsletters – and a ‘false positive’ is a message in that corpus erroneously marked by a product as spam.

Because the size of the newsletter corpus is significantly smaller than that of the ham corpus, a missed newsletter will have a much greater effect on the newsletter false positive rate than a missed legitimate email will have on the FP rate for the ham corpus (e.g. one missed email in the ham corpus results in an FP rate of 0.02%, while one missed email in the newsletter corpus results in an FP rate of 0.5%).
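The difference in granularity can be illustrated with a short calculation. This is a sketch with an illustrative helper name; the corpus sizes are those of this month's ham and newsletter corpora.

```python
HAM_CORPUS_SIZE = 5_119
NEWSLETTER_CORPUS_SIZE = 212


def fp_rate(missed: int, corpus_size: int) -> float:
    """False positive rate as a percentage of the corpus."""
    return 100 * missed / corpus_size


# One blocked email moves the two rates by very different amounts.
print(f"ham:         {fp_rate(1, HAM_CORPUS_SIZE):.2f}% per missed email")
print(f"newsletters: {fp_rate(1, NEWSLETTER_CORPUS_SIZE):.2f}% per missed email")
```

A single missed newsletter therefore shows up as 0.47% in the results, and every newsletter FP rate in this report is a multiple of that step.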

SC rate: 99.94%

FP rate: 0.06%

Final score: 99.65

Project Honey Pot SC rate: 99.90%

Abusix SC rate: 99.996%

Newsletters FP rate: 0.47%

After achieving the highest final score in the last test Anubis Networks takes a step down, missing three legitimate emails from this month’s corpus. It is, however, only a small step – the product’s final score is still very decent and earns the Portuguese solution its eighth VBSpam award in as many tests.

SC rate: 99.79%

FP rate: 0.00%

Final score: 99.79

Project Honey Pot SC rate: 99.69%

Abusix SC rate: 99.90%

Newsletters FP rate: 0.47%

BitDefender continues to be the only product to have won a VBSpam award in every test so far (and indeed the only product to have been submitted to every test since the beginning). In this test the Romanian anti-spam solution once again combined a good spam catch rate with zero false positives, which resulted in the third-highest final score and the product’s 16th VBSpam award.

SC rate: 99.27%

FP rate: 0.00%

Final score: 99.27

Project Honey Pot SC rate: 99.61%

Abusix SC rate: 98.91%

Newsletters FP rate: 0.00%

CronLab Anti-Spam is a newcomer to the VBSpam test. The company is headquartered in London, while its product development takes place in Sweden. CronLab offers both a hardware and a hosted anti-spam solution; we tested the latter.

I am agnostic when it comes to what kind of anti-spam solution is best and I do believe that different kinds of solutions serve different kinds of customers. However, the smooth set-up of this product – which involved little more than changing some MX records – was a good demonstration of how a hosted solution can save the customer a lot of work. I had little reason to look more closely at the product, since it worked well right away, but was pleased to learn that administrators can allow end-users to manage their own quarantines.

I was also pleased by the product’s lack of false positives, even among the newsletters. This is not common for products on their first appearance – even less so in a test where many other products had difficulties with the ham corpus. The product’s spam catch rate could be improved upon, though it is already well over 99% and the CronLab team should be congratulated on their first VBSpam award.

SC rate: 99.46%

FP rate: 0.00%

Final score: 99.46

Project Honey Pot SC rate: 99.20%

Abusix SC rate: 99.75%

Newsletters FP rate: 0.00%

FortiMail had won a VBSpam award in 14 consecutive tests before this one, and this month it notches up its 15th award with a clean sheet on both the ham corpus and the newsletters.

SC rate: 99.78%

FP rate: 0.12%

Final score: 99.20

Project Honey Pot SC rate: 99.67%

Abusix SC rate: 99.90%

Newsletters FP rate: 0.47%

This test is as much about helping developers improve their products’ performance as it is about measuring which products perform well. Therefore I was pleased to see improvements in both the spam catch rate and the false positive rate of GFI’s MailEssentials; it even missed fewer newsletters than before. The product’s fourth VBSpam award is well deserved.

SC rate: 99.45%

FP rate: 0.00%

Final score: 99.45

Project Honey Pot SC rate: 99.29%

Abusix SC rate: 99.62%

Newsletters FP rate: 0.47%

Halon saw a slight drop in its spam catch rate this month, which no doubt will be something the product’s developers will want to improve upon in the next test. However, this test also saw the product’s false positive rate return to zero, resulting in an improvement in the final score. This means that the company can add a fifth consecutive VBSpam award to its tally.

SC rate: 99.89%

FP rate: 0.04%

Final score: 99.69

Project Honey Pot SC rate: 99.81%

Abusix SC rate: 99.97%

Newsletters FP rate: 0.47%

In literary or music circles, it is common to talk about the ‘difficult second’ book or album. For IBM Lotus Protector, this was certainly not a difficult second test: the virtual solution continued with an excellent spam catch rate and, as it missed just two legitimate emails, an improved final score, earning the product its second VBSpam award with the fourth highest final score overall.

SC rate: 99.42%

FP rate: 0.02%

Final score: 99.32

Project Honey Pot SC rate: 99.21%

Abusix SC rate: 99.64%

Newsletters FP rate: 0.47%

The people at Kaspersky are well aware of the difficulty in distinguishing legitimate email from well-crafted spam that tries hard to appear legitimate: they recently discovered a spam campaign selling fake copies of their own product. Kaspersky Anti-Spam does a good job of filtering this and other spam campaigns though, and its spam catch rate improved on this occasion quite a bit. With just a single false positive the product earns yet another VBSpam award.

SC rate: 99.86%

FP rate: 0.08%

Final score: 99.47

Project Honey Pot SC rate: 99.81%

Abusix SC rate: 99.91%

SC rate pre-DATA: 97.49%

Newsletters FP rate: 0.00%

During this test Libra Esva’s developers informed me that the installation of the product we use had been upgraded to the 2.5 version; I had not realised this as the product performs seamlessly and gives me little reason to log into the virtual appliance. Once again, the spam catch rate was good, but unfortunately four legitimate emails (all from the same sender) were blocked, resulting in a slightly lower final score than previously. The product still easily wins its tenth VBSpam award though.

SC rate: 99.90%

FP rate: 0.00%

Final score: 99.90

Project Honey Pot SC rate: 99.84%

Abusix SC rate: 99.96%

Newsletters FP rate: 0.00%

In this, its second test, Mailshell proved to be more than a one-hit wonder: the SDK, which is used by other anti-spam solutions, continued to block all but one in 1,000 spam emails and, once again, missed no legitimate emails or newsletters. With the second highest final score, the product’s second VBSpam award is well deserved.

SC rate: 99.90%

FP rate: 0.33%

Final score: 98.24

Project Honey Pot SC rate: 99.82%

Abusix SC rate: 99.98%

Newsletters FP rate: 2.83%

McAfee’s Email Gateway appliance yet again achieved one of the highest spam catch rates in the test, but the excitement this provoked was somewhat tempered by a fairly large number of false positives. The product still achieved a high enough final score to earn a VBSpam award, but we hope to see an improvement on this performance in the next test, to prove that this was just a temporary glitch.

SC rate: 99.87%

FP rate: 0.10%

Final score: 99.38

Project Honey Pot SC rate: 99.82%

Abusix SC rate: 99.93%

Newsletters FP rate: 4.25%

Unlike for the other two McAfee products in this test, false positives were not a major issue for the Email and Web Security Appliance: five legitimate emails were missed, which was the average for all full solutions. With a good spam catch rate, the product easily adds another VBSpam award to its tally.

SC rate: 99.95%

FP rate: 0.39%

Final score: 98.00

Project Honey Pot SC rate: 99.94%

Abusix SC rate: 99.97%

Newsletters FP rate: 2.36%

With the second highest spam catch rate once again, McAfee SaaS Email Protection does credit to its name. However, this comes at a price as the product also scored the highest false positive rate. McAfee earns another VBSpam award, though the product’s developers will probably be slightly disappointed with its final score.

SC rate: 99.996%

FP rate: 0.00%

Final score: 100.00

Project Honey Pot SC rate: 99.99%

Abusix SC rate: 100.00%

Newsletters FP rate: 3.77%

Any participants who believe that this month’s corpora contained very difficult emails should take a leaf out of OnlyMyEmail’s book. The product’s spam catch rate of 99.996% (four missed spam messages) may not be surprising given previous results, but it is impressive nevertheless – as is, of course, the fact that no legitimate emails were missed. The eight missed newsletters may be a minor concern, but the product’s seventh consecutive VBSpam award was won with the highest final score for the fourth time.

SC rate: 99.84%

FP rate: 0.08%

Final score: 99.45

Project Honey Pot SC rate: 99.74%

Abusix SC rate: 99.95%

Newsletters FP rate: 0.47%

One incorrectly blacklisted IP address accounted for all four legitimate emails missed by Sophos’s Email Appliance, which was a shame, given that the product’s spam catch rate was high as usual. Of course, it meant that the final score was lower than in the previous test, but it was still good and the appliance wins its 11th VBSpam award in as many tests.

SC rate: 99.72%

FP rate: 0.35%

Final score: 97.96

Project Honey Pot SC rate: 99.66%

Abusix SC rate: 99.79%

Newsletters FP rate: 3.30%

SPAMfighter saw its spam catch rate increase a fair bit, but this was put into perspective by the 18 legitimate emails and seven newsletters missed by the product. This did not prevent the product from winning a VBSpam award, but it is up to the developers to show in the next test that this was a one-off slip.

SC rate: 99.87%

FP rate: 0.21%

Final score: 98.80

Project Honey Pot SC rate: 99.79%

Abusix SC rate: 99.96%

Newsletters FP rate: 0.94%

SpamTitan was one of several products to experience problems with the legitimate emails in this test, missing 11 of them, compared with two in the previous test. Of course, this resulted in a lower final score but it was still well above the VBSpam certification threshold – and it was nice to see the product’s newsletter false positive rate decrease a fair amount.

SC rate: 99.83%

FP rate: 0.06%

Final score: 99.54

Project Honey Pot SC rate: 99.73%

Abusix SC rate: 99.94%

Newsletters FP rate: 1.89%

Spider Antispam managed to maintain an excellent spam catch rate in its third test. A handful of false positives caused a slight drop in its final score, but a third VBSpam award was still easily won.

SC rate: 99.87%

FP rate: 0.08%

Final score: 99.48

Project Honey Pot SC rate: 99.78%

Abusix SC rate: 99.97%

Newsletters FP rate: 1.42%

The final score for Symantec Messaging Gateway also dropped this month due to an increase in false positives – in this case increasing from one to four. Of course, this is not something to ignore, but it did not jeopardize the product’s 12th consecutive VBSpam award.

SC rate: 99.87%

FP rate: 0.08%

Final score: 99.47

Project Honey Pot SC rate: 99.79%

Abusix SC rate: 99.95%

SC rate pre-DATA: 98.93%

Newsletters FP rate: 0.47%

While other products saw their pre-DATA catch rates decrease significantly, that of The Email Laundry barely declined as once again close to 99% of all spam was blocked based on sender and IP address reputation. After scanning the content of the email, the catch rate was improved further and while there were four false positives, the hosted solution easily earned its tenth consecutive VBSpam award.

SC rate: 97.98%

FP rate: 0.16%

Final score: 97.20

Project Honey Pot SC rate: 99.02%

Abusix SC rate: 96.85%

Newsletters FP rate: 1.89%

A few days before the end of the test, Vade Retro’s spam catch rate dropped suddenly – something which could be attributed to a lot of false negatives on AOL ‘phishing’ emails. We do not provide participants with feedback on their performance while a test is running, but when I contacted the developers after the test had finished, they found what they believe is a bug in the particular product set-up used in our test. For now, a VBSpam award is won with a final score that barely made the threshold; we look forward to seeing the product bounce back from this slip.

SC rate: 99.17%

FP rate: 0.00%

Final score: 99.17

Project Honey Pot SC rate: 98.86%

Abusix SC rate: 99.52%

Newsletters FP rate: 0.47%

It is good when filter developers include well-designed quarantine systems in their filters, but it is even better when their products avoid false positives in the first place. Vamsoft ORF tends to do that and this month wins its tenth consecutive VBSpam award. Six of those were won without any false positives, a shared record among participants.

SC rate: 97.70%

FP rate: 0.00%

Final score: 97.70

Project Honey Pot SC rate: 98.34%

Abusix SC rate: 97.00%

SC rate pre-DATA: 96.92%

Newsletters FP rate: 0.00%

One of the advantages of including Spamhaus in these tests is that it demonstrates the extent to which spammers are sending mail using IP addresses and domains that are also used by legitimate senders (and thus are not likely to be blocked). Of course, we cannot say how much of the drop in the blacklist’s catch rate is due to it failing to keep up with the spammers, but it is likely that the drop merely shows a shift in their activity. As on previous occasions Spamhaus avoided false positives in both the ham corpus and the newsletter corpus, and even with the lower catch rate, it wins yet another VBSpam award.

SC rate: 71.74%

FP rate: 0.00%

Final score: 71.74

Project Honey Pot SC rate: 58.34%

Abusix SC rate: 86.34%

Newsletters FP rate: 0.00%

Like Spamhaus, the performance of the SURBL domain blacklist depends partly on spammers’ activity. If they use compromised legitimate domains in their spam messages, these are less likely to be blocked by domain blacklists. The social media spam described earlier is an example of such a blacklist-avoiding spam, as is spam with malicious attachments (which more often than not does not contain any URLs). Still, it was nice to see the blacklist’s catch rate increase by over eight percentage points, while not compromising its perfect false positive score.


Many readers of the VBSpam reports have expressed their wish to see more differentiation in the VBSpam quadrant – something I absolutely agree with. While a poorer performance overall is not something I would have hoped for, it does make the top right-hand corner of the VBSpam quadrant less crowded and thus makes for interesting reading.

Several participating products will have to work hard over the coming weeks to prove that their disappointing performance in this month’s test was nothing more than a temporary slip-up. Some developers may even have to fix their products using the feedback we provide them with.

After having attended both VB2011 and MAAWG’s Paris meeting recently, and having had discussions with several of the participants, I came away with some new ideas for the test which are already being worked on. We hope to include some of these in the next VBSpam test, which will run in December 2011, with the results scheduled for publication in January 2012. Developers interested in submitting products should email [email protected].