2011-01-01

Abstract

Nineteen full solutions and one partial solution were put to the test in the VBSpam lab this month. Martijn Grooten has the details.

Copyright © 2011 Virus Bulletin

2011 started off with some good news: in the final weeks of December, the amount of spam circulating globally decreased significantly, adding to the general decline in spam volumes seen during the second half of 2010.

However, it would be wrong to suggest that spam is going away any time soon – or even that it will cease to be a problem in the future. Spammers are already finding ways to make up for the decrease in spam quantity – for instance by individually targeting their victims. Indeed, several cases of spear-phishing have recently made the news.

Thus in 2011, organizations will still need solutions to deal with massive streams of unsolicited emails and VB will continue to test such solutions in the VBSpam certification scheme.

Readers will notice that among the 18 products that have earned a VBSpam award in this month’s review, the differences in performance are often very small. To discover which of these products works best for a particular organization, running a trial might be useful (most vendors offer this possibility) – such a trial would also provide the opportunity to evaluate a product’s usability and additional features. The Messaging Anti-Abuse Working Group (MAAWG) has produced a useful document that explains how organizations can conduct such tests in-house and, in general, how to evaluate email anti-abuse products. The document can be found at https://www.maawg.org/system/files/news/MAAWG_Anti-Abuse_Product_Evaluation_BCP.pdf.

The VBSpam test methodology can be found at http://www.virusbtn.com/vbspam/methodology/. As usual, email was sent to the products in parallel and in real time, and products were given the option to block email pre-DATA. Four products chose to make use of this option.

As in previous tests, the products that needed to be installed on a server were installed on a Dell PowerEdge R200 with a 3.0GHz dual-core processor and 4GB of RAM. The Linux products ran on SUSE Linux Enterprise Server 11; the Windows Server products ran on either the 2003 or the 2008 version, depending on which was recommended by the vendor.

To compare the products, we calculate a ‘final score’, which is defined as the spam catch (SC) rate minus five times the false positive (FP) rate. Products earn VBSpam certification if this value is at least 97:

SC − (5 × FP) ≥ 97

Note that this is different from the formula used in previous tests, where the weight of the false positives was three and the threshold was 96.
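As a minimal sketch of the calculation (not VB's own tooling), the Python below computes the rates from raw counts and applies the formula. The helper names are hypothetical; the counts (89,027 spam and 2,357 legitimate emails, plus 19 missed spam emails and one false positive for McAfee’s Email Gateway) are taken from this review.

```python
# Hypothetical helpers illustrating the VBSpam final-score formula.
SPAM_TOTAL = 89_027  # spam emails in this test's corpus
HAM_TOTAL = 2_357    # legitimate emails in this test's corpus


def rate(count: int, total: int) -> float:
    """Return count/total as a percentage."""
    return 100.0 * count / total


def final_score(sc: float, fp: float, fp_weight: float = 5.0) -> float:
    """SC rate minus fp_weight times the FP rate (both in per cent)."""
    return sc - fp_weight * fp


def certified(score: float, threshold: float = 97.0) -> bool:
    """A product qualifies when its final score is at least the threshold."""
    return score >= threshold


# McAfee Email Gateway in this test: 19 spam emails missed, 1 false positive.
sc = rate(SPAM_TOTAL - 19, SPAM_TOTAL)
fp = rate(1, HAM_TOTAL)
score = final_score(sc, fp)
print(f"SC {sc:.2f}%  FP {fp:.2f}%  final {score:.2f}  certified: {certified(score)}")
# prints: SC 99.98%  FP 0.04%  final 99.77  certified: True
```

Working from unrounded rates matters: 99.98 − 5 × 0.04 would give 99.78, whereas the unrounded calculation reproduces the published 99.77. Passing `fp_weight=3.0` and `threshold=96.0` gives the formula used in previous tests.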

The test ran for just over 15 consecutive days, from around 6am GMT on Saturday 18 December 2010 until midday on Sunday 2 January 2011.

The corpus contained 91,384 emails, 89,027 of which were spam. Of these spam emails, 32,609 were provided by Project Honey Pot and the other 56,418 were provided by Abusix; in both cases, the messages were relayed in real time, as were the 2,357 legitimate emails. As before, the legitimate emails were sent in a number of languages to represent an international mail stream.
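Because every product's overall spam catch rate is measured over both feeds, it equals the per-feed catch rates weighted by each feed's share of the spam corpus. The sketch below (hypothetical names; counts from the paragraph above, per-feed rates from AnubisNetworks’ results in this review) checks the corpus arithmetic and recovers AnubisNetworks’ overall 99.38% from its 98.63% Project Honey Pot and 99.81% Abusix rates.

```python
from typing import Dict

# Corpus figures for this test.
TOTAL_EMAILS = 91_384
SPAM_FEEDS = {"Project Honey Pot": 32_609, "Abusix": 56_418}

spam_total = sum(SPAM_FEEDS.values())  # 89,027 spam emails
ham_total = TOTAL_EMAILS - spam_total  # 2,357 legitimate emails


def overall_sc(feed_sc: Dict[str, float]) -> float:
    """Weight each feed's SC rate (per cent) by the number of spam emails it supplied."""
    return sum(feed_sc[name] * count for name, count in SPAM_FEEDS.items()) / spam_total


anubis = overall_sc({"Project Honey Pot": 98.63, "Abusix": 99.81})
print(spam_total, ham_total, f"{anubis:.2f}")
# prints: 89027 2357 99.38
```

Small discrepancies can appear for other products because the published per-feed rates are themselves rounded.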

The graph below (Figure 1) shows the average catch rate of all full solutions throughout the test. As one can see, the decline in global spam volume coincided with a small decline in the average product’s performance.

In the previous review we looked at the geographical origin of the spam messages and compared those of the full corpus to those spam messages missed by at least two solutions. We saw, for instance, that spam from the US appears to be relatively easy to filter, while spam from various Asian countries is more likely to make it to users’ inboxes.

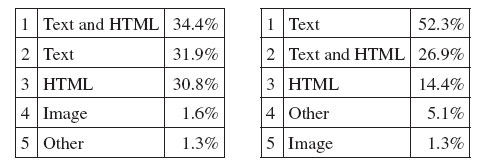

This time, we looked at the content of the messages, based on the MIME type of the message body. We distinguished five categories: messages with a body consisting of plain text; those with a pure HTML body; those with both plain text and HTML in the body; those with one or more embedded images; and other kinds of messages, including delivery status notifications (DSNs), messages with attached documents and those with an unclear and possibly broken MIME structure.
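The review does not say how messages were assigned to these categories. One plausible approach, sketched below with Python's standard `email` package, looks at the leaf MIME parts of each message. The category labels, the precedence order and the use of parser defects as a proxy for a broken MIME structure are all assumptions, and this simple version does not distinguish inline images from attached ones.

```python
from email import message_from_string
from email.message import EmailMessage, Message


def categorize(msg: Message) -> str:
    """Assign a spam message to one of five content categories (assumed scheme)."""
    parts = list(msg.walk())
    # Parser-reported defects are used here as a proxy for a broken MIME structure.
    if any(part.defects for part in parts):
        return "other"
    # Leaf parts carry the actual content; multipart containers only group them.
    leaf_types = [part.get_content_type() for part in parts if not part.is_multipart()]
    if any(t.startswith("image/") for t in leaf_types):
        return "image"
    kinds = set(leaf_types)
    if kinds == {"text/plain"}:
        return "plain"
    if kinds == {"text/html"}:
        return "html"
    if kinds == {"text/plain", "text/html"}:
        return "plain+html"
    # DSNs (message/delivery-status), attached documents and anything else end up here.
    return "other"


# Usage: a multipart/alternative message with both a text and an HTML version.
example = EmailMessage()
example.set_content("Cheap watches")
example.add_alternative("<p>Cheap watches</p>", subtype="html")
print(categorize(example))  # plain+html

# A message that claims to be multipart but defines no boundary.
broken = message_from_string("Content-Type: multipart/mixed\n\nno boundary parameter")
print(categorize(broken))  # other
```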

The table below (Figure 2) shows the relative occurrence of each category: on the left among the full spam corpus, on the right among the messages missed by at least two solutions. As in the previous test, the latter accounted for slightly fewer than 1 in 60 spam messages.

Figure 2. Left: Content of messages seen in the spam feeds. Right: Content of spam messages missed by at least two full solutions.
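The comparison in Figure 2 can be reproduced from per-message data along the following lines: count each category across the whole spam corpus, then again among only the messages that at least two full solutions missed. The record layout and the toy data below are hypothetical and are not taken from the test.

```python
from collections import Counter
from typing import Dict, List, Tuple

CATEGORIES = ("plain", "html", "plain+html", "image", "other")

# Hypothetical record: (content category, number of full solutions that missed it).
Record = Tuple[str, int]


def category_shares(records: List[Record], min_misses: int = 0) -> Dict[str, float]:
    """Share of each category among messages missed by at least min_misses solutions."""
    counts = Counter(cat for cat, misses in records if misses >= min_misses)
    total = sum(counts.values())
    return {cat: (counts[cat] / total if total else 0.0) for cat in CATEGORIES}


# Toy data for illustration only.
records = [
    ("plain", 3), ("plain", 0), ("html", 0), ("html", 0),
    ("html", 1), ("plain+html", 0), ("image", 2), ("other", 0),
]
whole_corpus = category_shares(records)                  # left-hand side of Figure 2
hard_to_filter = category_shares(records, min_misses=2)  # right-hand side
for cat in CATEGORIES:
    print(f"{cat:<11} {whole_corpus[cat]:6.1%} {hard_to_filter[cat]:6.1%}")
```

A category whose share is larger on the right than on the left is over-represented among the spam that filters struggle with.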

An interesting finding is that plain text messages, which in theory are the easiest for a content filter to scan, are significantly more likely to cause problems for spam filters than other message types. On the other hand, messages containing HTML in the body, especially those with a pure HTML body, tend to be easier to filter.

Whether this really says something about spam filtering or whether this is a side effect of current filtering techniques (it could well be that the bots sending out HTML spam are easier to detect for other reasons) remains to be seen. We will certainly keep an eye on future results to see if this observed behaviour changes over time.

SC rate: 99.38%

FP rate: 0.00%

Final score: 99.38

Project Honey Pot SC rate: 98.63%

Abusix SC rate: 99.81%

AnubisNetworks achieved the highest final score in the previous test – and although the Portuguese product did not manage to repeat the achievement this month, it did score an excellent spam catch rate and, like last time, no false positives. AnubisNetworks thus easily earns its fourth VBSpam award.

SC rate: 99.79%

FP rate: 0.04%

Final score: 99.58

Project Honey Pot SC rate: 99.52%

Abusix SC rate: 99.95%

BitDefender’s final score improved slightly this month, thanks to a reduction in the number of false positives to just one. BitDefender thus continues its unbroken run of VBSpam awards, having earned one in every test to date.

SC rate: 99.80%

FP rate: 0.13%

Final score: 99.17

Project Honey Pot SC rate: 99.77%

Abusix SC rate: 99.82%

If there were an award for the greatest improvement then FortiMail would certainly win it: the hardware appliance saw improvements to both its false positive rate and, most impressively, its spam catch rate, earning the product its tenth consecutive VBSpam award.

SC rate: 98.45%

FP rate: 0.42%

Final score: 96.33

Project Honey Pot SC rate: 98.23%

Abusix SC rate: 98.58%

In this test, GFI’s VIPRE missed just over 1.5 per cent of all spam emails. That in itself was not a problem (though it does, of course, leave some room for improvement), but the product also blocked ten legitimate emails. With such a high false positive rate, users may be less likely to forgive the product for letting the odd spam message through to their inboxes. With the lowest final score of all products in the test, VIPRE fails to win a VBSpam award this month.

SC rate: 99.58%

FP rate: 0.04%

Final score: 99.37

Project Honey Pot SC rate: 99.04%

Abusix SC rate: 99.90%

As in the previous test, Kaspersky’s anti-spam solution managed to keep the number of incorrectly classified legitimate emails down to just one, so the extra weighting on false positives introduced in the final score calculations this month did not cause much of an issue. In fact, with an improved spam catch rate, the product saw its final score increase and thus it easily wins its ninth VBSpam award.

SC rate: 99.97%

SC rate pre-DATA: 98.96%

FP rate: 0.00%

Final score: 99.97

Project Honey Pot SC rate: 99.94%

Abusix SC rate: 99.99%

As I have said in previous reviews, in the business of spam filters the devil is in the details. However, sometimes tiny details fall within the statistical margin of error: Libra Esva missed just four more spam emails than the best-performing product in this test and, as the virtual solution did not block any legitimate email, it achieved the second highest final score. Esva’s Italian developers should consider their product one of the winners of this test.
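To give a sense of scale, here is a sketch using the Wilson score interval for the proportion of spam missed. It assumes, for illustration, that the best-performing product missed 19 of the 89,027 spam emails (the count given for McAfee’s Email Gateway, which shared the best catch rate) and that Libra Esva missed four more. The two 95% intervals overlap comfortably. Overlapping intervals are only a rough, conservative check, not a formal significance test.

```python
import math
from typing import Tuple


def wilson_interval(k: int, n: int, z: float = 1.96) -> Tuple[float, float]:
    """Wilson score interval for a proportion k/n (z = 1.96 gives roughly 95%)."""
    p = k / n
    denom = 1 + z * z / n
    centre = (p + z * z / (2 * n)) / denom
    half_width = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n)) / denom
    return centre - half_width, centre + half_width


SPAM_TOTAL = 89_027
best_missed = 19               # assumed: count quoted for the joint-best catch rate
esva_missed = best_missed + 4  # "just four more spam emails"

best = wilson_interval(best_missed, SPAM_TOTAL)
esva = wilson_interval(esva_missed, SPAM_TOTAL)
overlap = best[0] <= esva[1] and esva[0] <= best[1]
for name, (low, high) in (("best", best), ("Libra Esva", esva)):
    print(f"{name}: missed between {100 * low:.3f}% and {100 * high:.3f}% of spam")
print("intervals overlap:", overlap)
```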

SC rate: 99.98%

FP rate: 0.04%

Final score: 99.77

Project Honey Pot SC rate: 99.95%

Abusix SC rate: 99.99%

McAfee’s Email Gateway appliance saw its spam catch rate improve, with just 19 spam emails missed, giving the product the joint best spam catch rate. But perhaps more impressive is the fact that its false positive rate was greatly reduced: just one legitimate email was mislabelled. With the fifth highest final score in this test, McAfee’s Email Gateway wins yet another VBSpam award.

SC rate: 99.39%

FP rate: 0.00%

Final score: 99.39

Project Honey Pot SC rate: 98.43%

Abusix SC rate: 99.95%

The second McAfee appliance also saw improvements to both its spam catch rate and its false positive rate; here it is the total lack of false positives that is most impressive. A very decent spam catch rate combined with that lack of false positives means that the product easily achieves its ninth consecutive VBSpam award.

SC rate: 99.91%

FP rate: 0.30%

Final score: 98.42

Project Honey Pot SC rate: 99.83%

Abusix SC rate: 99.95%

Fewer than one in 1,000 spam messages sent through MessageStream’s hosted solution made it to our MTA, which shows just how much impact a spam filter can have. The product scored more false positives than the average solution in this test, which should be of some concern to the developers and something for them to work on, but MessageStream still easily won its tenth VBSpam award.

SC rate: 99.98%

FP rate: 0.00%

Final score: 99.98

Project Honey Pot SC rate: 99.98%

Abusix SC rate: 99.98%

OnlyMyEmail’s MX-Defender made an impressive debut last month with the highest spam catch rate in the test. Not only did it repeat that achievement this time, but it also produced no false positives at all. This gives the product the highest final score this month, along with a very well-deserved VBSpam award.

SC rate: 99.24%

FP rate: 0.47%

Final score: 96.90

Project Honey Pot SC rate: 98.61%

Abusix SC rate: 99.61%

False positives caused problems for Pro-Mail’s hosted solution last month and continued to do so in this test: the product blocked more legitimate email than any other solution in the test. While its spam catch rate was good, the high false positive rate was enough to keep its final score just below the threshold of 97, thus denying it a VBSpam award.

SC rate: 99.89%

FP rate: 0.04%

Final score: 99.68

Project Honey Pot SC rate: 99.75%

Abusix SC rate: 99.98%

Sophos’s hardware appliance combined another very decent spam catch rate with just one false positive (compared to four in the previous test) to give the product an excellent final score and earn it its sixth VBSpam award in as many tests.

SC rate: 99.31%

FP rate: 0.25%

Final score: 98.04

Project Honey Pot SC rate: 99.24%

Abusix SC rate: 99.35%

SPAMfighter Mail Gateway saw its spam catch rate improve for the second time in a row. Its false positive rate increased (something the developers hope a new scanning engine will address), but it still easily achieved its eighth VBSpam award.

SC rate: 99.97%

FP rate: 0.00%

Final score: 99.97

Project Honey Pot SC rate: 99.98%

Abusix SC rate: 99.97%

SpamTitan not only equalled the stunning spam catch rate it displayed in the previous test, but the virtual appliance also correctly identified all legitimate emails. With close to the highest final score, SpamTitan is among the winners of this test.

SC rate: 99.94%

FP rate: 0.04%

Final score: 99.73

Project Honey Pot SC rate: 99.88%

Abusix SC rate: 99.98%

This month’s test proved that the relatively high false positive rate displayed by Symantec’s virtual appliance in the last test was a one-off incident. With just one false positive this time, and a decent spam catch rate, the product is among the better performers in the test.

SC rate: 99.87%

SC rate pre-DATA: 99.57%

FP rate: 0.00%

Final score: 99.87

Project Honey Pot SC rate: 99.66%

Abusix SC rate: 99.99%

In its pre-DATA filtering alone, The Email Laundry blocked more spam than several solutions did overall. During the content scanning phase, more than two-thirds of the remaining spam was blocked. This, combined with the fact that there wasn’t a single false positive, gave the hosted solution the fourth highest final score.
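The ‘more than two-thirds’ figure follows from the two published catch rates: whatever got past the pre-DATA stage (100% minus the pre-DATA SC rate) is the pool the content filter works on. A small sketch with a hypothetical helper name, applied to the three products for which this review lists a pre-DATA rate:

```python
def content_share(sc_total: float, sc_pre_data: float) -> float:
    """Fraction of the spam that survived pre-DATA filtering which content scanning then caught."""
    remaining = 100.0 - sc_pre_data        # % of spam still undecided after pre-DATA
    caught_later = sc_total - sc_pre_data  # % of spam caught during content scanning
    return caught_later / remaining


# (overall SC rate, pre-DATA SC rate) in per cent, as published in this review.
results = {
    "The Email Laundry": (99.87, 99.57),
    "Libra Esva": (99.97, 98.96),
    "Webroot": (99.90, 77.35),
}
for product, (sc, pre) in results.items():
    print(f"{product}: {content_share(sc, pre):.1%}")
```

For The Email Laundry this works out as 0.30 / 0.43, or roughly 70 per cent of the spam that reached the content scanner.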

SC rate: 99.74%

FP rate: 0.08%

Final score: 99.32

Project Honey Pot SC rate: 99.45%

Abusix SC rate: 99.91%

Both the spam catch rate and false positive rate for Vade Retro were slightly better in the last test, but the French hosted solution had some leeway: both are still good, and with a more than decent final score, the product wins its fifth consecutive VBSpam award.

SC rate: 99.39%

FP rate: 0.00%

Final score: 99.39

Project Honey Pot SC rate: 98.90%

Abusix SC rate: 99.67%

ORF’s false positive rates have always been among the lowest of the products we’ve tested, so we were not surprised to see it among those that scored zero false positives in this month’s test. With another very good spam catch rate, ORF earns its fifth VBSpam award in as many tests.

SC rate: 99.90%

SC rate pre-DATA: 77.35%

FP rate: 0.13%

Final score: 99.27

Project Honey Pot SC rate: 99.92%

Abusix SC rate: 99.89%

Webroot’s spam catch rate has been close to 100% for many tests in a row and this one was no exception. I was pleased to see a good reduction in the number of false positives as well, giving the hosted solution an improved final score even using the more challenging formula, and earning the product its tenth consecutive VBSpam award.

SC rate: 98.68%

FP rate: 0.00%

Final score: 98.68

Project Honey Pot SC rate: 97.80%

Abusix SC rate: 99.18%

This test showed that the false positives produced by Spamhaus last month really were just a hiccup: in this test (as in all tests except the previous one), no legitimate email was blocked using the combination of the IP-based (Zen) and domain-based (DBL) blacklists. Meanwhile, a lot of spam was blocked, and with a final score far from the lowest, Spamhaus demonstrates that, even as only a partial solution, it is well up to the task.
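Both Spamhaus lists are queried over DNS. For Zen, the octets of the sending IPv4 address are reversed and prepended to the list's zone; for DBL, the domain itself is prepended. An answer means the entry is listed, and NXDOMAIN means it is not. Below is a minimal Python sketch with hypothetical helper names. How the two lists were combined in the test set-up is not described here. Spamhaus may also refuse queries sent via public resolvers and return an error code rather than a listing, so the returned address should be checked against Spamhaus's documented return codes.

```python
import ipaddress
import socket
from typing import Optional

ZEN_ZONE = "zen.spamhaus.org"  # IP-based list
DBL_ZONE = "dbl.spamhaus.org"  # domain-based list


def zen_query_name(ip: str) -> str:
    """Reverse the octets of an IPv4 address and append the Zen zone."""
    addr = ipaddress.IPv4Address(ip)  # raises ValueError for anything but IPv4
    return ".".join(reversed(str(addr).split("."))) + "." + ZEN_ZONE


def dbl_query_name(domain: str) -> str:
    """Normalise a domain name and append the DBL zone."""
    return domain.strip().rstrip(".").lower() + "." + DBL_ZONE


def lookup(query_name: str) -> Optional[str]:
    """Return the A record for a DNSBL query, or None if the name does not resolve.

    An answer is normally a code in 127.0.0.0/8, but it may be an error code
    rather than a listing, so callers should interpret it. None also covers
    resolver failures, not only NXDOMAIN.
    """
    try:
        return socket.gethostbyname(query_name)
    except socket.gaierror:
        return None


if __name__ == "__main__":
    print(zen_query_name("192.0.2.1"))     # 1.2.0.192.zen.spamhaus.org
    print(dbl_query_name("Example.COM."))  # example.com.dbl.spamhaus.org
```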

Developers of the two products that failed to win a VBSpam award this time around will be hard at work in the interim between this test and the next to improve their products’ performance and ensure they make it to the winners’ podium next time. Meanwhile, the developers of the other products will have to demonstrate that they are capable of keeping up with the way spam changes.

And so will we at Virus Bulletin. Just as the developers of anti-spam solutions can never rest on their laurels, we will keep looking at ways to improve our tests and make sure their results are as accurate a reflection of real customer experience as possible. Watch this space for an announcement of the exciting new additions we are planning.