2010-12-01

Abstract

One month later than planned, the tenth VBSpam report puts 19 full solutions to the test as well as one reputation blacklist. Martijn Grooten has all the details.

Copyright © 2010 Virus Bulletin

We owe an apology to regular readers of the VBSpam comparative reviews for having to wait an extra month for this review. The delay was not intentional – everything was on track for the test to run during the second week of October with a new, faster network and completely rewritten code to run the test (call it ‘VBSpam 2.0’ if you like) when we discovered that the new network suffered from unacceptable and unpredictable periods of downtime.

I often think of systems administrators when running the VBSpam tests: their jobs would be impossible without a reliable product to keep the vast majority of spam at bay, and I hope that these reviews give them some insight into which products are reliable. This time round, I suddenly felt like one of them: switching cables, restarting computers and routers, measuring downtime and throughput and spending many hours on the phone to the ISP’s helpdesk. For a long time my efforts were fruitless, but eventually we found a way to route traffic so that the downtime all but ceased to exist.

This tenth VBSpam report includes 19 full solutions as well as one reputation blacklist. As on some previous occasions, all products achieved a VBSpam award. I have explained before why I don’t believe this is a problem – for instance, there are several other solutions that weren’t submitted to the test, perhaps because their developers felt that they were not capable of the performance level required to qualify for an award. It also demonstrates that all of the participating products do a good job at blocking most spam while making few mistakes. Despite this, we do feel that, after ten tests, the time is ripe for the thresholds to be set a little higher and we will be reviewing them in time for the next test (more details on which later).

The test methodology can be found at http://www.virusbtn.com/vbspam/methodology/. Email was sent to the products in parallel and in real time, and products were given the option to block email pre-DATA. Four products chose to make use of this option.

As in previous tests, the products that needed to be installed on a server were installed on a Dell PowerEdge R200, with a 3.0GHz dual core processor and 4GB of RAM. The Linux products ran on SuSE Linux Enterprise Server 11; the Windows Server products ran on either the 2003 or the 2008 version, depending on which was recommended by the vendor.

To compare the products, we calculate a ‘final score’, which is currently defined as the spam catch (SC) rate minus three times the false positive (FP) rate. Products earn VBSpam certification if this value is at least 96:

SC - (3 x FP) ≥ 96
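The certification rule above can be written out in a few lines. This is a minimal sketch rather than the code used to run the test: the function names `final_score` and `is_certified` are illustrative, and the sample figures are the rounded rates quoted for one product later in this report.

```python
def final_score(sc_rate: float, fp_rate: float, fp_weight: float = 3.0) -> float:
    """Final score: spam catch rate minus the weighted false positive rate.

    Both rates are percentages (e.g. 98.50 for 98.50%).
    """
    return sc_rate - fp_weight * fp_rate


def is_certified(sc_rate: float, fp_rate: float,
                 fp_weight: float = 3.0, threshold: float = 96.0) -> bool:
    """True if the final score reaches the certification threshold."""
    return final_score(sc_rate, fp_rate, fp_weight) >= threshold


if __name__ == "__main__":
    # Rounded rates as published for one product: SC 98.50%, FP 0.21%.
    print(f"{final_score(98.50, 0.21):.2f}", is_certified(98.50, 0.21))
```

Because the published rates are rounded to two decimal places, a score recomputed this way can differ from the published final score by 0.01.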

The test ran for 14 consecutive days, from midnight on 5 November 2010 to midnight on 19 November 2010. It was interrupted twice during its final days because of a hard disk problem; no email was sent during these periods, but this did not affect the test.

The corpus contained 95,008 emails, 92,613 of which were spam. Of these spam emails, 42,741 were provided by Project Honey Pot and the other 49,872 were provided by Abusix; in both cases, the messages were relayed in real time, as were the 2,395 legitimate emails. As before, the legitimate emails were sent in a number of languages to represent an international mail stream.
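To relate the percentages in the product sections to actual numbers of emails, the corpus sizes above can be used directly. The sketch below assumes that each rate is simply a count divided by the relevant corpus size; the helper names `fp_rate` and `sc_rate` are illustrative and not part of the test software.

```python
HAM_TOTAL = 2_395     # legitimate emails in this test's corpus
SPAM_TOTAL = 92_613   # spam emails in this test's corpus (42,741 + 49,872)


def fp_rate(false_positives: int, ham_total: int = HAM_TOTAL) -> float:
    """Percentage of legitimate emails that were wrongly blocked."""
    return 100.0 * false_positives / ham_total


def sc_rate(missed_spam: int, spam_total: int = SPAM_TOTAL) -> float:
    """Percentage of spam emails that were caught."""
    return 100.0 * (spam_total - missed_spam) / spam_total


if __name__ == "__main__":
    for count in (1, 4):
        print(count, "false positive(s):", f"{fp_rate(count):.2f}%")
    print("7 missed spam:", f"{sc_rate(7):.2f}%")
```

Under that assumption, a single false positive corresponds to an FP rate of about 0.04%, and four false positives to about 0.17%.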

The graph below shows the average catch rate of all full solutions throughout the test. It shows, for instance, that a few days into the test, and again halfway through the second week, spam became more difficult to filter. Admittedly, the difference is small, but for larger organizations and ISPs this could have resulted in thousands of extra emails making it through their spam filters.

In previous tests, we reported products’ performance against large spam (messages of 50KB or larger), and against spam messages containing embedded images. In this test, the number of each of these message types dropped to levels that would be too low to draw any significant conclusions – indicating an apparent change in spammers’ tactics. Whether this change is permanent or only temporary remains to be seen.

Just over 93% of all spam messages were blocked by all products and just one in 60 messages was missed by more than one full solution. This was a significant improvement compared to previous tests, but again, only time will tell whether this improvement is permanent.
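Figures such as these are aggregates over per-message verdicts from every full solution. As a rough illustration of how they could be derived, the sketch below uses a tiny, entirely hypothetical set of verdicts (not data from the test) and two illustrative helper functions.

```python
from typing import Dict, List

# Hypothetical verdicts, NOT real test data: message id -> one boolean per
# full solution (True means that solution blocked the spam message).
VERDICTS: Dict[str, List[bool]] = {
    "msg-1": [True, True, True],
    "msg-2": [True, False, True],
    "msg-3": [False, False, True],
    "msg-4": [True, True, True],
}


def share_blocked_by_all(verdicts: Dict[str, List[bool]]) -> float:
    """Fraction of spam messages that every solution blocked."""
    return sum(all(row) for row in verdicts.values()) / len(verdicts)


def share_missed_by_more_than_one(verdicts: Dict[str, List[bool]]) -> float:
    """Fraction of spam messages that at least two solutions missed."""
    return sum(row.count(False) > 1 for row in verdicts.values()) / len(verdicts)


if __name__ == "__main__":
    print(share_blocked_by_all(VERDICTS))
    print(share_missed_by_more_than_one(VERDICTS))
```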

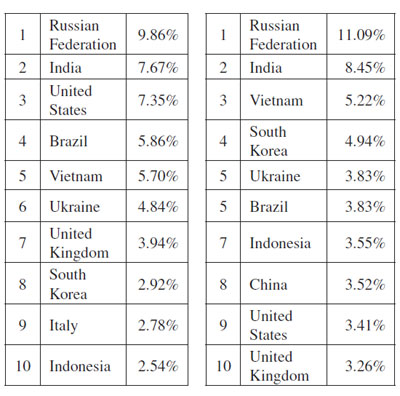

Both spam corpora used in the test contained spam sent from all over the world (the origin of a message is defined as the location of the computer from which it was sent; in the vast majority of cases, this will be a hijacked computer that is part of a botnet). Anti-spam vendors regularly publish a geographical distribution of the spam they have seen and we have done that too, in the left-hand part of the table below.

Figure 2. Left: Geographical distribution of the spam seen in the spam feeds. Right: Geographical distribution of spam messages missed by at least two full solutions.

However, it is just as interesting to see how hard it is to filter spam from the various regions. To give some insight into that, the table above right shows the geographical distribution of spam messages that were missed by at least two full solutions. It is worth noting that spam from Russia and several Asian countries appears to be hard to filter, whereas spam sent from computers in the United States doesn’t appear to pose much of a problem for filters.

Of course, it would be interesting to get similar data on the legitimate emails. However, the relatively small size of our ham corpus, and the fact that such corpora are almost by nature not fully representative of the legitimate email sent globally, make this infeasible. Still, experience with running these tests has taught me that emails in non-English languages – particularly those in non-Roman character sets – tend to be harder to filter. Having said that, a significant proportion of the false positives seen in this test were written in English.

It is not true that false positives are something products cannot help: even the emails that were blocked by several products – four emails from the same sender were blocked by eight products (see the explanation in the Spamhaus section below) – were correctly identified as ham by the other products. All other false positives were blocked by just three products or (usually) fewer.

SC rate: 99.88%

FP rate: 0.00%

Final score: 99.88

With the second highest final score in the previous test, there was little room for improvement for Anubis. Despite this, the Portuguese hosted solution was still able to better its last performance: an excellent spam catch rate was combined with zero false positives – the only product in this test to correctly identify all the legitimate mails – which means it earns its third VBSpam award and outperforms all other products.

SC rate: 99.89%

FP rate: 0.21%

Final score: 99.27

BitDefender’s anti-spam solution achieved the highest final score in the previous test and its developers were hoping for a repeat performance this time round. Disappointingly for the developers, the final score wasn’t top of this month’s leader board, but the product’s spam catch rate stayed almost the same, and although there were a handful of false positives this time, the FP rate was still lower than that of many other products. A strong final score means that BitDefender is still the only product to have won a VBSpam award in every single test.

SC rate: 98.50%

FP rate: 0.21%

Final score: 97.87

With a slightly improved spam catch rate and, like most products, a few more false positives than in the previous test, Fortinet wins another VBSpam award with its FortiMail appliance. This is the product’s ninth award in as many tests.

SC rate: 98.05%

SC rate pre-DATA: 97.54%

FP rate: 0.58%

Final score: 96.30

This month sees VIPRE return to the VBSpam test bench after a brief absence, and it returns under a slightly different name – Sunbelt has been acquired by GFI in the meantime. Both Sunbelt and GFI have years of experience in email security, and with their combined force behind the product, VIPRE wins yet another VBSpam award. However, there is certainly room for improvement and either the spam catch rate or the false positive rate – ideally both – must improve if the product is to retain its certified status when the stricter benchmarks are brought in next month.

SC rate: 99.39%

FP rate: 0.04%

Final score: 99.26

From the next test we will be assigning a heavier weight to the false positive score – this change will no doubt be welcomed by Kaspersky’s developers whose product once again achieved an impressively low false positive rate. With a decent and much improved spam catch rate, the Linux product achieves its eighth VBSpam award.

SC rate: 99.78%

SC rate pre-DATA: 98.14%

FP rate: 0.17%

Final score: 99.28

Libra Esva’s false positive rate improved in this test, only missing the four trickiest legitimate emails. The cost was a slight decrease in the product’s spam catch rate, but a solid final score places it among the top five performers in this test. The virtual solution well deserves its fourth VBSpam award.

SC rate: 99.84%

FP rate: 0.71%

Final score: 97.71

I suspected a temporary glitch in the performance of McAfee’s Email Gateway appliance when the spam catch rate dropped significantly in the last test (see VB, September 2010, p.22). It seems I was right, as this time round the product performed very well on filtering spam. It is now the false positive rate that the developers must pay attention to – interestingly enough, more than half of the incorrectly filtered legitimate mails were written in French – however, the product’s performance was still decent enough to earn a VBSpam award.

SC rate: 99.05%

FP rate: 0.21%

Final score: 98.43

McAfee’s Email and Web Security Appliance equalled its spam catch rate of the previous test. Like most products, it had a slightly higher false positive rate on this occasion, but it still easily achieved its eighth consecutive VBSpam award.

SC rate: 99.95%

FP rate: 0.63%

Final score: 98.07

As one of the three products that have participated in all ten VBSpam tests, MessageStream was still able to find room for improvement, scoring the third highest spam catch rate. This wins the product its ninth VBSpam award, despite the relatively high false positive rate. However, the false positive rate must be improved upon if the product is to earn its tenth award next time.

SC rate: 99.99%

FP rate: 0.42%

Final score: 98.74

Neither the company name, OnlyMyEmail, nor the product name, MX-Defender, leaves much room for imagination about what this product does: the customer’s mail servers (or MXs) are defended by having all email routed through this hosted solution, which uses a large number of in-house-developed tests to classify email into ‘ham’, ‘spam’ and other categories such as ‘bulk’ or ‘phishing’. Systems administrators can easily configure the solution through a web interface, and end-users can fine-tune it even more.

Of course, what ultimately matters here are the numbers, and these were rather good: with just seven spam emails missed, the product’s spam catch rate was the highest in this test. There was a handful of false positives, but nevertheless the false positive rate was far from the worst. A VBSpam award is well deserved on the product’s debut.

SC rate: 98.84%

FP rate: 0.42%

Final score: 97.59

Like most products in this test, Pro-Mail’s hosted anti-spam solution saw significantly more false positives this month than previously. Hopefully, this will prove to be only a temporary glitch, possibly caused by being presented with ‘more difficult’ ham. Despite the increase in FPs, the product wins its third consecutive VBSpam award in as many tests, thanks to a significantly improved spam catch rate.

SC rate: 99.92%

FP rate: 0.17%

Final score: 99.42

With just four false positives (the same four as many other products) and a very good spam catch rate, Sophos’s email appliance achieves a final score that puts it in the top five for the third time in a row – the only product that can claim this. We are always keen to stress the importance of judging a product by its performance over several tests in a row, rather than by any single test in isolation, and Sophos’s recent run of test results makes it a very good example of a consistent performer.

SC rate: 99.10%

FP rate: 0.13%

Final score: 98.72

Developers at SPAMfighter’s headquarters in Copenhagen will be pleased to know that the product achieved a significantly improved spam catch rate this month – and that the product’s false positive rate did not increase significantly. A seventh VBSpam award for the Windows Server product is well deserved.

SC rate: 99.97%

FP rate: 0.08%

Final score: 99.72

SpamTitan had just two false positives in this test – not only a significant improvement over the previous test, but also better than all but three of the products in this test. Better still, the product achieved the second highest spam catch rate this month, resulting in the second highest final score. This should make the product’s seventh VBSpam award shine rather brightly.

SC rate: 99.92%

FP rate: 0.63%

Final score: 98.04

Brightmail’s developers will no doubt be a little disappointed with the number of false positives this month, as the product incorrectly flagged more legitimate email in this test than in the five previous tests put together. With a very decent spam catch rate, the product still achieves a VBSpam award though, and hopefully the next test will show that this month’s false positives were simply due to bad luck.

SC rate: 99.75%

SC rate pre-DATA: 99.11%

FP rate: 0.21%

Final score: 99.12

The Email Laundry’s strategy of blocking most email ‘at the gate’ has paid off well in previous tests, and once again, the product blocked over 99% of all email based on the sender’s IP address and domain name alone – a number that increased further when the bodies of the emails were scanned. There were a handful of false positives (including some that were blocked pre-DATA; see the remark in the Spamhaus section below) but that didn’t get in the way of winning a fourth VBSpam award.

SC rate: 99.77%

FP rate: 0.04%

Final score: 99.65

Interestingly, in this test many products appeared to have difficulties with legitimate email written in French, or sent from France. It should come as no surprise that Vade Retro – the only French product in the test – had no such difficulty with these emails, but then it only misclassified one email in the entire ham corpus.

It also had little in the way of problems with the spam corpus, where its performance saw a significant improvement since the last test. The solution wins a fourth VBSpam award in as many attempts with the third highest final score.

SC rate: 98.92%

FP rate: 0.17%

Final score: 98.42

A score of four false positives (the same four legitimate emails that were misclassified by seven other products) is no doubt higher than ORF’s developers would have hoped, but the product still managed to achieve one of the lowest false positive rates in this test. This low FP score combined with a decent spam catch rate results in a very respectable final score, and the Hungarian company wins its fourth VBSpam award in as many consecutive tests.

SC rate: 99.94%

SC rate pre-DATA: 70.36%

FP rate: 0.42%

Final score: 98.69

Just as in the previous test, very few spam emails were returned from Webroot’s servers without a header indicating that they had been blocked as spam. (Most users of the product will have set it up so that these messages are not even sent to their MTAs.) Ten false positives lowered the final score a little, but nowhere near enough to deny the hosted solution another VBSpam award.

SC rate: 98.69%

FP rate: 0.17%

Final score: 98.19

At the recent VB conference, The Spamhaus Project won an award for its contribution to the anti-spam industry over the past ten years. While there will be few in the community who do not think this award was well deserved, spam is constantly changing and no award or accolade can be any guarantee of future performance. Spamhaus is constantly developing though, and recently added two new whitelists to its portfolio of reputation lists (for technical reasons, these weren’t tested).

The lists included in this test – the ZEN combined IP blacklist and the DBL domain blacklist – again blocked a very large number of spam messages, outperforming some commercial solutions. However, for the first time since Spamhaus joined the tests, there were false positives: one IP address, from which four legitimate emails were sent, was incorrectly blacklisted. Further investigation showed that the IP address was dynamic and therefore listed on the PBL – a list of end-user IP addresses that under normal circumstances should not be delivering unauthenticated SMTP email to the Internet. One could well argue that the sender (and/or their ISP) is partly to blame, as such IP addresses – unless explicitly delisted – are likely to be blocked by many a recipient. Still, these were legitimate, non-commercial emails and to their intended recipients, they counted as false positives.
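For readers unfamiliar with how an IP blacklist such as ZEN is consulted, the usual DNSBL convention is to reverse the octets of the IPv4 address, append the list's zone and perform an ordinary DNS lookup; an answer means the address is listed. The sketch below illustrates that convention only. It is not how the products in this test query Spamhaus, the function names are illustrative, and the meaning of any returned code is defined by the list operator and is not interpreted here.

```python
import ipaddress
import socket
from typing import Optional


def dnsbl_query_name(ip: str, zone: str = "zen.spamhaus.org") -> str:
    """Build the DNS name for an IPv4 DNSBL lookup: reversed octets + zone."""
    addr = ipaddress.IPv4Address(ip)  # raises ValueError on malformed input
    reversed_octets = ".".join(reversed(str(addr).split(".")))
    return f"{reversed_octets}.{zone}"


def dnsbl_lookup(ip: str, zone: str = "zen.spamhaus.org") -> Optional[str]:
    """Return the list's answer (an address string) or None if not listed.

    This performs a live DNS query, so the result depends on the resolver
    in use and on the list operator's usage policy.
    """
    try:
        return socket.gethostbyname(dnsbl_query_name(ip, zone))
    except socket.gaierror:
        return None


if __name__ == "__main__":
    # 192.0.2.1 is a documentation address, used only to show the name format.
    print(dnsbl_query_name("192.0.2.1"))
```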

Even though all products achieved a VBSpam award this month, several will have to improve their performance if they are to repeat this in the future. From the next test, the formula used to determine the final score will be the spam catch rate minus five times the false positive rate, and in order to earn VBSpam certification a product’s final score must be at least 97:

SC - (5 x FP) ≥ 97
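To get a feel for the effect of the stricter formula, it can be applied to the rates from this test. The sketch below does so for four products, using the rounded SC and FP rates quoted in their sections; the helper names are illustrative, and since the inputs are rounded the outcome is indicative only and says nothing about how the products will perform in the next test.

```python
# (SC rate %, FP rate %) as quoted in this report; rounded values.
RESULTS = {
    "Anubis": (99.88, 0.00),
    "VIPRE": (98.05, 0.58),
    "SpamTitan": (99.97, 0.08),
    "MessageStream": (99.95, 0.63),
}


def score(sc: float, fp: float, weight: float) -> float:
    """Spam catch rate minus the weighted false positive rate."""
    return sc - weight * fp


def passes(sc: float, fp: float, weight: float, threshold: float) -> bool:
    """True if the weighted score reaches the given threshold."""
    return score(sc, fp, weight) >= threshold


if __name__ == "__main__":
    for name, (sc, fp) in RESULTS.items():
        current = passes(sc, fp, weight=3, threshold=96)   # this test's rule
        upcoming = passes(sc, fp, weight=5, threshold=97)  # next test's rule
        print(f"{name:14s} current={current} upcoming={upcoming} "
              f"new_score={score(sc, fp, 5):.2f}")
```

With these figures, all four products meet the current rule, while VIPRE and MessageStream would fall short of the new one, which matches the remarks made in their sections.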

Next month, products will also see competition from a number of new products whose developers are eager to submit them to the tests to find out how well they perform compared to their competitors.

With the next test we will be back to our normal schedule: the test is due to run throughout the second half of December, with results published in the January issue of Virus Bulletin. The deadline for submission of products will be Monday 6 December. Any developers interested in submitting a product should email [email protected].

Finally, I would like to reiterate that comments, suggestions and criticism of these tests are always welcome – whether referring to the methodology in general, or specific parts of the test. Just as no product has ever scored 100% in this test, there will always be ways to improve the test itself.