Posted on Oct 22, 2019

The debate on responsible disclosure is about as old as IT security itself. In a guest post for Virus Bulletin, Robert Neumann suggests we need to reconsider the one-size-fits-all solution and instead look to a well-respected independent organization to handle security issues. (All views expressed in this article are the author's own and do not necessarily reflect the opinion of any of his associates.)

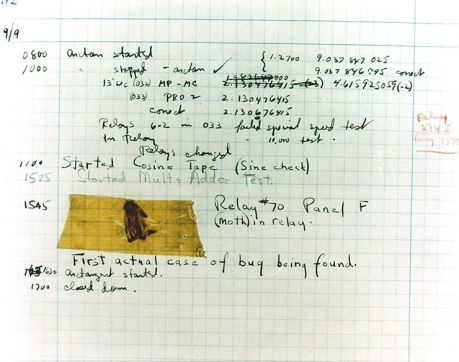

It all started with computer bugs some time back in the 1940s. At that time, a 'bug' literally meant a tiny creature sabotaging the working condition of the very first generation of computers. In the first recorded case it was a moth that was trapped in a relay of an early electromechanical computer.

The first 'computer bug' – a moth found trapped between points at Relay # 70, Panel F, of the Mark II Aiken Relay Calculator while it was being tested at Harvard University, 9 September 1947. The operators affixed the moth to the computer log, with the entry: "First actual case of bug being found". Source: Naval History and Heritage Command.

Fast forward to the present and a 'bug' still represents a malfunction of a piece of equipment or computer program – but with some differences. Due to current architectural concepts bugs can also be (ab)used to execute unwanted code on the system. That doesn't sound good, does it? Unwanted execution of arbitrary code on any system is very close to the top of the 'never want to see happen' list. Software can crash, hardware can fail, but execution of unwanted code is much worse – it usually means something is happening silently, without us being aware of it, and the intention is rarely good.

To understand how we ended up in this situation we have to go back in time. In the late 1990s, when Jonathan "HardC0de" Jack created his new vision of an MP3 playback application called The Player and made it publicly available for anyone to download, very few users – if any at all – cared about the quality of his code from a security standpoint. Provided that the application worked as intended and let you listen to your favourite music in digital form, nobody gave the code a second thought, unless it crashed and playback stopped. Bug reports were few and far between, they were not as easily submitted as they are today, and fixing them could take a considerable amount of time. The reasons varied: lack of resources, other priorities, or simple ignorance. Still, those bugs mainly affected user experience, and thus it made sense for them to be fixed in the end. The decades-old debate about usability versus security (which many believe to be polar opposites) may also have started here.

In this brave new world Jonathan probably didn't have the time or desire to check his code for perfect input sanitization or to use the 'secure' functions of his preferred programming language, as these factors did not alter the user experience at all. Even if he had been receiving bug reports, he probably still wouldn't have cared to examine the so-called 'vulnerabilities': bugs that introduced weaknesses which could ultimately result in malicious code execution.

As we all know, those days are long gone. If Jonathan's software were used today by as few as a couple of thousand people and contained a known remote code execution (RCE) weakness, it could be considered a cheap target. If the user base was in the millions, it could be considered a good target; above that, it would be a great target. The only question would be who would be willing to pay, and how much, to get their hands on the details of the vulnerability. Or, in the more fortunate event of the vulnerability being discovered by responsible individuals, how much time Jonathan would be allowed to fix it before it all went public.

The software landscape has changed drastically over the intervening years, and with it so has the market for unpatched vulnerabilities and zero-days. While just three decades ago computer viruses and malicious code were either experimental or something used to 'show off', today there is a whole ecosystem built upon them. People are not just witnessing software bugs and application crashes, but are actively trying to make them happen. The saner part of your brain would probably ask: what for? Is the world going to be a better place once someone has fuzzed the hell out of an MP3 player's playlist handling code? Probably not. Is there a way to make money out of that? Surely there is.

It should be no surprise that money is the main incentive for finding vulnerabilities in popular software applications. The other incentive is pride. When it comes to pride, Google's Project Zero initiative is surely one of the top contenders. Today, responsible disclosure usually means offering a software vendor 90 days to fix a privately disclosed vulnerability and make the fix available to their user base. Ninety days! That's it! While many in the various 'coloured hat' communities (grey, black, white) would consider this to be more than enough time, it's often a different story for the vendors involved. The unfortunate fact is that members of those communities often have no experience of enterprise-level software engineering environments. Consequently, they can make incorrect assumptions about what the complete build/QA/release cycle of such companies looks like, and more precisely how much time it takes under normal circumstances. To make things worse, these vulnerabilities come completely out of the blue, and will likely interfere with already scheduled product releases and with resources allocated to new features or to fixing other known bugs.

There is a huge difference when we compare Jonathan's one-man MP3 playback software with a global software vendor with a broad multi-platform portfolio. While it might only take hours or days for the former to fix and publish a new build of his application mitigating an RCE, it could take weeks or months for the latter. This is the point where people advocating the release of a PoC after 90 days would say 'they had enough time for it'. Is this really the case all of the time, most of the time – or at all?

Is there a decent understanding of what it takes for such a vendor to fix a relatively simple-looking RCE? A given vendor might have products for different markets (consumer/pro) and for different platforms and architectures (client-based, server-based, cloud-based, x86, x64, ARM), with multiple products in each category, and every single one of them supporting the vulnerable feature. Not all of them are necessarily broken, but that has to be investigated. Depending on how flexible the architecture is, fixing even a simple vulnerability in such a product often means doing a full build of the application itself. These new builds will almost certainly contain other fixes and improvements, and any of those failing QA might result in restarting the whole cycle (over and over) again.

While internal testing can be challenging for the reasons described above, it is still mainly controlled by the vendor itself. If they feel the need to drop a number of unit tests, or to exclude certain outdated hardware configurations or software platforms in order to speed up the QA process, it is up to them to do so. Companies interested in OEM deals might not have the same luxury of (total) control. Contractual agreements come into play, and it will be up to their external partners to carry out tests that meet their own requirements. Turning the tables, what would you do if your software was affected by an external library – with a known vulnerability – over which you have absolutely no control?

Irrespective of size, everyone is working against the clock all the time. Failing to deliver a fix within 90 days can easily happen even with the best efforts and the right intentions.

As with everything, there are vendors who take all this very seriously, and some that do not. Right now, Microsoft is very good at its vulnerability management and patching. The much-maligned software giant has previously been in the crosshairs of security researchers when its approach towards security could be considered somewhat naïve, but those days are long gone. A lot of time and effort has gone into fixing bugs – especially security-related ones – in a timely fashion, and those actions reflect a renewed approach towards security and secure computing. It hasn't always been the case, but lessons have been learned the hard way to get us to a mindset of 'security first' instead of 'security last'.

Having said that, it's really disappointing to see some security researchers apparently pushing their own personal agenda against particular vendors. Was the 90-day grace period ever discussed with representatives of the software industry? Should it have been? It seems clear that a grace period prior to disclosure is a fair halfway house between full disclosure and security through obscurity, but how is the length of that period decided, and by whom? Should it be variable depending on the complexity and damage potential of the vulnerability in question? The saying 'Patch Tuesday, zero-day Wednesday' was commonly used throughout the security community in reference to how quickly cybercriminals start to exploit newly published information. Is bullying software vendors into patching within a certain timeframe 'or else' really that responsible?

There are no easy answers to the questions above, but one thing has become evident over the years: the way security issues are currently being handled is far from practical. Having a grace period is a fundamental requirement, but the one-size-fits-all mentality needs to be reconsidered. The software engineering industry needs either a well-respected independent organization responsible for handling security issues by providing an established framework, or yet another regulatory compliance regime similar to GDPR. No matter which, transparent discussion must be opened up with software giants such as Amazon, Apple, Facebook, Google and Microsoft, as some of them will be the slowest moving. This could work in favour of both sides: companies reluctant to review their existing internal policies and build processes would be called out (or fined, in the case of regulatory compliance) on repeated security issues, while PoCs would still be kept at bay until a fix is available. A good example of such an independent organization – although for a different purpose – is AMTSO. It has had its fair share of battles throughout the years, but in the end, it was well worth it. Unfortunately, the scale and stakes are much larger here.