Posted on Sep 4, 2019

Over the last few years, SE Labs has tested more than 50 different security products against over 5,000 targeted attacks. In this guest blog post, Stefan Dumitrascu, Chief Technical Officer at SE Labs, looks at the different attack tools available, how effective they are at helping attackers bypass anti-malware products, and how security vendors have been handling this type of threat for over a year.

If the headline is a question, the answer is usually 'no'. We emulated a series of targeted attacks, running them in a realistic way and using publicly available hacking tools, and the results were surprising. Acting as attackers, we were far more successful than we had predicted. Using free tools that are widely distributed on the Internet, we were able to compromise large numbers of systems, often without detection.

The good news is that, as we have worked closely with security vendors, their products have improved over time. We have shared over half a terabyte of data with security partners in an effort to help strengthen the protection provided by their products.

It is also interesting to see which hacking approaches were most effective. We used a combination of techniques, including code injection, anti-malware evasion frameworks and PowerShell-based attacks.

The results show that many endpoint products detect most of the attacks. However, while anti-malware evasion tools were mostly detected and prevented, injection techniques resulted in higher levels of compromise. PowerShell is currently an excellent way to break into systems, with far higher levels of compromise than the other methods.

Products were generally poor at cleaning up after a detected attack.

We have also tested email security services with many of these attacks, and our public reports show that email remains an effective route for attackers. Combined with a good endpoint product, things don't look too hopeless, but you would be ill-advised to rely solely on an email gateway right now.

When we started testing with targeted attacks, we considered the options that the security industry would most easily understand and accept. The obvious answer was Metasploit, a framework for building and executing attacks against a variety of targets. We were worried that every attack we generated would be detected, as Metasploit was such a well-known platform. But the results told a different story.

Initially, we found that even the standard Metasploit modules were enough to differentiate between the efficacy of products. However, as we progressed, we noticed security products getting better at dealing with standard threats built using Metasploit. Time for a change! Just like a real attacker, we moved to new tools and techniques. We have now tested using five publicly available tools.

Using publicly available tools allows both our enterprise customers and our partner vendors to replicate the results to check that they are accurate. This transparency allows us to help vendors improve their products rather than just giving them a shiny badge for their marketing departments.

Aren't these attacks just the domain of script kiddies? While these attacks won't reflect the capability of an attacker with the resources of a nation-state, they are still harmful and sufficiently advanced to show a difference in the efficacy of the products in a test. On top of that, there are many reports of public tools being used in malicious campaigns. At the end of the day, criminals will use the easiest way to reach their goal, and using public tools is easier than writing malware.

The following table shows the success rates we have enjoyed using different tools:

| Toolkit | Compromise rate with no detection (2018-2019) |
| --- | --- |
| Shellter | 4% |
| Veil | 4% |
| PowerShell Empire | 20% |

For more details about these tools, read on.

Before we get stuck into describing the tools that we've used and their relative success rates, it's worth having a quick look at how we score products and the terms we use, because these appear later in the article.

When we say a product 'blocked' a threat, we mean that it stopped any malicious activity on the target system, including the execution of malware. If a product stops malicious activity after it starts to occur, then the result is a 'neutralization'. A good example is letting an executable file run and then stopping the threat before it can achieve its evil goal.

Both blocked and neutralized results mean that the product successfully stopped the threat, albeit at different stages. Alongside these results, we also record 'complete remediation'. To achieve this happy state, the product must delete all significant traces of the attack from the system. These are the good results.

Blocked or Neutralized = Protection

Protection + Complete Remediation = Complete Protection

A 'compromised' result means that the attacker was successful in gaining access to the target system. This is split further into different stages of the attack, which we label as 'access', 'action', 'escalation' and 'post escalation action'. To learn more about these, and how we test using the full attack chain, please read our 2019 Annual Report [1] or any of our enterprise endpoint protection test reports [2].
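The scoring rules above can be expressed as a small decision function. This is a minimal sketch of our own, not SE Labs' actual scoring code: the `Outcome` record, its fields (`stopped_before_execution`, `stopped_after_execution`, `fully_remediated`) and the `score` function are hypothetical names, and the sketch does not break a compromise down into the access, action, escalation and post-escalation stages.

```python
from dataclasses import dataclass


@dataclass
class Outcome:
    """Hypothetical record of one test case; field names are illustrative only."""
    stopped_before_execution: bool  # product prevented any malicious activity
    stopped_after_execution: bool   # product stopped the threat once it had started
    fully_remediated: bool          # all significant traces of the attack removed


def score(outcome: Outcome) -> str:
    """Map one test outcome to a result label, following the definitions above."""
    if outcome.stopped_before_execution:
        result = "Blocked"
    elif outcome.stopped_after_execution:
        result = "Neutralized"
    else:
        # The attacker gained access; a real test would also record the stage reached.
        return "Compromised"
    # Blocked or Neutralized = Protection; complete remediation upgrades the result.
    if outcome.fully_remediated:
        return f"{result} (Complete Protection)"
    return f"{result} (Protection)"


print(score(Outcome(False, True, False)))   # Neutralized (Protection)
print(score(Outcome(True, False, True)))    # Blocked (Complete Protection)
print(score(Outcome(False, False, False)))  # Compromised
```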

One of the first tools we tested with was Shellter [3], an injection tool that can be used to inject shellcode into Windows applications. You can consider it a tool for creating trojans. Shellter is simple to use, and we enjoyed it for its ability to produce great results (for the attacker). In the real world of criminal behaviour, we have seen it used by the Dragonfly threat group [4] against the energy industry.

We have now run over 1,300 tests using malicious files generated with this tool. These executables were delivered as downloads via a web browser. Products detected 96 per cent of the attacks built with Shellter. While this seems like a good ratio for the defender, the more worrying statistic is a compromise rate of 20 per cent, especially when you consider the tool's low barrier to entry and ease of use.

How is it possible to have a detection rate of 96 per cent and a compromise rate of 20 per cent? A security product can detect a threat but still fail to protect against it! The table below shows how the products we have tested for over a year have handled Shellter-based threats:

| Shellter detection and protection rates | 2018 Q1 | 2018 Q2 | 2018 Q3 | 2018 Q4 | 2019 Q1 |
| --- | --- | --- | --- | --- | --- |
| Detection rate | 97% | 95% | 94% | 100% | 96% |
| Protection rate | 79% | 74% | 75% | 88% | 94% |
| Complete Protection | 41% | 45% | 49% | 66% | 76% |
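The detection and compromise figures overlap because a product can raise an alert and still let the attack through. The sketch below uses made-up counts for 100 hypothetical Shellter test cases (illustrative only, not SE Labs data) and assumes every undetected attack succeeds. It shows how a 96 per cent detection rate and a 20 per cent compromise rate can both hold: the compromised cases include undetected attacks as well as attacks that were detected but not stopped.

```python
# Hypothetical counts for 100 test cases; these are illustrative, not SE Labs data.
outcomes = {
    "detected_and_stopped": 80,
    "detected_not_stopped": 16,  # alert raised, but the attacker still got in
    "undetected": 4,             # assumed: no alert at all and the attack succeeded
}

total = sum(outcomes.values())
detected = outcomes["detected_and_stopped"] + outcomes["detected_not_stopped"]
compromised = outcomes["detected_not_stopped"] + outcomes["undetected"]

detection_rate = 100 * detected / total
compromise_rate = 100 * compromised / total

print(f"Detection rate:  {detection_rate:.0f}%")   # 96%
print(f"Compromise rate: {compromise_rate:.0f}%")  # 20%
```

The two rates add up to more than 100 per cent because the 16 "detected but not stopped" cases are counted in both.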

We can see that the detection rate is fairly stable, averaging around 96 per cent. More impressively, the rate at which products completely protect against the threat rises steadily from around 40 per cent to over 75 per cent 15 months later. This shows that the security vendors we work with have made improvements to their products that, in turn, have a positive impact on their customers: you and me.
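The arithmetic behind those two observations can be checked directly against the Shellter table. This minimal sketch takes an unweighted mean of the quarterly percentages, which is only an approximation if the number of tests varied from quarter to quarter.

```python
from statistics import mean

# Quarterly Shellter figures copied from the table above (2018 Q1 to 2019 Q1).
detection = [97, 95, 94, 100, 96]
complete_protection = [41, 45, 49, 66, 76]

avg_detection = mean(detection)  # unweighted mean of the quarterly rates
gain = complete_protection[-1] - complete_protection[0]

print(f"Average detection rate: {avg_detection:.1f}%")       # 96.4%
print(f"Complete Protection gain: {gain} percentage points")  # 35
```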

The goal of an evasion framework is to help an attacker create threats that can evade detection by anti-malware products. We use these in our tests with varying success.

One of the most popular evasion frameworks is Veil [5] and we used it for a full year before swapping it out for Phantom Evasion.

During the year that we used it, we ran 1,665 Veil-powered attacks. While the detection rate was similar to Shellter's, at around 96 per cent, Veil allowed us to compromise the target systems only 13 per cent of the time. The overall Complete Protection rating against Veil averaged 69 per cent for the year.

| Veil detection and protection rates | 2018 Q1 | 2018 Q2 | 2018 Q3 | 2018 Q4 |
| --- | --- | --- | --- | --- |
| Detection | 96% | 96% | 94% | 100% |
| Protection | 81% | 82% | 87% | 92% |
| Complete Protection | 63% | 68% | 70% | 75% |
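Averaging the four quarterly rows reproduces the roughly 96 per cent detection and 69 per cent Complete Protection figures quoted above. This sketch assumes that the compromise rate is simply 100 minus the protection rate. On that basis, the same unweighted average implies about 14.5 per cent compromise rather than the 13 per cent quoted for the 1,665 individual attacks. We have not verified the cause. However, an average of rounded quarterly percentages need not match a figure pooled over every test, particularly when quarters contain different numbers of tests.

```python
from statistics import mean

# Quarterly Veil figures copied from the table above (2018 Q1 to 2018 Q4).
detection = [96, 96, 94, 100]
protection = [81, 82, 87, 92]
complete_protection = [63, 68, 70, 75]

print(f"Detection:           {mean(detection):.1f}%")            # 96.5%
print(f"Protection:          {mean(protection):.1f}%")           # 85.5%
print(f"Complete Protection: {mean(complete_protection):.1f}%")  # 69.0%

# Assumption: every test is either protected (blocked/neutralized) or compromised.
print(f"Implied compromise:  {100 - mean(protection):.1f}%")     # 14.5%
```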

We have found PowerShell Empire [6] to be the most exciting weapon in our tool set. It offers a wide range of capabilities and, unless users block all PowerShell scripts outright, an attacker using it is likely to be successful. So far in our endpoint protection tests we have only scratched the surface of Empire, having launched just 470 attacks, but it has already generated some of the best results when it comes to compromising systems.

Security products detect only around 80 per cent of the PowerShell Empire threats we have tested, which makes it by far the best of these tools at evading detection. We have achieved a success rate of 20 per cent with it and, when it succeeds, victims are not notified by their security products. With any of the other tools, there is usually at least some sort of notification.

| PowerShell Empire detection and protection rates | 2018 Q3 | 2018 Q4 | 2019 Q1 |
| --- | --- | --- | --- |
| Detection | 77% | 78% | 86% |
| Protection | 70% | 75% | 86% |
| Complete Protection | 54% | 75% | 60% |
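The per-quarter detection rates can be related back to the no-detection compromise rates in the first table. This sketch relies on our own simplifying assumption that any attack no product detects always succeeds, so the undetected share is just 100 minus the detection rate. Averaging the quarterly detection figures this way gives roughly 3.6, 3.5 and 19.7 per cent for Shellter, Veil and PowerShell Empire, close to the 4, 4 and 20 per cent reported.

```python
from statistics import mean

# Quarterly detection rates copied from the tool tables in this article.
detection_by_tool = {
    "Shellter": [97, 95, 94, 100, 96],
    "Veil": [96, 96, 94, 100],
    "PowerShell Empire": [77, 78, 86],
}

# Simplifying assumption: every undetected attack counts as a silent compromise.
undetected = {tool: 100 - mean(rates) for tool, rates in detection_by_tool.items()}

for tool, rate in undetected.items():
    print(f"{tool:<18} {rate:4.1f}% undetected")
```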

We have also used two other frameworks as replacements for Veil: Phantom Evasion [7] and Metasploit's [8] newly introduced evasion modules. It's early days, so we don't have enough data yet to compare their success rates directly with the other tools. However, here are some early figures:

| Phantom Evasion detection and protection rates | 2019 Q1 |
| --- | --- |
| Detection | 97% |
| Protection | 91% |
| Complete Protection | 69% |

| Metasploit Evasion detection and protection rates | 2018 Q4 | 2019 Q1 |
| --- | --- | --- |
| Detection | 100% | 99% |
| Protection | 86% | 94% |
| Complete Protection | 55% | 77% |

Looking at the data from the past 15 months, it's great to see the improvement made by the security vendors when dealing with increasingly advanced attacks. There is always something new around the corner and ways in which we can improve our tests. However, transparency in what we do helps everyone understand our work better and hopefully improves the efficacy of the tested products.

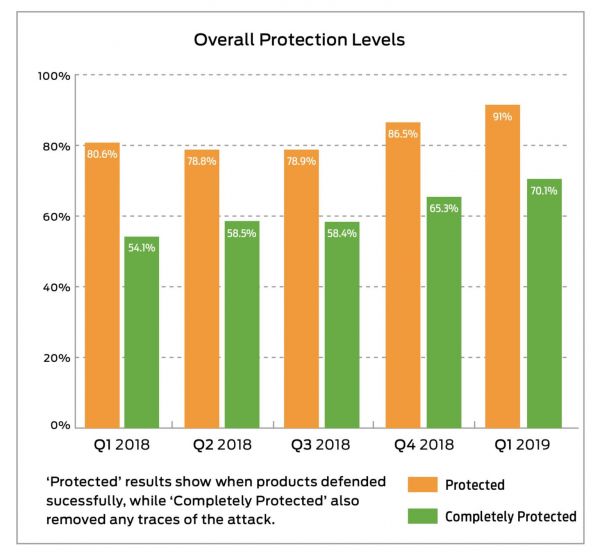

Overall protection levels in the past 15 months.

The tables in this article, which we have pulled from our raw test data, show a general improvement in protection rates over time. So rather than take the 'doom and gloom' approach you see reported so often when a new breach is uncovered, security vendors and testers should work together to improve protection for those who really matter: the end-users.

[1] https://selabs.uk/download/enterprise/ar/2019-selabs-annual-report-1-0.pdf.

[2] https://selabs.uk/en/reports/enterprise.

[3] https://www.shellterproject.com/.

[4] https://www.infosecurity-magazine.com/news/dragonfly-20-attackers-probe/.

[5] https://github.com/Veil-Framework/Veil.

[6] https://github.com/EmpireProject/Empire.