Posted by Martijn Grooten on Aug 24, 2017

Authors of security software in general, and anti-virus software in particular, have always needed to find the right balance between a high detection rate and a low false positive rate – something that has become even more important with advances in machine-learning detection technologies. Making a model too aggressive will result in false positives, while making it too lenient will result in missed malware – and in both cases the result is unhappy customers.

To help guide the decision as to where to pitch that balance, Microsoft researchers Holly Stewart and Joe Blackbird came up with the interesting idea of using the wisdom of the crowd: they decided to use Microsoft's telemetry to look at what kind of errors (false positives or false negatives) had prompted end-users to switch anti-virus solutions.

In their paper, which Holly will present at VB2017 in Madrid, the researchers discuss what the data showed; they also looked at how different user types, or users in different geographic locations, may respond differently to errors made by their anti-virus solutions. It is an interesting approach that will likely be useful to other security software developers who have to make similar decisions when it comes to applying their machine-learning models.

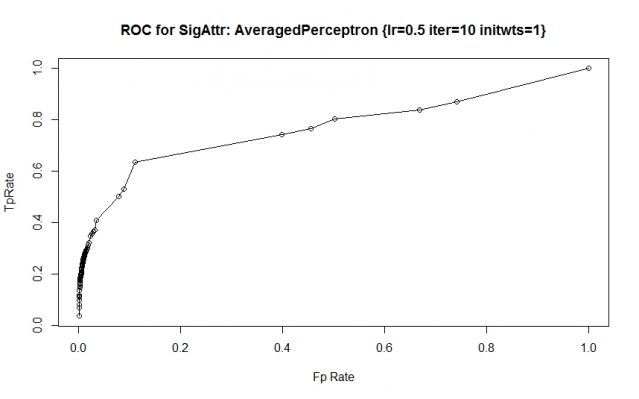

A Receiver Operating Characteristic (ROC) curve, which plots the true positive (correct detection) rate against the false positive (incorrect detection) rate.
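
To make the trade-off behind such a curve concrete, here is a minimal Python sketch of how its points can be computed by sweeping a detection threshold over a classifier's scores. The function name `roc_points`, the scores and the labels are all made up for illustration; none of this comes from the Microsoft paper, and it assumes that a higher score means 'more likely to be malware'.

```python
def roc_points(scores, labels):
    """Return (threshold, fpr, tpr) tuples, one per distinct score.

    scores: classifier outputs; higher is assumed to mean "more likely malware".
    labels: ground truth, 1 for malware and 0 for clean files.
    """
    positives = sum(labels)
    negatives = len(labels) - positives
    points = []
    # Start with the most conservative threshold and relax it step by step.
    for threshold in sorted(set(scores), reverse=True):
        flagged = [s >= threshold for s in scores]
        tp = sum(1 for f, y in zip(flagged, labels) if f and y == 1)
        fp = sum(1 for f, y in zip(flagged, labels) if f and y == 0)
        points.append((threshold, fp / negatives, tp / positives))
    return points


# Made-up scores for illustration only; not data from the paper.
scores = [0.95, 0.80, 0.70, 0.60, 0.40, 0.30, 0.20, 0.10]
labels = [1, 1, 0, 1, 0, 1, 0, 0]

for threshold, fpr, tpr in roc_points(scores, labels):
    print(f"threshold={threshold:.2f}  FPR={fpr:.2f}  TPR={tpr:.2f}")
```

Lowering the threshold never decreases either rate: every extra piece of malware caught comes at the risk of flagging more clean files. Choosing where to pitch the balance amounts to picking one point on this curve.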

You can still register for VB2017 to hear more about this research and to learn from the work of more than 50 security researchers from all over the world!